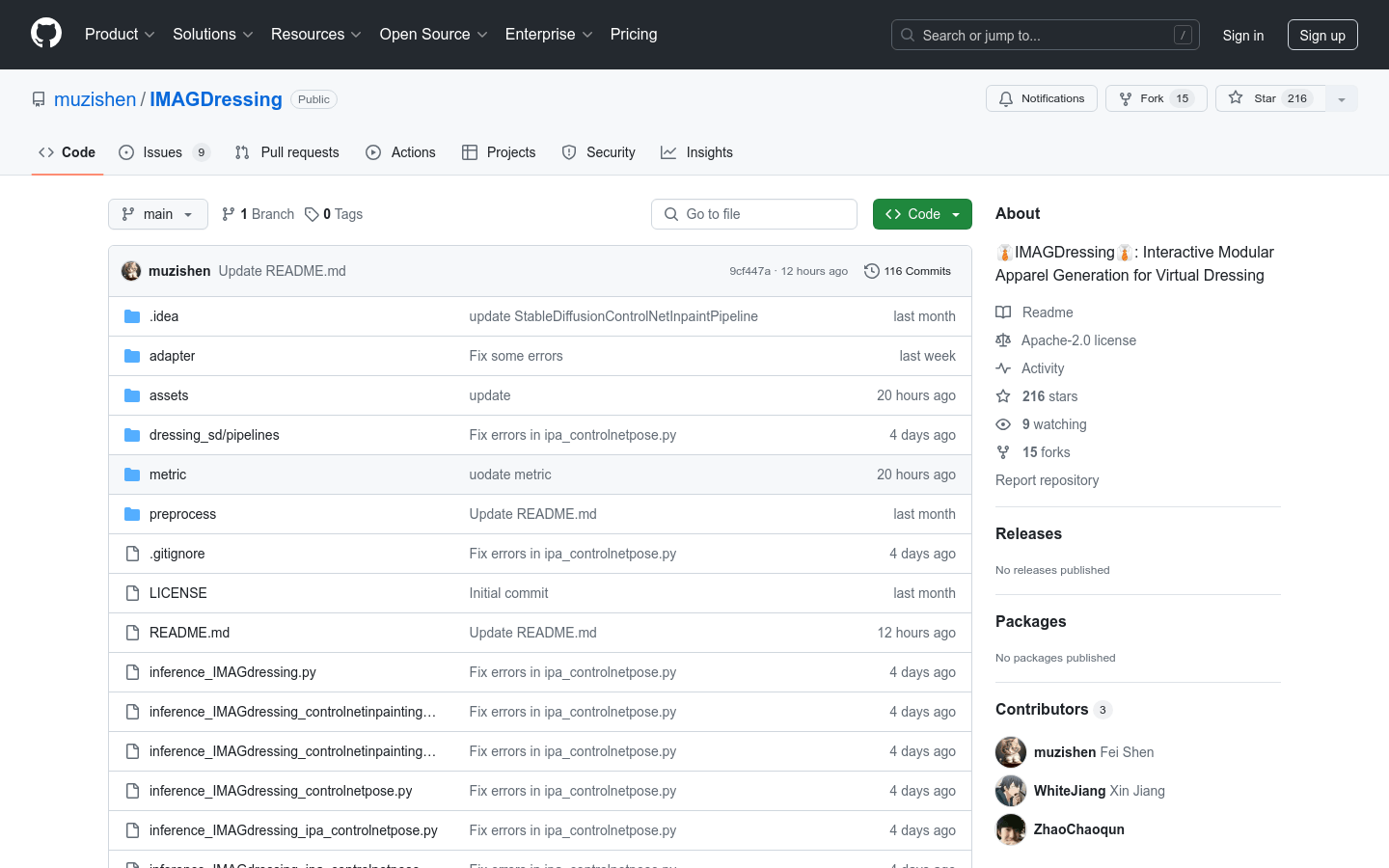

IMAGDressing is an interactive, modular clothing generation model designed to provide flexible, controllable customization for virtual try-on systems. It combines semantic features from CLIP with texture features from a VAE and injects them into the denoising UNet through a hybrid attention module, so that users can control the editing. IMAGDressing also releases the IGPair dataset, which contains more than 300,000 pairs of clothing images and images of that clothing being worn, together with a standard data assembly pipeline. The model can be used with extension plug-ins such as ControlNet, IP-Adapter, T2I-Adapter, and AnimateDiff to increase diversity and controllability.
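To make the feature fusion described in the paragraph above more concrete, here is a toy NumPy sketch of one way a hybrid attention layer can combine two feature sources. It is an illustration under stated assumptions, not IMAGDressing's code: learned query/key/value projections, multiple heads, and batching are omitted, and the split into a texture branch (UNet tokens attending to themselves plus VAE-derived garment tokens) and a separately weighted semantic branch (cross-attention to CLIP-derived tokens) is modeled on common reference-attention and IP-Adapter-style designs. The function names and the `scale` parameter are hypothetical.

```python
import numpy as np


def attention(q, k, v):
    """Scaled dot-product attention: softmax(q @ k.T / sqrt(d)) @ v."""
    d = q.shape[-1]
    scores = q @ k.T / np.sqrt(d)
    scores -= scores.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)  # each row sums to 1
    return weights @ v


def hybrid_attention(unet_tokens, vae_texture_tokens, clip_semantic_tokens, scale=1.0):
    """Hypothetical hybrid attention: texture branch plus weighted semantic branch.

    All inputs are (num_tokens, dim) arrays with the same dim. Projections are
    omitted, so tokens are used directly as queries, keys, and values.
    """
    # Texture branch (assumption): UNet tokens attend to themselves and to
    # garment texture tokens, which here stand in for VAE-derived features.
    kv = np.concatenate([unet_tokens, vae_texture_tokens], axis=0)
    texture_out = attention(unet_tokens, kv, kv)
    # Semantic branch (assumption): cross-attention to CLIP-derived tokens,
    # weighted by `scale` so its influence can be tuned or switched off.
    semantic_out = attention(unet_tokens, clip_semantic_tokens, clip_semantic_tokens)
    return texture_out + scale * semantic_out
```

Setting `scale` to 0 disables the semantic branch, which is one simple way such a layer can expose how strongly the semantic conditioning steers the output.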

Target audience:

IMAGDressing is aimed mainly at clothing designers, virtual fitting software developers, and researchers. Its realistic clothing generation and flexible customization help designers preview design effects quickly, help software developers build virtual fitting features, and give researchers a tool for exploring related fields.

Example usage scenarios:

Clothing designers use IMAGDressing to generate clothing renderings in different styles.

Virtual fitting software developers use IMAGDressing to let their users try on clothes online.

Researchers use IMAGDressing to study clothing generation and virtual try-on.

Product Features:

Simple architecture: Generates realistic clothing and supports user-driven scene editing.

Flexible plug-in compatibility: Integrates with extension plug-ins such as IP-Adapter, ControlNet, T2I-Adapter, and AnimateDiff.

Fast customization: Customizes results in seconds without additional LoRA training.

Text prompt support: Accepts text prompts to tailor the output to different scenarios.

Supports clothing replacement in designated areas (experimental feature).

Supports use with ControlNet and IP-Adapter.

IGPair dataset: Contains over 300,000 pairs of clothing images and images of that clothing being worn.
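The region-restricted clothing replacement listed above is described only at a high level. A common technique for this kind of edit in diffusion pipelines is mask blending: a binary (or soft) mask selects which positions take newly generated content, while everything else is copied from the original. The NumPy sketch below shows only that blending step; `blend_region` is a hypothetical helper, and whether IMAGDressing's experimental feature works this way is an assumption.

```python
import numpy as np


def blend_region(original, generated, mask):
    """Keep `generated` where mask == 1 and `original` where mask == 0.

    original, generated: arrays of shape (H, W, C), e.g. images or latents.
    mask: array of shape (H, W) with values in [0, 1]; fractional values
    give a soft transition at the edges of the designated area.
    """
    if original.shape != generated.shape:
        raise ValueError("original and generated must have the same shape")
    m = mask[..., None]  # add a channel axis so the mask broadcasts over C
    return m * generated + (1.0 - m) * original
```

In latent-diffusion inpainting, a blend like this is often applied at each denoising step (with the original latents noised to the matching timestep), which keeps the area outside the mask consistent with the source image.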

Usage tutorial:

1. Install the Python environment (Anaconda or Miniconda is recommended).

2. Install PyTorch 2.0.0 or later.

3. Install CUDA (version 11.8).

4. Clone the IMAGDressing GitHub repository.

5. Install the dependency library: Run `pip install -r requirements.txt`.

6. Download and install the required model files, such as the humanparsing and openpose models.

7. Run the IMAGDressing inference code to perform clothing generation and virtual try-on.

8. As needed, combine IMAGDressing with extension plug-ins such as ControlNet and IP-Adapter to extend its functionality.
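Before running inference, it can help to confirm that steps 1 to 3 took effect. The script below is a minimal sketch that is not part of the IMAGDressing repository; `version_tuple`, `meets_minimum`, and `check_environment` are hypothetical helper names. From PyTorch it uses only `torch.__version__`, `torch.version.cuda`, and `torch.cuda.is_available()`. Note that `torch.version.cuda` reports the CUDA version the installed PyTorch build was compiled against, which can differ from a separately installed system toolkit.

```python
import re
import sys


def version_tuple(version):
    """Turn a version string like '2.1.0+cu118' into (2, 1, 0).

    The local suffix after '+' is dropped, and pre-release tags such as
    '0a0' are reduced to their leading digits.
    """
    core = version.split("+")[0]
    parts = []
    for piece in core.split(".")[:3]:
        match = re.match(r"\d+", piece)
        parts.append(int(match.group()) if match else 0)
    while len(parts) < 3:
        parts.append(0)
    return tuple(parts)


def meets_minimum(version, minimum):
    """Return True if `version` is at least `minimum` (major.minor.patch)."""
    return version_tuple(version) >= version_tuple(minimum)


def check_environment():
    """Report whether PyTorch and its CUDA build match tutorial steps 2 and 3."""
    print(f"Python {sys.version.split()[0]}")
    try:
        import torch
    except ImportError:
        print("PyTorch is not installed (see step 2).")
        return False
    ok = meets_minimum(torch.__version__, "2.0.0")
    print(f"PyTorch {torch.__version__}: {'OK' if ok else 'needs >= 2.0.0'}")
    cuda = torch.version.cuda  # None for CPU-only builds
    print(f"Built against CUDA {cuda}; GPU available: {torch.cuda.is_available()}")
    if cuda is None or not cuda.startswith("11.8"):
        print("Note: the tutorial lists CUDA 11.8.")
    return ok


if __name__ == "__main__":
    check_environment()
```

Running it with `python` from the cloned repository's environment prints the detected versions; a CPU-only PyTorch build reports `None` for CUDA.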