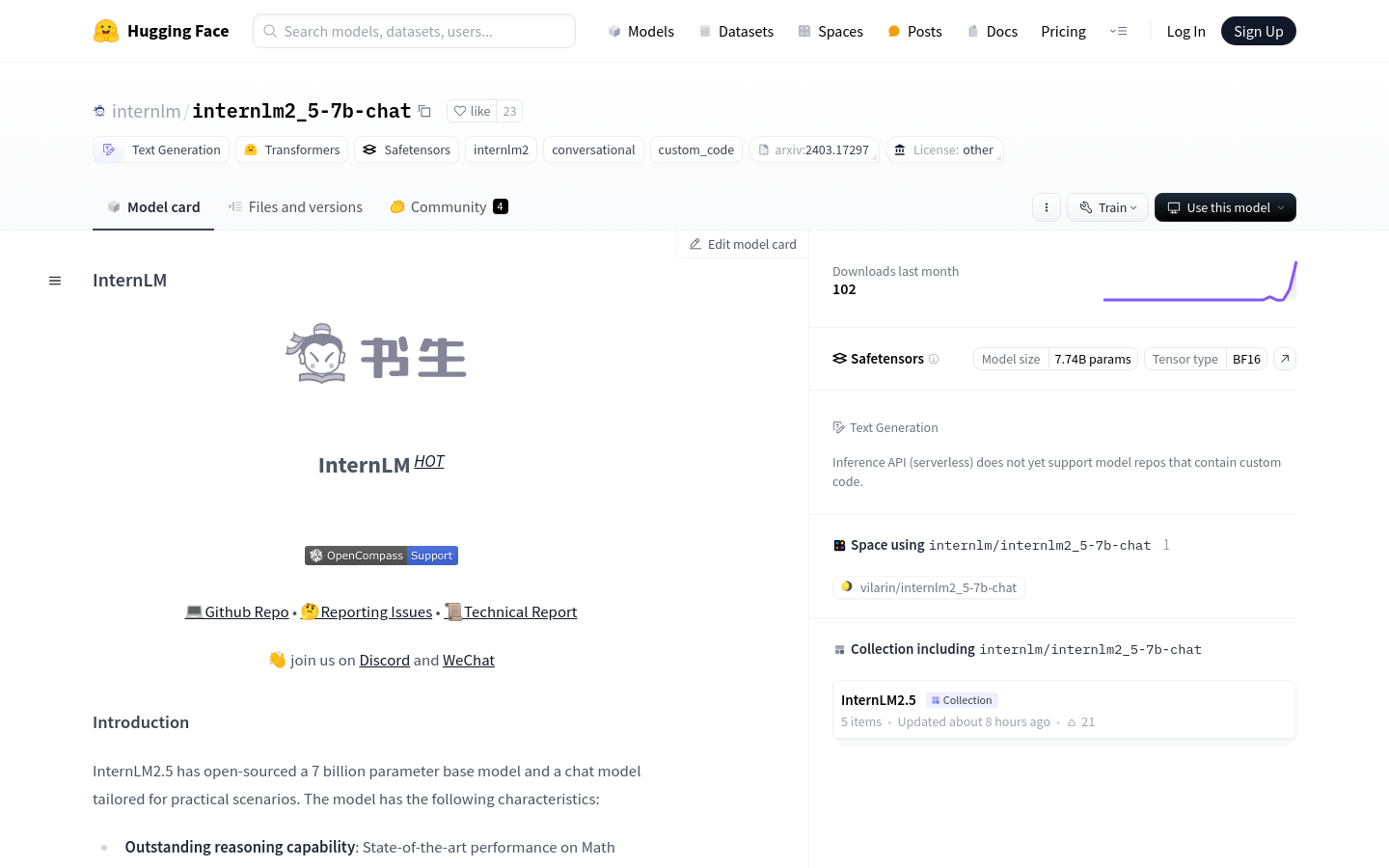

InternLM2.5-7B-Chat product introduction

InternLM2.5-7B-Chat is an open-source Chinese conversational model with 7 billion parameters, designed for practical scenarios. It has strong reasoning capabilities and is reported to outperform models such as Llama3 and Gemma2-9B on mathematical reasoning. The model can collect information from a large number of web pages and then analyze and draw inferences from it. It also has strong tool-calling capabilities and supports ultra-long context windows, which makes it suitable for processing long texts and building agents for complex tasks.

Target users

The target users of this model are enterprises and research institutions that need to perform complex conversation processing, long text analysis and information collection. It is ideal for building applications such as intelligent customer service, personal assistants, and educational tutoring.

Usage scenario examples

Intelligent customer service systems that provide automated replies around the clock

Personal assistants that help users manage schedules and remind them of important matters

Educational tools that assist students with learning, offer personalized study suggestions, and answer questions

Product features

Excellent mathematical reasoning ability, surpassing models of comparable size

Supports ultra-long context windows, suitable for long text processing

Collects information from many web pages and performs analysis and inference on it

Ability to understand instructions, select tools, and reflect on results

Supports deployment through LMDeploy and vLLM, which can serve the model as an API

The code is open source under the Apache-2.0 license, and the model weights are fully open for academic research.
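As an illustration of the API service mentioned in the feature list, here is a minimal client sketch. It assumes the model is already being served behind an OpenAI-compatible HTTP endpoint, which both LMDeploy and vLLM can provide. The host, port, model name, and the helper names `build_chat_request`, `send_chat_request`, and `extract_reply` are assumptions made for this example, not part of the original page; check your server's `/v1/models` endpoint for the actual model name.

```python
import json
import urllib.request

# Assumption: a server was started separately, for example with
#   lmdeploy serve api_server internlm/internlm2_5-7b-chat --server-port 23333
# or
#   vllm serve internlm/internlm2_5-7b-chat --trust-remote-code
# Adjust the URL and model name to match your own deployment.
BASE_URL = "http://localhost:23333/v1"        # assumed host and port
MODEL_NAME = "internlm/internlm2_5-7b-chat"   # assumed served model name


def build_chat_request(prompt, model=MODEL_NAME, temperature=0.8, max_tokens=512):
    """Build the JSON body for an OpenAI-style chat completion request."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,
        "max_tokens": max_tokens,
    }


def send_chat_request(payload, base_url=BASE_URL, timeout=60):
    """POST the payload to /chat/completions and return the decoded JSON reply."""
    request = urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


def extract_reply(response_json):
    """Pull the assistant's text out of an OpenAI-style response body."""
    return response_json["choices"][0]["message"]["content"]
```

With a server running, `extract_reply(send_chat_request(build_chat_request("Hello")))` would return the model's answer as a string. Only the standard library is used, so the same client should work against either backend as long as the URL and model name match.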

Tutorial

1 Load the InternLM2.5-7B-Chat model

2 Set model parameters and select precision (float16 or float32)

3 Use the chat or stream_chat interface for conversation or stream generation

4 Deploy the model through LMDeploy or vLLM to achieve local or cloud inference

5 Send a request to the deployed service and read the returned results

6 Post-process the results according to the application scenario
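Steps 1 to 3 and step 6 above can be sketched roughly as follows, using Hugging Face Transformers. This is a minimal sketch rather than an official recipe. It assumes the weights are published under the Hub id `internlm/internlm2_5-7b-chat` and that the model's remote code provides `chat` and `stream_chat` with the call shapes shown. The helpers `check_precision`, `load_model`, `ask`, `stream`, and `postprocess` are hypothetical names introduced for this example.

```python
ALLOWED_PRECISIONS = ("float16", "float32")
MODEL_ID = "internlm/internlm2_5-7b-chat"  # assumed Hugging Face Hub id


def check_precision(name):
    """Step 2: validate the precision name before any weights are loaded."""
    if name not in ALLOWED_PRECISIONS:
        raise ValueError(f"precision must be one of {ALLOWED_PRECISIONS}, got {name!r}")
    return name


def load_model(model_id=MODEL_ID, precision="float16"):
    """Steps 1-2: load the tokenizer and model in the requested precision."""
    # Imported lazily so the other helpers work without torch installed.
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    dtype = getattr(torch, check_precision(precision))
    tokenizer = AutoTokenizer.from_pretrained(model_id, trust_remote_code=True)
    model = AutoModelForCausalLM.from_pretrained(
        model_id, torch_dtype=dtype, trust_remote_code=True
    )
    if torch.cuda.is_available():
        model = model.cuda()
    return tokenizer, model.eval()


def ask(tokenizer, model, prompt, history=None):
    """Step 3: run one conversational turn through the chat interface."""
    # Assumption: `chat` returns a (response, updated_history) pair.
    response, history = model.chat(tokenizer, prompt, history=history or [])
    return response, history


def stream(tokenizer, model, prompt, history=None):
    """Step 3 (streaming): yield only the newly generated text of each update."""
    # Assumption: `stream_chat` yields (cumulative_response, history) pairs.
    seen = 0
    for response, _ in model.stream_chat(tokenizer, prompt, history=history or []):
        yield response[seen:]
        seen = len(response)


def postprocess(text, max_chars=2000):
    """Step 6 (hypothetical): collapse whitespace and trim to a length limit."""
    cleaned = " ".join(text.split())
    return cleaned[:max_chars]
```

A typical call sequence would be `tokenizer, model = load_model(precision="float16")` followed by `reply, history = ask(tokenizer, model, "Hello")` and `postprocess(reply)`. float16 halves memory use compared with float32 and is the usual choice on a GPU, while float32 is the safer option when running on CPU.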