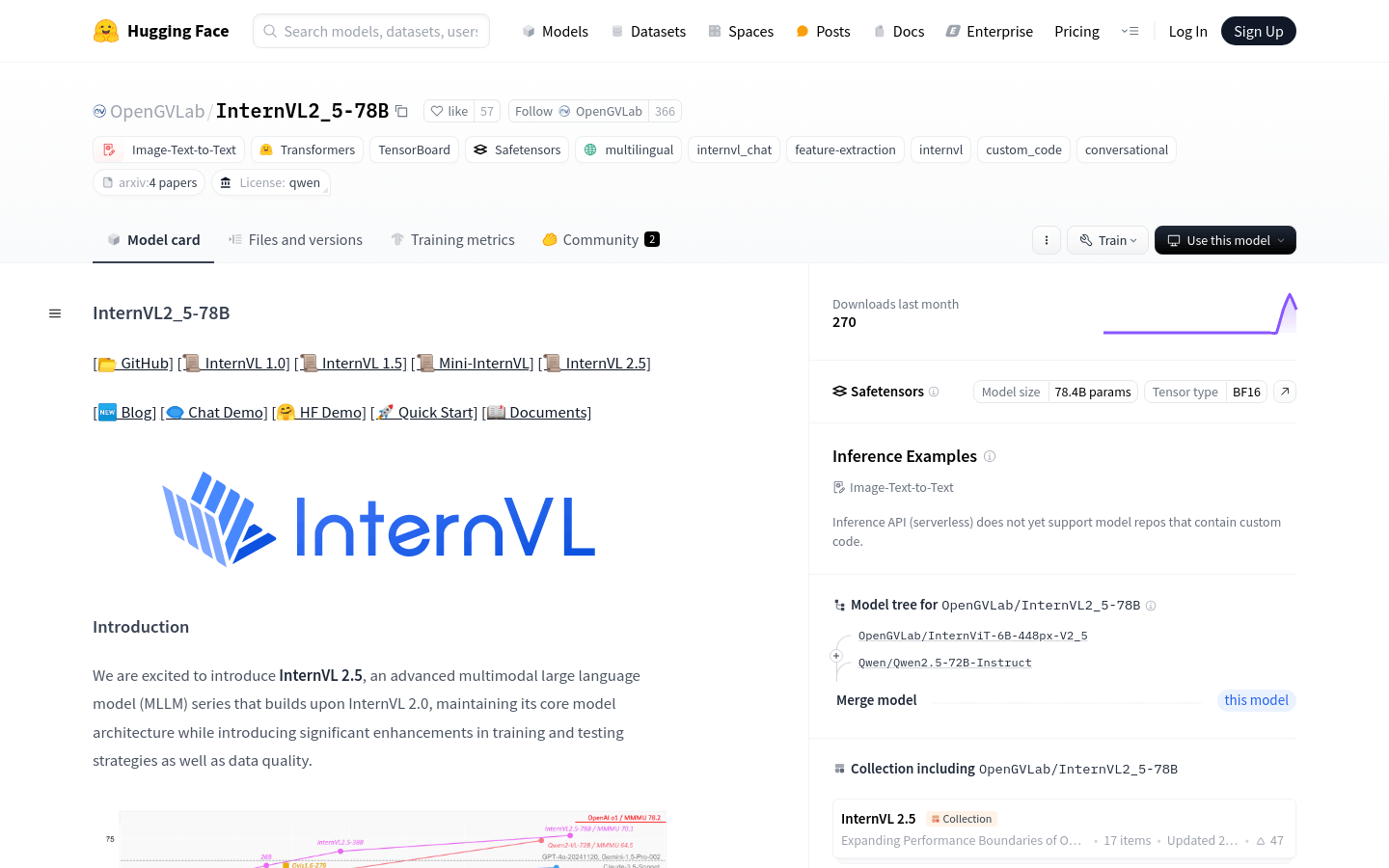

What is InternVL 2.5?

InternVL 2.5 is a series of multimodal large language models (MLLMs) that builds on InternVL 2.0, with significant improvements in training and testing strategies and in data quality. The series is optimized for visual perception and multimodal tasks: it accepts images together with text and generates text (image-to-text), and it also handles text-only prompts (text-to-text). It is well suited to complex tasks that combine visual and linguistic information.

Who Can Use InternVL 2.5?

The target audience includes researchers, developers, and enterprise users, especially those building AI applications that handle both visual and language data. The InternVL2_5-78B model is particularly suited to applications that combine image recognition with natural language processing, owing to its multimodal processing capabilities and its training strategies.

Example Scenarios:

Image Description Generation: Use InternVL2_5-78B to convert image content into textual descriptions.

Multimodal Image Analysis: Analyze and compare different images to identify similarities and differences using InternVL2_5-78B.

Video Understanding: Employ InternVL2_5-78B to process video frames and provide detailed analysis of video content.
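
For the video scenario, a clip usually has to be reduced to a manageable number of frames before it is passed to the model. The sketch below shows one common approach, taking the middle frame of evenly sized segments. It is an illustration only: the function name `sample_frame_indices` and the default of 8 segments are assumptions, not part of InternVL 2.5.

```python
def sample_frame_indices(total_frames, num_segments=8):
    """Return one frame index per segment, taken near each segment's midpoint.

    Hypothetical helper for illustration; not taken from the InternVL code base.
    """
    if total_frames <= 0 or num_segments <= 0:
        return []
    segment_length = total_frames / num_segments
    indices = []
    for i in range(num_segments):
        midpoint = int(segment_length * i + segment_length / 2)
        # Clamp so very short clips never produce an out-of-range index.
        indices.append(min(total_frames - 1, midpoint))
    return indices


if __name__ == "__main__":
    # An 80-frame clip split into 8 segments of 10 frames each.
    print(sample_frame_indices(80, 8))
```

The selected frames can then be treated like a sequence of images. For clips with fewer frames than segments, this sketch repeats some indices rather than failing.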

Key Features:

Extends dynamic high-resolution training to multi-image and video datasets, enhancing performance on multi-image and video tasks.

Utilizes the 'ViT-MLP-LLM' architecture, combining newly pre-trained InternViT with various pre-trained large language models.

Connects the visual encoder to the language model through a randomly initialized MLP projector.

Implements a progressive scaling strategy to optimize alignment between the visual encoder and the large language models.

Uses random JPEG compression to improve robustness against noisy images, and loss reweighting to balance the next-token prediction (NTP) loss across responses of different lengths.

Supports input from multiple images and videos, broadening the model's application in multimodal tasks.
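
The dynamic high-resolution idea listed above can be pictured as splitting an image into a grid of fixed-size tiles whose shape roughly matches the image's aspect ratio. The following is a simplified sketch of that idea, not the project's actual preprocessing code. The 448-pixel tile size, the 12-tile cap, the tie-breaking rule, and the helper names `choose_grid` and `tile_boxes` are all assumptions made for illustration.

```python
def choose_grid(width, height, max_tiles=12, tile_size=448):
    """Pick a (cols, rows) tile grid whose aspect ratio is closest to the image's.

    Illustrative sketch only; the defaults are assumptions.
    """
    aspect = width / height
    area = width * height
    # Every grid with at most max_tiles tiles, smallest grids first.
    grids = sorted(
        ((c, r)
         for c in range(1, max_tiles + 1)
         for r in range(1, max_tiles + 1)
         if c * r <= max_tiles),
        key=lambda g: g[0] * g[1],
    )
    best, best_diff = (1, 1), float("inf")
    for cols, rows in grids:
        diff = abs(aspect - cols / rows)
        if diff < best_diff:
            best, best_diff = (cols, rows), diff
        elif diff == best_diff and area > 0.5 * tile_size * tile_size * cols * rows:
            # On a tie, use the larger grid only if the image has enough pixels for it.
            best = (cols, rows)
    return best


def tile_boxes(cols, rows, tile_size=448):
    """Crop boxes (left, top, right, bottom), row by row, for an image
    already resized to (cols * tile_size, rows * tile_size)."""
    return [
        (x * tile_size, y * tile_size, (x + 1) * tile_size, (y + 1) * tile_size)
        for y in range(rows)
        for x in range(cols)
    ]


if __name__ == "__main__":
    cols, rows = choose_grid(1920, 1080)
    print(cols, rows, len(tile_boxes(cols, rows)))
```

Under these assumptions, a small square image stays as a single tile, while a large widescreen image is split into a wider grid so that more detail survives the resize.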

Getting Started Guide:

1. Visit the Hugging Face website and search for the InternVL2_5-78B model.

2. Download and load the model according to your specific use case.

3. Prepare input data, including images and text, and perform necessary preprocessing.

4. Run inference by passing the processed data to the model, following the provided API documentation.

5. Obtain the model output, which could be a textual description of an image, video content analysis, or results from other multimodal tasks.

6. Process the output as needed, such as displaying, storing, or further analyzing it.

7. Optionally fine-tune the model to better suit specific application requirements.
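
Steps 2 to 5 might look roughly like the sketch below when using the Hugging Face `transformers` library. It assumes the repository ID `OpenGVLab/InternVL2_5-78B`, and it assumes that the model's custom code (loaded with `trust_remote_code=True`) exposes a `chat` method taking a tokenizer, a tensor of preprocessed image tiles, a question, and a generation config. Check the model page to confirm these details before relying on them. The helper names `build_question`, `load_model`, and `describe_image` are hypothetical, and image preprocessing is left to the caller.

```python
MODEL_ID = "OpenGVLab/InternVL2_5-78B"  # assumed Hugging Face repository ID


def build_question(prompt):
    # Assumption: an "<image>" placeholder marks where the image goes in the prompt.
    return "<image>\n" + prompt


def load_model(model_id=MODEL_ID):
    """Download and load the model and tokenizer (step 2).

    Imports are local so this file can be read and tested without the heavy
    dependencies installed. A model of this size needs several large GPUs.
    """
    import torch
    from transformers import AutoModel, AutoTokenizer

    model = AutoModel.from_pretrained(
        model_id,
        torch_dtype=torch.bfloat16,
        low_cpu_mem_usage=True,
        device_map="auto",        # spread layers over available GPUs (needs `accelerate`)
        trust_remote_code=True,   # the model ships its own modeling code
    ).eval()
    tokenizer = AutoTokenizer.from_pretrained(
        model_id, trust_remote_code=True, use_fast=False
    )
    return model, tokenizer


def describe_image(model, tokenizer, pixel_values,
                   prompt="Describe this image in detail."):
    """Run inference on preprocessed image tiles and return the text (steps 4 and 5).

    Assumption: `model.chat(...)` exists with this argument order.
    """
    generation_config = dict(max_new_tokens=512, do_sample=False)
    return model.chat(tokenizer, pixel_values, build_question(prompt),
                      generation_config)
```

A call would then be `model, tokenizer = load_model()` followed by `describe_image(model, tokenizer, pixel_values)`, where `pixel_values` is the preprocessed image tensor prepared in step 3.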