Llama-3 70B Instruct Gradient 1048k is a long-context language model developed by the Gradient AI team. By extending the context length of Llama-3 70B Instruct to more than 1048K tokens, it demonstrates that a state-of-the-art (SOTA) language model can learn to process long text after appropriate adjustment. The model was trained with NTK-aware interpolation and RingAttention, using the EasyContext Blockwise RingAttention library to train efficiently on high-performance computing clusters. It is suited to both commercial and research use, especially in scenarios that require long text processing and generation.

Target audience:

Business intelligence assistants that need to handle large amounts of text and complex conversations.

Researchers running experiments and training models in the field of natural language processing.

Developers building customized AI models or agents to support business-critical operations.

Example usage scenarios:

Providing customer service support as a chatbot.

Generating creative copy and stories for content creation.

Assisting language learning and text analysis in education.

Product Features:

Supports long text generation, with the context length extended to 1048K tokens.

Built on the Meta Llama 3 family of large language models and optimized for dialogue use cases.

Trained using NTK-aware interpolation and RingAttention.

Trained on Crusoe Energy's high-performance L40S cluster to support long text processing.

Fine-tuned on long text generated through data augmentation and on chat datasets.

Carefully tuned for safety and performance to reduce false refusals and improve the user experience.
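To make the NTK-aware interpolation mentioned above more concrete, here is a minimal sketch of the commonly cited NTK-aware rule for rescaling the RoPE base (theta). The numeric values are assumptions for illustration: a base theta of 500,000, a head dimension of 128, and an original context of 8,192 tokens are the usual Llama 3 settings, but this page does not state them, and the result is not the theta the Gradient team actually used. The function names are hypothetical.

```python
def ntk_scaled_rope_theta(base_theta: float, scale: float, head_dim: int) -> float:
    """NTK-aware rule: theta' = theta * scale ** (d / (d - 2))."""
    return base_theta * scale ** (head_dim / (head_dim - 2))


def lowest_inv_freq(theta: float, head_dim: int) -> float:
    """Lowest RoPE frequency, theta ** (-2i / d) at i = d/2 - 1."""
    return theta ** (-(head_dim - 2) / head_dim)


# Assumed values, not taken from this page.
BASE_THETA = 500_000.0
HEAD_DIM = 128
ORIGINAL_CONTEXT = 8_192
TARGET_CONTEXT = 1_048_576

scale = TARGET_CONTEXT / ORIGINAL_CONTEXT
scaled_theta = ntk_scaled_rope_theta(BASE_THETA, scale, HEAD_DIM)

# The lowest frequency shrinks by exactly 1/scale, while the highest
# frequency (i = 0) stays at 1, so nearby positions remain distinguishable.
slowdown = lowest_inv_freq(scaled_theta, HEAD_DIM) / lowest_inv_freq(BASE_THETA, HEAD_DIM)
print(f"scale = {scale:.0f}, scaled theta = {scaled_theta:.3e}, slowdown = {slowdown:.6f}")
```

In practice a value computed this way would serve only as a starting point, since the best theta for a given context length is typically found empirically.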

How to use:

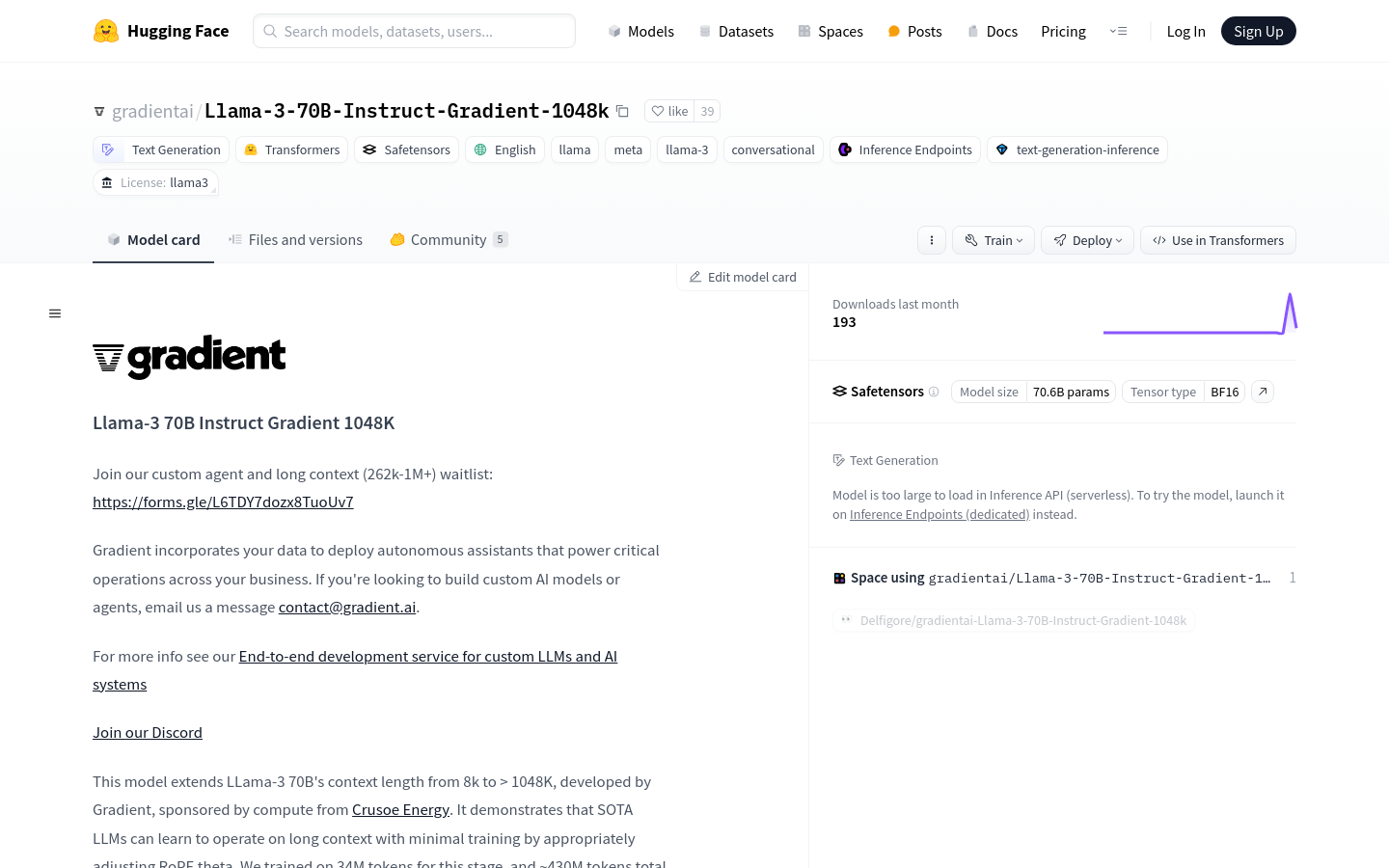

Step 1: Open the Llama-3 70B Instruct Gradient 1048k page in the Hugging Face model hub.

Step 2: Choose either the transformers library or the original llama3 codebase to load the model, depending on your needs.

Step 3: Configure the model parameters and load the model using the provided code snippet.

Step 4: Prepare the input text or dialogue messages and process them with the model's tokenizer.

Step 5: Set the text generation parameters, such as the maximum number of new tokens and the temperature.

Step 6: Call the model to generate text or perform a specific task.

Step 7: Post-process or display the output as needed.
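The steps above can be sketched with the transformers library roughly as follows. This is an illustrative outline, not the official snippet from the model page: the repository id, the sampling values, and the helper names `build_messages` and `generate_reply` are assumptions. Loading a 70B model also requires multiple high-memory GPUs, so the heavy imports are kept inside the function and the final call is left commented out.

```python
# Assumed Hugging Face repository id; check the model page for the exact name.
MODEL_ID = "gradientai/Llama-3-70B-Instruct-Gradient-1048k"

# Step 5: generation parameters (illustrative values, not recommendations).
GENERATION_KWARGS = {
    "max_new_tokens": 256,
    "do_sample": True,
    "temperature": 0.6,
    "top_p": 0.9,
}


def build_messages(system_prompt: str, user_prompt: str) -> list:
    """Step 4: prepare dialogue messages in the chat format."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_prompt},
    ]


def generate_reply(messages: list, model_id: str = MODEL_ID) -> str:
    """Steps 3 to 7: load the model, tokenize, generate, and decode."""
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(
        model_id, torch_dtype=torch.bfloat16, device_map="auto"
    )

    # Apply the model's chat template and move the tensors to the model device.
    inputs = tokenizer.apply_chat_template(
        messages, add_generation_prompt=True, return_tensors="pt", return_dict=True
    ).to(model.device)

    # Llama 3 chat models end a turn with <|eot_id|> as well as the EOS token.
    terminators = [tokenizer.eos_token_id, tokenizer.convert_tokens_to_ids("<|eot_id|>")]
    output_ids = model.generate(**inputs, eos_token_id=terminators, **GENERATION_KWARGS)

    # Keep only the newly generated tokens, dropping the prompt.
    prompt_length = inputs["input_ids"].shape[-1]
    return tokenizer.decode(output_ids[0][prompt_length:], skip_special_tokens=True)


# Example call (downloads the full model weights, so it is commented out):
# print(generate_reply(build_messages("You are a helpful assistant.", "Summarize this report.")))
```

Slicing off the prompt tokens before decoding means the function returns only the model's reply, which is usually what a chatbot or downstream processing step needs.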