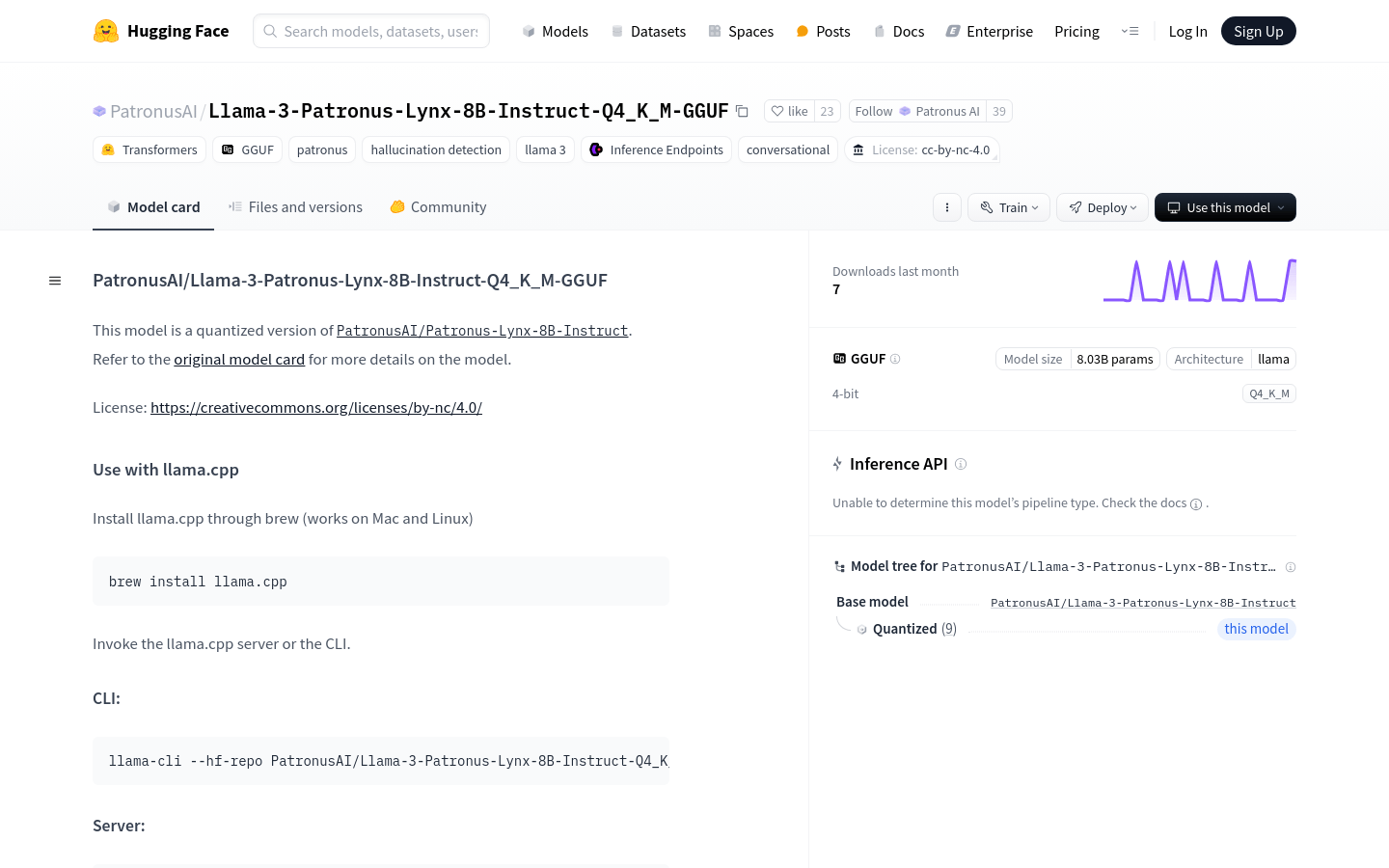

This model is a large language model quantized to 4 bits to reduce its storage and compute requirements. It has 8.03B parameters, is intended for natural language processing tasks, and is free to use for non-commercial purposes. It suits high-performance language applications in resource-constrained environments.
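To make the storage savings concrete, the following sketch estimates the raw weight storage of an 8.03B-parameter model at different bit widths. It counts only the weights themselves. Actual 4-bit model files also store per-block scale factors and may keep some tensors at higher precision, so treat these figures as rough lower bounds. The function names are illustrative.

```python
# Rough weight-storage estimate for an 8.03B-parameter model.
# Assumption: only the weights are counted; real quantized files also store
# per-block scale factors and may keep some tensors at higher precision,
# so actual files are somewhat larger than these figures.

N_PARAMS = 8.03e9  # parameter count stated for this model


def weight_bytes(n_params: float, bits_per_weight: float) -> float:
    """Return the bytes needed to store n_params weights at the given bit width."""
    return n_params * bits_per_weight / 8


def to_gb(n_bytes: float) -> float:
    """Convert bytes to decimal gigabytes (1 GB = 1e9 bytes)."""
    return n_bytes / 1e9


if __name__ == "__main__":
    for label, bits in [("16-bit", 16), ("8-bit", 8), ("4-bit", 4)]:
        print(f"{label:>6}: {to_gb(weight_bytes(N_PARAMS, bits)):.1f} GB")
```

At 4 bits the weights need roughly 4 GB, about a quarter of the roughly 16 GB needed at 16 bits.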

Target audience:

The target audience is researchers, developers, and enterprises. Researchers can use it to explore natural language processing research; developers can use it to build related applications; enterprises can deploy it on internal, resource-constrained servers for text processing and similar tasks.

Example usage scenarios:

1. Generating replies to customer inquiries in an intelligent customer service system.

2. Assisting with writing creative texts, such as articles and stories, on content creation platforms.

3. Automating document summarization and question answering in enterprise knowledge management systems.
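As a rough illustration of the scenarios above, the sketch below sends a chat request to llama.cpp's `llama-server` through its OpenAI-compatible `/v1/chat/completions` endpoint. It assumes a server is already running locally on the default port 8080. The scenario names and system prompts are hypothetical examples, not part of the model or of llama.cpp.

```python
import json
import urllib.request

# Assumption: llama-server (from llama.cpp) is running locally on its default
# port and exposes the OpenAI-compatible /v1/chat/completions endpoint.
BASE_URL = "http://127.0.0.1:8080"

# Hypothetical system prompts, one per usage scenario.
SCENARIO_PROMPTS = {
    "customer_service": "You are a polite customer service assistant. Answer briefly.",
    "creative_writing": "You are a writing assistant that helps draft articles and stories.",
    "summarization": "Summarize the user's document in three bullet points.",
}


def build_chat_request(scenario: str, user_text: str, max_tokens: int = 256) -> dict:
    """Build an OpenAI-style chat request body for one of the scenarios."""
    return {
        "messages": [
            {"role": "system", "content": SCENARIO_PROMPTS[scenario]},
            {"role": "user", "content": user_text},
        ],
        "max_tokens": max_tokens,
    }


def chat(scenario: str, user_text: str, base_url: str = BASE_URL) -> str:
    """Send the request to the server and return the assistant's reply text."""
    body = json.dumps(build_chat_request(scenario, user_text)).encode("utf-8")
    req = urllib.request.Request(
        f"{base_url}/v1/chat/completions",
        data=body,
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req) as resp:
        data = json.load(resp)
    # OpenAI-style responses put the reply in choices[0].message.content.
    return data["choices"][0]["message"]["content"]
```

With a server running, `chat("customer_service", "How do I reset my password?")` returns the model's reply as a string. Keeping request construction separate from the HTTP call makes the payload easy to inspect or test without a server.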

Product Features:

1. Supports command-line interface (CLI) inference through llama.cpp, which is convenient and fast.

2. Can be served for inference through llama.cpp's server, which simplifies service deployment.

3. With 8.03B parameters, it has strong language understanding and generation capabilities that meet common text processing needs.

4. Uses 4-bit quantization to reduce storage and compute requirements and save resources.

5. Suitable for natural language processing tasks such as text generation and question answering, with a wide range of application scenarios.

6. Built on a specific model architecture, so it can be run with tools that support that architecture, which makes it easy to use.

7. Free use and sharing are allowed for non-commercial purposes, and the usage restrictions are clearly stated.
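The following sketch illustrates the basic idea behind 4-bit block quantization: weights are split into small blocks, each block stores one scale factor, and each weight is stored as a 4-bit integer. This is a simplified symmetric scheme chosen for illustration. llama.cpp's actual 4-bit formats differ in detail, and this listing does not say which quantization variant the model uses. The block size of 32 and the 16-bit scale are assumptions.

```python
from typing import List, Tuple

BLOCK_SIZE = 32  # weights per block (assumption, chosen for illustration)


def quantize_block(weights: List[float]) -> Tuple[float, List[int]]:
    """Symmetric 4-bit quantization of one block (simplified scheme).

    Each weight maps to an integer in [-8, 7] (16 levels = 4 bits),
    and the whole block shares one floating-point scale.
    """
    amax = max(abs(w) for w in weights)
    scale = amax / 7 if amax > 0 else 1.0
    q = [max(-8, min(7, round(w / scale))) for w in weights]
    return scale, q


def dequantize_block(scale: float, q: List[int]) -> List[float]:
    """Recover approximate weights from a block's scale and 4-bit codes."""
    return [scale * v for v in q]


def quantize(weights: List[float], block_size: int = BLOCK_SIZE) -> List[Tuple[float, List[int]]]:
    """Quantize a flat list of weights block by block."""
    return [
        quantize_block(weights[start:start + block_size])
        for start in range(0, len(weights), block_size)
    ]


def bits_per_weight(block_size: int = BLOCK_SIZE, scale_bits: int = 16) -> float:
    """Effective storage cost per weight, including the per-block scale."""
    return (block_size * 4 + scale_bits) / block_size
```

With 32 weights per block and a 16-bit scale, the effective cost is (32 × 4 + 16) / 32 = 4.5 bits per weight, which is one reason quantized files end up slightly larger than a pure 4-bit estimate. Rounding each weight to the nearest level keeps the per-weight error within half a scale step.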

How to use:

1. Install llama.cpp with Homebrew (`brew install llama.cpp`); this works on macOS and Linux.

2. Run inference from the command line with `llama-cli`, passing arguments in the expected format.

3. Start `llama-server` to provide an inference service, configuring its parameters as needed.

4. You can also follow the usage steps in the llama.cpp repository directly.

5. Clone the llama.cpp repository, change into its directory, and build it as required.

6. Run inference with the built binaries.
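Once `llama-server` is running, other programs can call it over HTTP. The sketch below uses only the Python standard library to check the server's `/health` endpoint and send a prompt to its native `/completion` endpoint. It assumes a local server on the default address `http://127.0.0.1:8080`, started for example with `llama-server -m /path/to/model.gguf -c 2048`; the model path is a placeholder, and the helper names are hypothetical.

```python
import json
import urllib.request

# Assumption: llama-server was started locally, e.g.
#   llama-server -m /path/to/model.gguf -c 2048
# and listens on its default address.
BASE_URL = "http://127.0.0.1:8080"


def build_completion_request(prompt: str, n_predict: int = 128,
                             temperature: float = 0.7) -> dict:
    """Build the JSON body for llama-server's /completion endpoint."""
    return {"prompt": prompt, "n_predict": n_predict, "temperature": temperature}


def server_is_healthy(base_url: str = BASE_URL, timeout: float = 2.0) -> bool:
    """Return True if /health answers with HTTP 200, False on any network error."""
    try:
        with urllib.request.urlopen(f"{base_url}/health", timeout=timeout) as resp:
            return resp.status == 200
    except OSError:  # URLError, HTTPError and connection errors all derive from OSError
        return False


def complete(prompt: str, n_predict: int = 128, base_url: str = BASE_URL) -> str:
    """Send a prompt to /completion and return the generated text."""
    body = json.dumps(build_completion_request(prompt, n_predict)).encode("utf-8")
    req = urllib.request.Request(
        f"{base_url}/completion",
        data=body,
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req) as resp:
        # The generated text is returned in the "content" field.
        return json.load(resp)["content"]
```

For example, `if server_is_healthy(): print(complete("Explain 4-bit quantization in one sentence."))`. Checking `/health` first avoids sending requests while the server is still loading the model.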