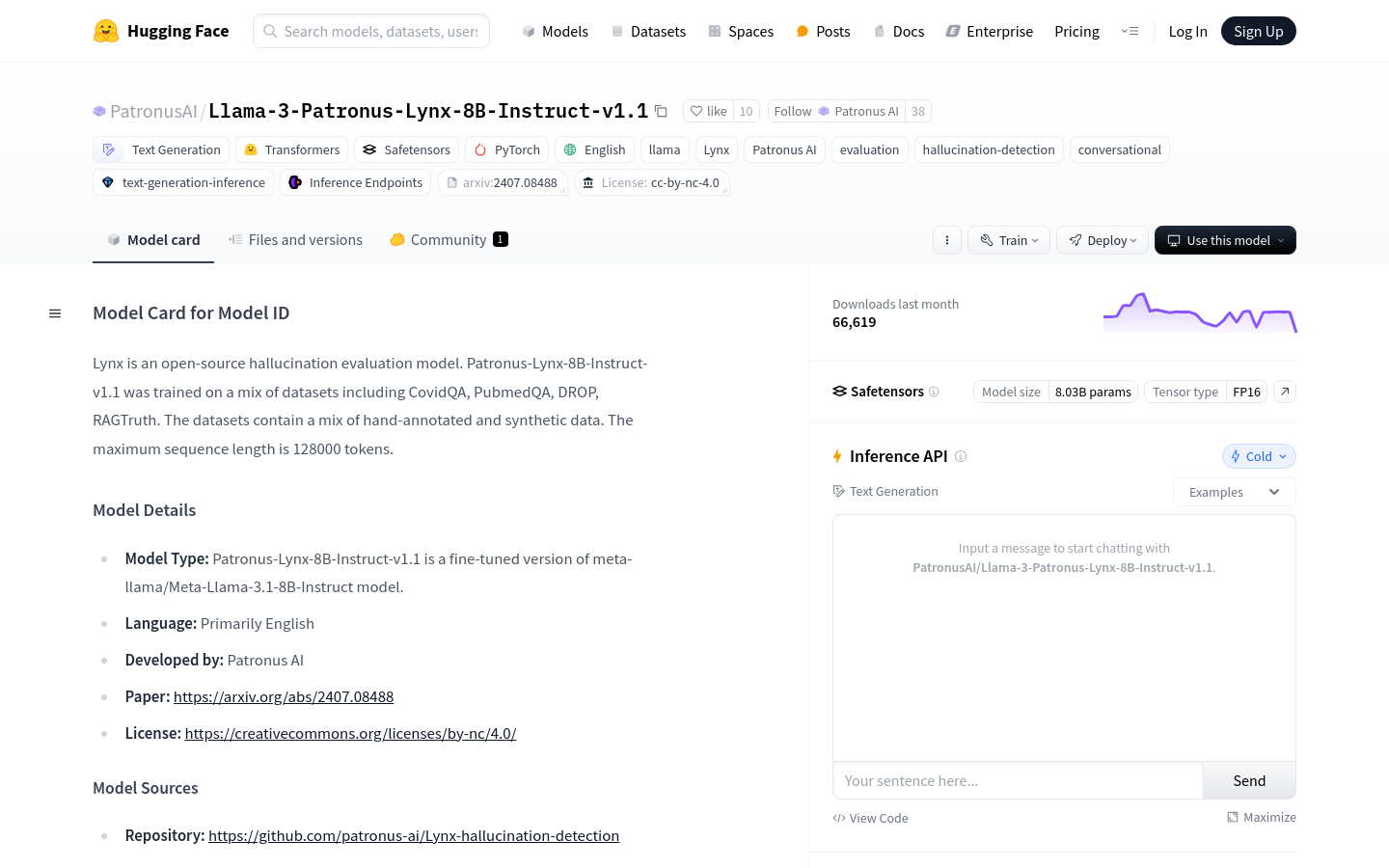

Patronus-Lynx-8B-Instruct-v1.1 is a fine-tuned version of meta-llama/Meta-Llama-3.1-8B-Instruct, used mainly to detect hallucinations in RAG settings. It was trained on a mix of datasets, including CovidQA, PubmedQA, DROP, and RAGTruth, that combines hand-annotated and synthetic data. Given a document, a question, and an answer, the model evaluates whether the answer is faithful to the document: the answer must neither introduce information beyond the document nor contradict it.

Target audience:

Researchers, developers, and businesses that need a reliable model for checking whether generated text is faithful to its source document. The model suits applications such as natural language processing, text summarization, question-answering systems, and chatbots.

Example usage scenarios:

Researchers use the model to evaluate the accuracy of answers drawn from medical literature.

Developers integrate the model into a Q&A system to check that answers stay grounded in the source documents.

Enterprises use the model to check the consistency of information in financial reports.

Product Features:

Hallucination detection: Assess whether an answer is faithful to a given document.

Text generation: Generate answers based on user input questions and documents.

Chat format training: The model is trained in a chat format, suitable for conversational applications.

Multi-dataset training: including CovidQA, PubmedQA, DROP, RAGTruth, etc.

Long sequence processing: Supports sequence lengths of up to 128,000 tokens.

License: released under cc-by-nc-4.0, which permits use and modification for non-commercial purposes with attribution.

Benchmark performance: Reports strong results on hallucination benchmarks such as HaluEval and RAGTruth.

How to use:

1. Prepare input data for questions, documents, and answers.

2. Organize input data using the recommended prompt format of the model.

3. Call Hugging Face's pipeline interface and pass in the model name and configuration parameters.

4. Pass the prepared prompt to the pipeline as a user message.

5. Read the model output, which contains a 'PASS' or 'FAIL' score and the reasoning behind it.

6. Analyze the output and decide, from the score and reasoning, whether the answer is faithful to the document.

7. Adjust model parameters as needed to optimize performance.
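
The steps above can be sketched in Python roughly as follows. This is a minimal sketch under stated assumptions, not the official usage: the repository id `PatronusAI/Llama-3-Patronus-Lynx-8B-Instruct-v1.1`, the wording of `PROMPT_TEMPLATE`, the JSON keys `REASONING` and `SCORE`, and the `max_new_tokens=600` setting are illustrative and should be checked against the model card. The helper names `build_messages`, `parse_verdict`, and `evaluate` are hypothetical.

```python
import json
import re

# Assumed Hugging Face repository id; verify it on the model card.
MODEL_NAME = "PatronusAI/Llama-3-Patronus-Lynx-8B-Instruct-v1.1"

# Illustrative prompt wording (an assumption). Use the model card's
# recommended prompt format for real evaluations.
PROMPT_TEMPLATE = """Given the following QUESTION, DOCUMENT and ANSWER, decide whether the ANSWER is faithful to the DOCUMENT. The ANSWER must not add information beyond the DOCUMENT and must not contradict it. Answer "PASS" if it is faithful and "FAIL" if it is not. Show your reasoning.

--
QUESTION:
{question}

--
DOCUMENT:
{document}

--
ANSWER:
{answer}

--

Your output should be in JSON FORMAT with the keys "REASONING" and "SCORE":
{{"REASONING": <your reasoning as bullet points>, "SCORE": <your final score>}}
"""


def build_messages(question, document, answer):
    """Steps 1-2: organize the inputs into a single user message."""
    prompt = PROMPT_TEMPLATE.format(
        question=question, document=document, answer=answer
    )
    return [{"role": "user", "content": prompt}]


def parse_verdict(generated_text):
    """Steps 5-6: extract (score, reasoning) from the model output.

    Tries JSON first, then falls back to searching for PASS or FAIL.
    Returns a score of None when no verdict can be found.
    """
    text = generated_text.strip()
    try:
        data = json.loads(text)
    except json.JSONDecodeError:
        data = None
    if isinstance(data, dict):
        score = str(data.get("SCORE", "")).strip().upper()
        if score in {"PASS", "FAIL"}:
            return score, data.get("REASONING")
    match = re.search(r"\b(PASS|FAIL)\b", text)
    return (match.group(1) if match else None), text


def evaluate(question, document, answer, max_new_tokens=600):
    """Steps 3-4: run the text-generation pipeline on one example.

    Downloads the model weights on first use.
    """
    from transformers import pipeline  # imported lazily; heavy dependency

    pipe = pipeline(
        "text-generation",
        model=MODEL_NAME,
        max_new_tokens=max_new_tokens,
        return_full_text=False,
    )
    result = pipe(build_messages(question, document, answer))
    return parse_verdict(result[0]["generated_text"])
```

Calling `evaluate(question, document, answer)` returns a `(score, reasoning)` pair. For step 7, `max_new_tokens` is the most obvious parameter to adjust: a larger value leaves more room for the reasoning, at the cost of slower generation.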