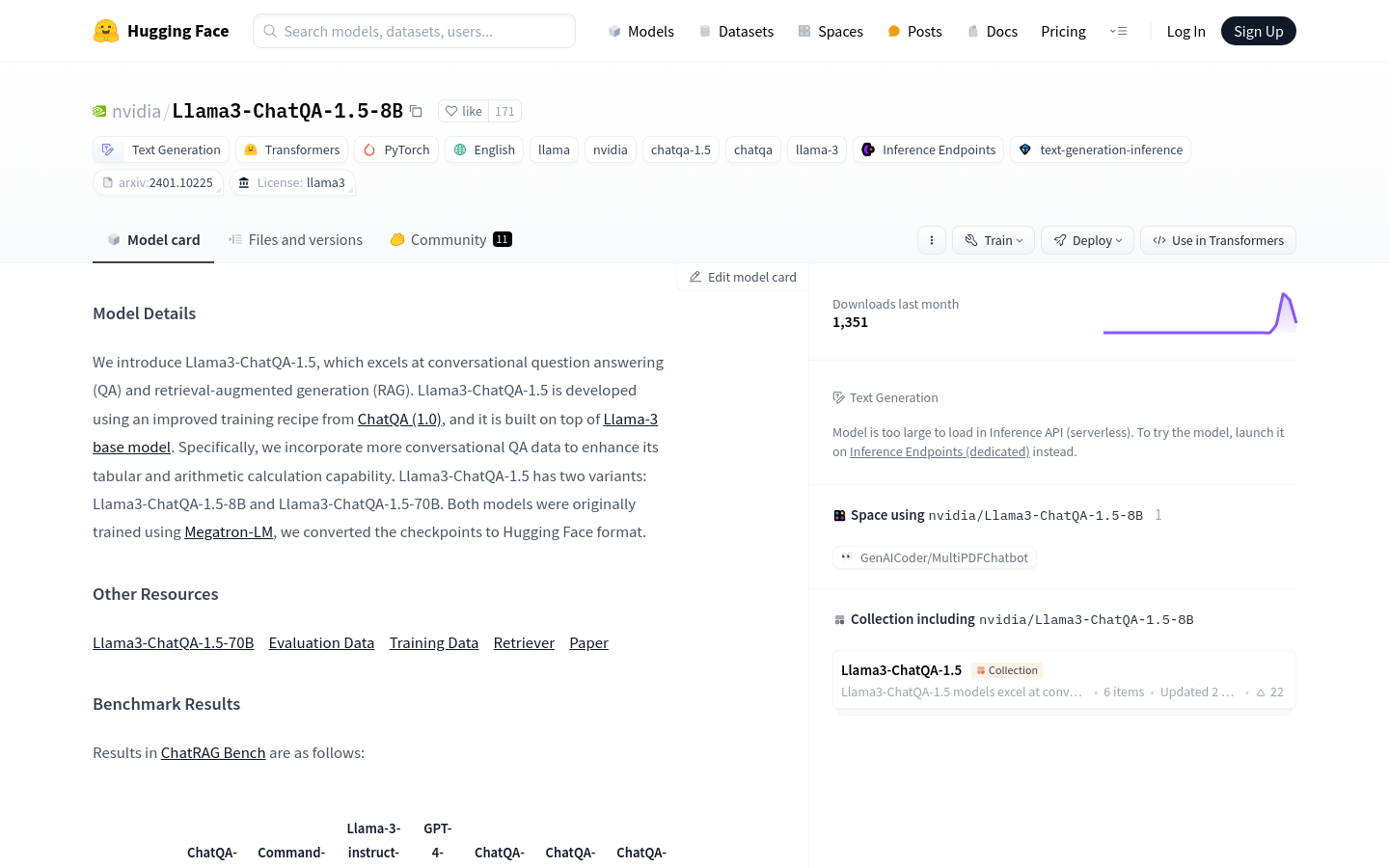

Llama3-ChatQA-1.5-8B is a conversational question answering (QA) and retrieval-augmented generation (RAG) model developed by NVIDIA. It builds on ChatQA (1.0), adding more conversational QA data and strengthening its tabular and arithmetic calculation capabilities. The release comes in two sizes, Llama3-ChatQA-1.5-8B and Llama3-ChatQA-1.5-70B, both trained with Megatron-LM and converted to Hugging Face format. The model performs well on the ChatRAG Bench benchmark and suits scenarios that require complex dialogue understanding and generation.

Target audience

Developers: Integrate the model quickly into chatbots and conversational systems.

Enterprise users: Deploy it in customer service and internal support systems to improve automation and efficiency.

Researchers: Use it for academic research on conversational systems and natural language processing.

Educators: Integrate it into educational software to provide interactive learning experiences.

Example use cases

Customer service chatbot: Automatically answers customer inquiries and improves service efficiency.

Intelligent personal assistant: Helps users manage daily tasks such as scheduling and information retrieval.

Online education platform: Provides personalized learning and interactive teaching through dialogue.

Product features

Conversational question answering (QA): Understands and answers complex, multi-turn conversational questions.

Retrieval-augmented generation (RAG): Combines retrieved information with the conversation when generating text.

Enhanced tabular and arithmetic capabilities: Specifically optimized for processing tabular data and performing arithmetic calculations.

Multi-language support: Supports dialogue understanding and generation in multiple languages such as English.

Context-based answers: Uses the supplied document context to give more accurate answers.

Large-scale training: Trained with Megatron-LM, NVIDIA's framework for training large language models.

Easy to integrate: Provided in Hugging Face format, so developers can load it into their applications with standard tooling.

Tutorial

1. Import the necessary libraries such as AutoTokenizer and AutoModelForCausalLM.

2. Initialize the tokenizer and model using the model ID.

3. Prepare conversation messages and document context.

4. Build the input using the provided prompt format.

5. Pass the constructed input to the model for generation.

6. Get the output generated by the model and decode it.

7. If the document context is not known in advance, run retrieval to obtain relevant passages.

8. Run text generation again with the retrieved passages as the context.
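
The steps above can be sketched in Python roughly as follows. This is a minimal illustration, not NVIDIA's reference code: the repository ID `nvidia/Llama3-ChatQA-1.5-8B`, the system and instruction strings, and the `System / context / User / Assistant` layout are assumptions modeled on the published prompt format and should be checked against the model card. The helper names `build_prompt`, `retrieve`, and `generate_answer` are hypothetical, and `retrieve` is a toy word-overlap ranker standing in for a real retriever. Loading with `device_map="auto"` also assumes the `accelerate` package is installed.

```python
# Assumed prompt pieces; verify the exact wording against the model card.
SYSTEM = (
    "System: This is a chat between a user and an artificial intelligence assistant. "
    "The assistant gives helpful, detailed, and polite answers to the user's questions "
    "based on the context. The assistant should also indicate when the answer cannot be "
    "found in the context."
)
INSTRUCTION = "Please give a full and complete answer for the question."


def build_prompt(messages, context):
    """Steps 3-4: join the system text, document context, and chat turns."""
    turns = []
    first_user_seen = False
    for message in messages:
        if message["role"] == "user":
            content = message["content"]
            if not first_user_seen:
                # The instruction is attached to the first user turn only.
                content = INSTRUCTION + " " + content
                first_user_seen = True
            turns.append("User: " + content)
        else:
            turns.append("Assistant: " + message["content"])
    conversation = "\n\n".join(turns) + "\n\nAssistant:"
    return SYSTEM + "\n\n" + context + "\n\n" + conversation


def retrieve(query, passages, top_k=1):
    """Step 7 stand-in: rank passages by word overlap with the query (toy retriever)."""
    query_words = set(query.lower().split())
    ranked = sorted(
        passages,
        key=lambda p: len(query_words & set(p.lower().split())),
        reverse=True,
    )
    return ranked[:top_k]


def generate_answer(prompt, model_id="nvidia/Llama3-ChatQA-1.5-8B", max_new_tokens=128):
    """Steps 1-2 and 5-6: load the tokenizer and model, generate, and decode."""
    # Imported lazily so the prompt helpers work without these packages.
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(
        model_id, torch_dtype=torch.float16, device_map="auto"
    )
    inputs = tokenizer(prompt, return_tensors="pt").to(model.device)
    # Stop on either the regular EOS token or the Llama 3 end-of-turn token.
    terminators = [tokenizer.eos_token_id, tokenizer.convert_tokens_to_ids("<|eot_id|>")]
    outputs = model.generate(
        input_ids=inputs.input_ids,
        attention_mask=inputs.attention_mask,
        max_new_tokens=max_new_tokens,
        eos_token_id=terminators,
    )
    # Keep only the newly generated tokens, dropping the echoed prompt.
    new_tokens = outputs[0][inputs.input_ids.shape[-1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)


# Example usage (downloads the model weights, so it is left commented out):
# passages = ["Net income rose in Q1.", "The office moved in March."]
# question = "How did net income change?"
# context = "\n\n".join(retrieve(question, passages))
# prompt = build_prompt([{"role": "user", "content": question}], context)
# print(generate_answer(prompt))
```

Keeping prompt construction separate from model loading makes it possible to inspect the exact text sent to the model before downloading any weights. For steps 7 and 8, only the `context` argument changes between runs.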