What is Llasa-1B?

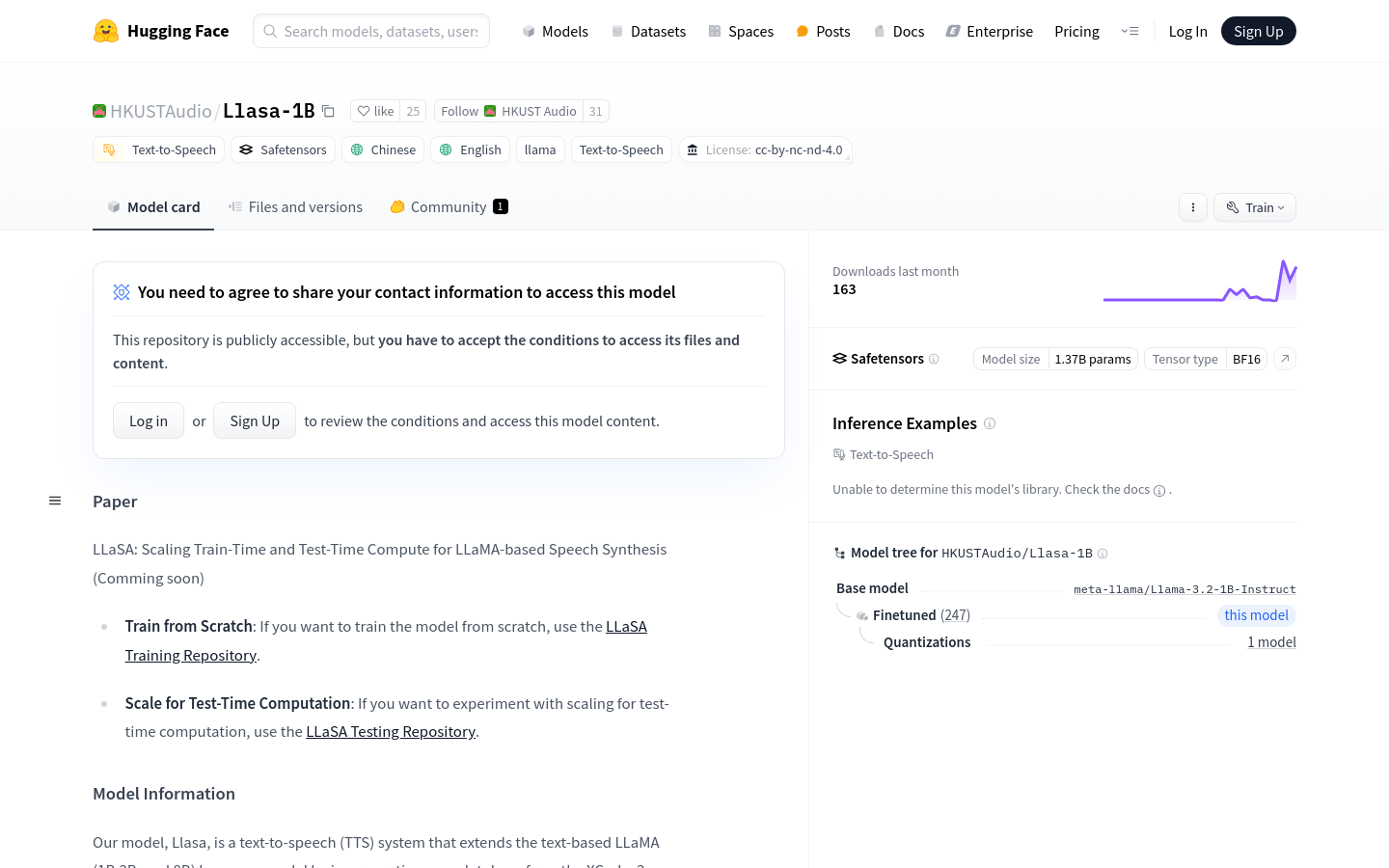

Llasa-1B is a text-to-speech model developed by the Audio Lab at the Hong Kong University of Science and Technology. It builds on the LLaMA architecture and represents speech as discrete tokens from the XCodec2 codebook: the model generates a sequence of these speech tokens, and XCodec2 decodes them into natural-sounding audio. The model was trained on 250,000 hours of Chinese and English speech data. It can generate speech from plain text alone or use a given voice sample as a prompt. Its high-quality bilingual output suits applications such as audiobooks and voice assistants.
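Because speech lives inside the language model's vocabulary as codec tokens, converting between XCodec2 code indices and the token strings the model reads and writes is plain string handling. The sketch below assumes the `<|s_N|>` token format used in the example on the Llasa-1B model card; `ids_to_speech_tokens` and `extract_speech_ids` are illustrative helper names, not part of any library.

```python
import re

# Assumed format of a speech token in the Llasa tokenizer: "<|s_<index>|>",
# where <index> is an XCodec2 codebook entry (for example "<|s_23456|>").
SPEECH_TOKEN = re.compile(r"^<\|s_(\d+)\|>$")


def ids_to_speech_tokens(speech_ids):
    """Map XCodec2 code indices to speech-token strings."""
    return [f"<|s_{i}|>" for i in speech_ids]


def extract_speech_ids(token_strings):
    """Map generated token strings back to XCodec2 code indices.

    Tokens that do not match the speech-token pattern are skipped.
    """
    ids = []
    for tok in token_strings:
        match = SPEECH_TOKEN.match(tok)
        if match:
            ids.append(int(match.group(1)))
    return ids


tokens = ids_to_speech_tokens([23456, 7, 512])
print(tokens)                      # ['<|s_23456|>', '<|s_7|>', '<|s_512|>']
print(extract_speech_ids(tokens))  # [23456, 7, 512]
```

The second helper is what turns the model's generated tokens into the integer codes that XCodec2 can decode into a waveform.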

Who Can Benefit from Llasa-1B?

The model is well suited to developers and researchers who need high-quality speech synthesis, for example when building voice assistants, audiobook platforms, or educational software.

Example Usage Scenarios

Generate natural-sounding Chinese and English voice content for an audiobook app.

Provide high-quality speech synthesis for an intelligent voice assistant.

Read text aloud in educational software to aid learning.

Model Features

Supports text-to-speech synthesis in Chinese and English

Can use a voice prompt (a reference recording and its transcript) so that the generated speech matches a given voice

Built on the LLaMA architecture, with strong language understanding capabilities

Trained on 250,000 hours of speech data for high-quality output

Provides open-source code and model files for easy use and extension
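Voice prompting is controlled by how the input is formatted. The following sketch builds the chat messages for both plain and voice-prompted synthesis. It assumes the special tokens (`<|TEXT_UNDERSTANDING_START|>`, `<|TEXT_UNDERSTANDING_END|>`, `<|SPEECH_GENERATION_START|>`, `<|s_N|>`) and the "Convert the text to speech:" instruction shown in the example on the Llasa-1B model card; `build_chat` and `speech_prefix` are hypothetical helper names.

```python
def speech_prefix(speech_ids):
    """Render XCodec2 code indices as one string of speech tokens (assumed format)."""
    return "".join(f"<|s_{i}|>" for i in speech_ids)


def build_chat(target_text, prompt_text="", prompt_speech_ids=()):
    """Build chat messages for plain or voice-prompted text-to-speech.

    Without a prompt, the assistant turn holds only the generation-start marker.
    With a voice prompt, the prompt's transcript is prepended to the target
    text and the prompt's speech tokens are pre-filled in the assistant turn,
    so the model continues speaking in that voice.
    """
    text = prompt_text + target_text
    formatted = f"<|TEXT_UNDERSTANDING_START|>{text}<|TEXT_UNDERSTANDING_END|>"
    return [
        {"role": "user", "content": "Convert the text to speech:" + formatted},
        {
            "role": "assistant",
            "content": "<|SPEECH_GENERATION_START|>" + speech_prefix(prompt_speech_ids),
        },
    ]


plain = build_chat("Hello, world.")
cloned = build_chat("Nice to meet you.", prompt_text="This is my voice. ",
                    prompt_speech_ids=[11, 42])
print(plain[1]["content"])   # <|SPEECH_GENERATION_START|>
print(cloned[1]["content"])  # <|SPEECH_GENERATION_START|><|s_11|><|s_42|>
```

In the model card's example, a message list like this is passed to the tokenizer's `apply_chat_template` with `continue_final_message=True`, so that generation continues the open assistant turn instead of starting a new one. The `prompt_speech_ids` would come from encoding the reference recording with XCodec2.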

Step-by-Step Guide to Using Llasa-1B

1. Install version 0.1.3 of the XCodec2 library (pip install xcodec2==0.1.3).

2. Use the transformers library (AutoTokenizer and AutoModelForCausalLM) to load the Llasa-1B tokenizer and model from the HKUST-Audio/Llasa-1B checkpoint.

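3. Move the model, and the XCodec2 codec model used for decoding, to a GPU for faster processing. The tokenizer runs on the CPU; only the token IDs it produces need to be moved to the GPU.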

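4. Format the input text as the model expects: wrap it in <|TEXT_UNDERSTANDING_START|> and <|TEXT_UNDERSTANDING_END|>, and place it in a chat-template prompt whose assistant turn begins with <|SPEECH_GENERATION_START|>.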

5. Generate speech tokens with the model, convert them back to XCodec2 code indices, and decode those indices into an audio waveform with XCodec2.

6. Save the generated speech as a WAV file for playback or further processing.
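The model card's example writes the decoded waveform with soundfile at a 16 kHz sample rate. As a dependency-free alternative, the sketch below writes mono float samples to a 16-bit PCM WAV file using only Python's standard library. It assumes the waveform has already been converted to a sequence of floats in [-1.0, 1.0] (for example from the decoded tensor via `.cpu().numpy()`); `save_wav` is a hypothetical helper name, and the 16 kHz rate is taken from that example.

```python
import array
import math
import sys
import wave

SAMPLE_RATE = 16_000  # assumed XCodec2 output rate, as in the model card example


def save_wav(path, samples, sample_rate=SAMPLE_RATE):
    """Write mono float samples in [-1.0, 1.0] as a 16-bit PCM WAV file."""
    # Clip to [-1, 1], then scale to the signed 16-bit range.
    pcm = array.array(
        "h", (int(round(max(-1.0, min(1.0, s)) * 32767)) for s in samples)
    )
    if sys.byteorder == "big":
        pcm.byteswap()  # WAV stores samples little-endian
    with wave.open(path, "wb") as wav:
        wav.setnchannels(1)
        wav.setsampwidth(2)  # 2 bytes per sample = 16-bit audio
        wav.setframerate(sample_rate)
        wav.writeframes(pcm.tobytes())


# Stand-in for a decoded waveform: half a second of a 440 Hz tone.
tone = [0.3 * math.sin(2 * math.pi * 440 * n / SAMPLE_RATE)
        for n in range(SAMPLE_RATE // 2)]
save_wav("gen.wav", tone)

with wave.open("gen.wav", "rb") as wav:
    print(wav.getframerate(), wav.getnframes())  # 16000 8000
```

Clipping before scaling keeps occasional out-of-range samples from overflowing the 16-bit integer range.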