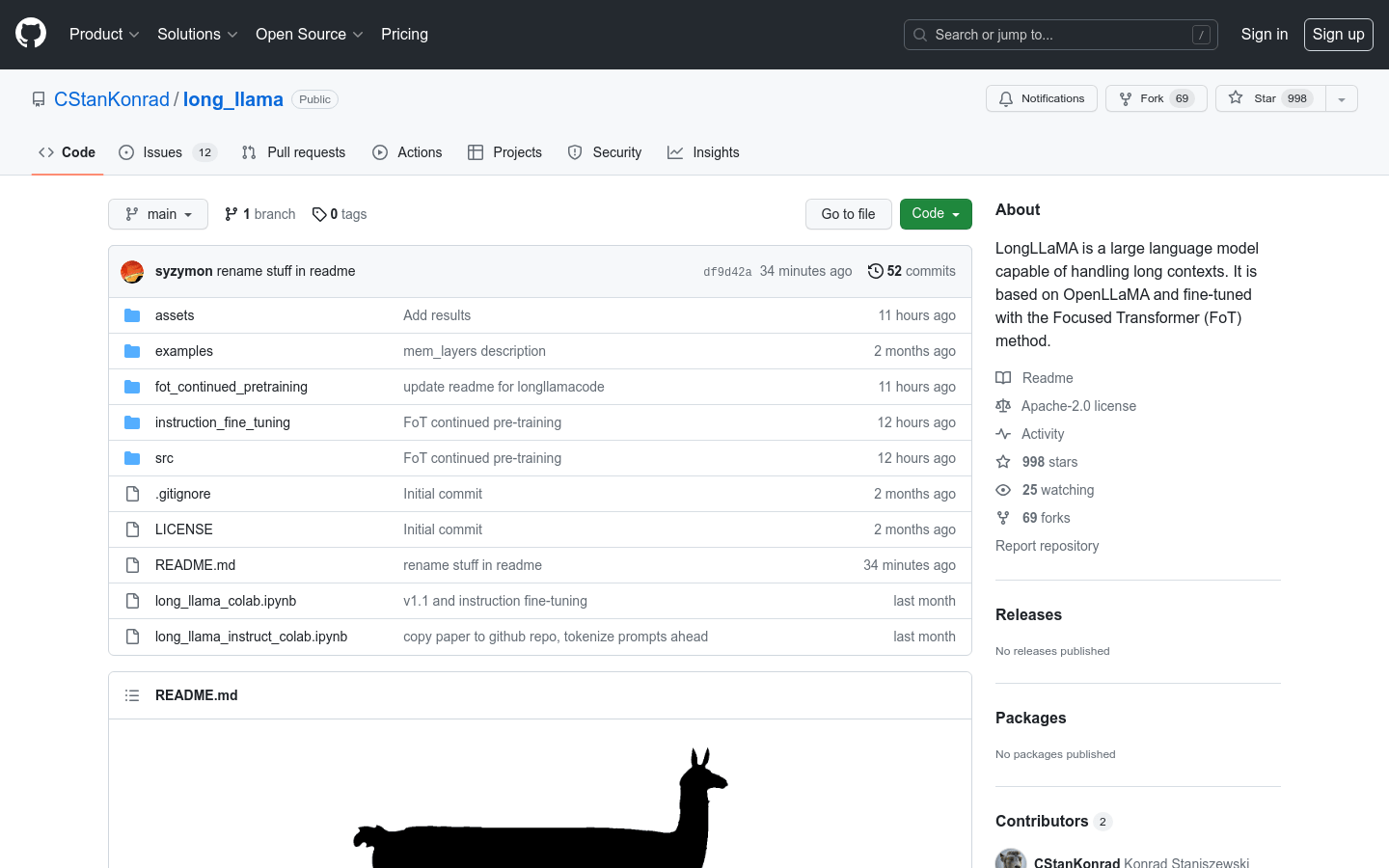

LongLLaMA is a large language model capable of processing long texts. It is based on OpenLLaMA and fine-tuned with the Focused Transformer (FoT) method, which lets it handle contexts of 256k tokens or more. We provide a smaller 3B base model (without instruction tuning) on Hugging Face, together with inference code that supports longer contexts. For short contexts of up to 2048 tokens, our model weights can serve as a drop-in replacement for LLaMA in existing implementations. We also provide evaluation results and comparisons with the original OpenLLaMA model.

Target users:

Suitable for a range of natural language processing tasks, such as text generation, text classification, and question answering.

Product features:

Long-text processing

Supports inputs of 256k tokens or longer

Applicable to a range of natural language processing tasks