Magic 1-For-1 is an efficient video generation model whose core function is to quickly turn text and images into video. It reduces memory usage and inference latency by splitting the text-to-video task into two subtasks: text-to-image generation and image-to-video generation. Its main advantages are high efficiency, low latency and scalability. The model was developed by the DA-Group at Peking University to advance interactive foundational video generation. The model and its code are open source and free to use, provided users comply with the open-source license.
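The two-subtask decomposition can be pictured as two composable stages, where the output of the first stage conditions the second. The sketch below is illustrative only: `text_to_image`, `image_to_video` and the `Image`/`Video` types are hypothetical placeholders, not Magic 1-For-1's API, and the default frame count and frame rate are arbitrary.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Image:
    """Hypothetical stand-in for a generated image (the keyframe)."""
    prompt: str
    width: int
    height: int


@dataclass
class Video:
    """Hypothetical stand-in for a generated clip: an ordered list of frames."""
    frames: List[Image]
    fps: int

    @property
    def duration_s(self) -> float:
        # Clip length in seconds = number of frames / frames per second.
        return len(self.frames) / self.fps


def text_to_image(prompt: str, width: int = 512, height: int = 512) -> Image:
    # Stage 1 (placeholder): a real text-to-image model would render a keyframe here.
    return Image(prompt=prompt, width=width, height=height)


def image_to_video(first_frame: Image, num_frames: int, fps: int) -> Video:
    # Stage 2 (placeholder): a real image-to-video model would animate the keyframe.
    # Here every frame simply repeats the conditioning image.
    return Video(frames=[first_frame] * num_frames, fps=fps)


def text_to_video(prompt: str, num_frames: int = 48, fps: int = 24) -> Video:
    """Compose the two subtasks: text -> image -> video."""
    keyframe = text_to_image(prompt)
    return image_to_video(keyframe, num_frames=num_frames, fps=fps)


if __name__ == "__main__":
    clip = text_to_video("a red kite over the sea")
    print(len(clip.frames), clip.duration_s)
```

Because the two stages only exchange an image, each one can be run, tested or replaced on its own in this sketch.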

Target audience:

This model suits users who need to produce video content quickly, such as video creators, advertising producers and content developers. It helps them generate high-quality videos in a short time, saving time and effort and improving creative efficiency. Because it is open source, it is also well suited to further development and research by researchers and developers.

Example usage scenarios:

Video creators can use the model to quickly generate video footage and work more efficiently.

Advertising producers can use the model to quickly produce advertising videos and reduce production costs.

Researchers can build on the model to explore new video generation techniques.

Product Features:

Efficient image-to-video generation: a one-minute video clip can be generated in about one minute.

Two-stage generation (text-to-image, then image-to-video) that reduces memory usage and inference latency.

Quantization support to further improve inference efficiency.

Supports both single-GPU and multi-GPU inference, adapting flexibly to different hardware setups.

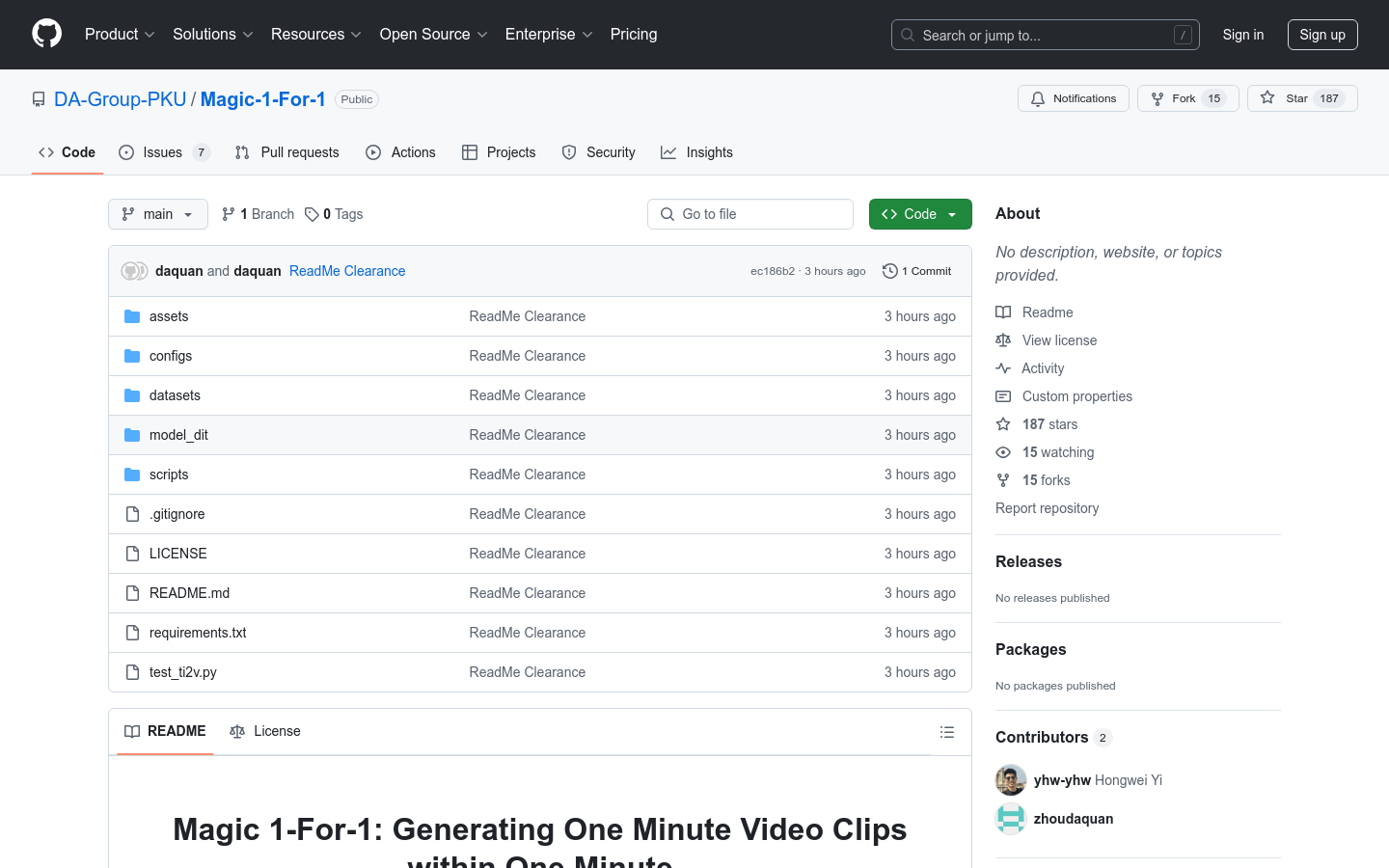

Open-source code and model weights make secondary development and research convenient.

Detailed documentation and scripts help users get started quickly.

Supports downloading and using a variety of pre-trained model components.
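The quantization feature is not described in detail here. As a generic illustration of what weight quantization does (not Magic 1-For-1's actual scheme, which may differ in bit width, granularity and tooling), the sketch below applies symmetric per-tensor int8 quantization: each weight is stored as an 8-bit integer plus one shared floating-point scale. The function names are hypothetical.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor int8 quantization: w is approximated by q * scale."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    # One scale for the whole tensor maps the largest magnitude to 127.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the int8 range used here.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from the int8 codes."""
    return [v * scale for v in q]


if __name__ == "__main__":
    w = [0.5, -1.27, 0.0, 1.27]
    q, s = quantize_int8(w)
    print(q, s)
    print(dequantize_int8(q, s))
```

Storing 8-bit integers instead of 32-bit floats cuts weight memory by roughly 4x (2x relative to 16-bit floats), at the cost of a rounding error of at most half a scale step per weight.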

How to use:

1. Install git-lfs and create the project environment with conda.

2. Install the project dependencies by running pip install -r requirements.txt.

3. Create the weights directory pretrained_weights and download the model weights and related components into it.

4. Run python test_ti2v.py or bash scripts/run_flashatt3.sh to perform inference.

5. Enable quantization or adjust the multi-GPU configuration as needed.
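The setup and inference steps above can be collected into a small dry-run helper that prints the commands in order and checks that the weights directory has been populated. This is a sketch under stated assumptions: it assumes git-lfs is already installed on the system (`git lfs install` is the standard command that initializes it for the current user), it omits the conda command because the environment name and Python version are not given here, and it does not model the weight download, quantization or multi-GPU options because their exact commands and flags are not specified. `build_plan` and `weights_dir_ready` are hypothetical helper names.

```python
import shlex
from pathlib import Path
from typing import List

WEIGHTS_DIR = Path("pretrained_weights")  # directory name from step 3


def build_plan(use_flash_attention_script: bool = False) -> List[List[str]]:
    """Return the commands from the steps above, in order, as argument lists."""
    plan = [
        ["git", "lfs", "install"],                     # step 1: initialize git-lfs
        ["pip", "install", "-r", "requirements.txt"],  # step 2: dependencies
    ]
    # Step 3 (downloading weights into pretrained_weights) is left to the user.
    if use_flash_attention_script:
        plan.append(["bash", "scripts/run_flashatt3.sh"])  # step 4, script variant
    else:
        plan.append(["python", "test_ti2v.py"])            # step 4, Python variant
    return plan


def weights_dir_ready(path: Path = WEIGHTS_DIR) -> bool:
    """True if the weights directory exists and contains at least one entry."""
    return path.is_dir() and any(path.iterdir())


if __name__ == "__main__":
    if not weights_dir_ready():
        print(f"Download the model weights into {WEIGHTS_DIR}/ first (step 3).")
    for cmd in build_plan():
        print(shlex.join(cmd))  # dry run: print each command instead of executing it
```

Printing rather than executing keeps the helper safe to run before the environment exists; each argument list could be passed to `subprocess.run` once the steps have been checked.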