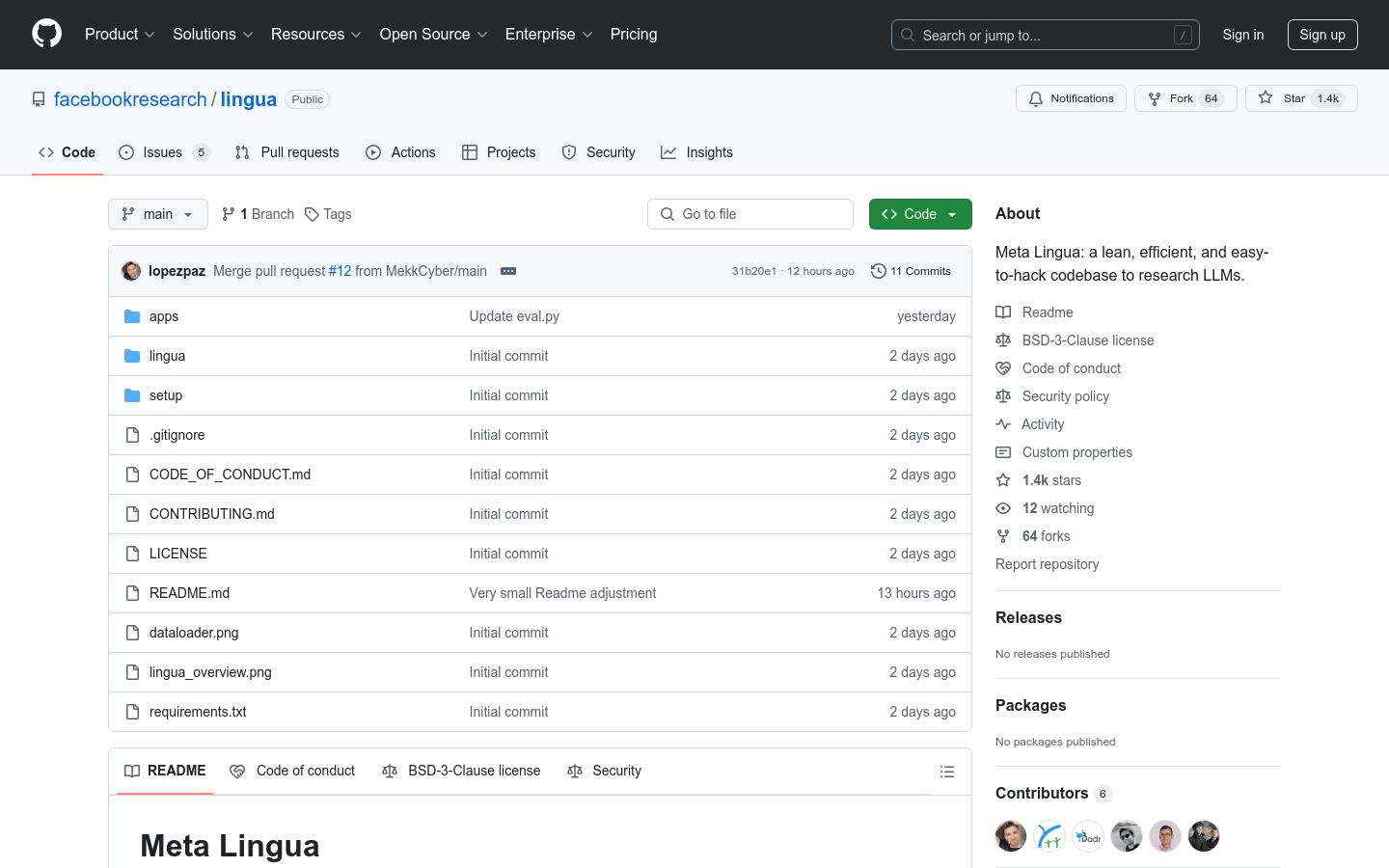

What is Meta Lingua?

Meta Lingua is a lightweight and efficient library for training and inference of large language models (LLMs), tailored for research purposes. Built using PyTorch components, it allows researchers to experiment with new architectures, loss functions, and datasets easily. The library supports end-to-end training, inference, and evaluation, providing tools to analyze model performance and stability.

Target Audience:

Meta Lingua is aimed at researchers, developers, and students in natural language processing and machine learning. Its flexibility and ease of use make it well suited to exploring novel LLM architectures and training strategies.

Usage Scenarios:

Researchers can train custom LLMs for text generation tasks.

Developers can optimize model performance and resource utilization across multiple GPUs.

Students can learn how to build and train large language models.

Key Features:

Models are built using PyTorch components, making it easy to modify and experiment with new architectures.

Supports parallelism strategies such as data parallelism and model parallelism, along with activation checkpointing to reduce memory use.

Offers distributed training support for multi-GPU setups.

Includes dataloaders for pre-training LLMs.

Integrates performance analysis tools to assess memory and computational efficiency.

Provides checkpoint management, so a model saved on one number of GPUs can be reloaded on a different number.

Supports configuration files and command-line arguments for flexible experiment setup and iteration.
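
To make the last point concrete, here is a minimal sketch of how file-based defaults and command-line overrides can be combined. It uses only the Python standard library and is not Meta Lingua's actual implementation; the function names (`parse_value`, `apply_overrides`), the config keys, and the dotted `key=value` override syntax are all assumptions chosen for illustration.

```python
import json
from copy import deepcopy


def parse_value(text):
    """Interpret an override value as JSON when possible, else keep it as a string."""
    try:
        return json.loads(text)
    except json.JSONDecodeError:
        return text


def apply_overrides(config, overrides):
    """Return a copy of `config` with dotted key=value overrides applied."""
    merged = deepcopy(config)
    for item in overrides:
        key, sep, raw = item.partition("=")
        if not sep:
            raise ValueError(f"expected key=value, got {item!r}")
        *parents, leaf = key.split(".")
        node = merged
        for part in parents:
            # Walk into nested sections, creating them when missing.
            node = node.setdefault(part, {})
        node[leaf] = parse_value(raw)
    return merged


# Hypothetical defaults, standing in for values loaded from a config file.
defaults = {
    "steps": 1000,
    "optim": {"lr": 3e-4, "warmup": 100},
    "model": {"dim": 512, "n_layers": 8},
}

# Hypothetical overrides, as they might arrive from the command line.
config = apply_overrides(
    defaults, ["optim.lr=1e-3", "model.n_layers=12", "name=debug_run"]
)
print(config)
```

Keeping the defaults untouched and returning a merged copy makes it easy to record exactly which values an experiment changed relative to its base configuration.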

Getting Started:

1. Clone the Meta Lingua repository to your local machine.

2. Navigate to the repository directory and run the setup script to create the environment.

3. Activate the created environment.

4. Start the training script with one of the provided configurations or a custom one.

5. Monitor the training process and adjust configuration parameters as needed.

6. Use evaluation scripts to assess the model at given checkpoints.

7. Use the analysis tools to check the model’s performance and resource usage.
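
As an illustration of the monitoring step above, the sketch below flags loss spikes in a sequence of logged training losses, one simple signal of training instability. It does not use Meta Lingua's own tooling; the function name `find_loss_spikes`, the window size, the threshold ratio, and the sample loss values are all hypothetical.

```python
from collections import deque
from statistics import mean


def find_loss_spikes(losses, window=5, ratio=1.5):
    """Return step indices whose loss exceeds `ratio` times the mean
    of the previous `window` non-spike losses."""
    recent = deque(maxlen=window)
    spikes = []
    for step, loss in enumerate(losses):
        if len(recent) == window and loss > ratio * mean(recent):
            # Record the spike but keep it out of the baseline window.
            spikes.append(step)
        else:
            recent.append(loss)
    return spikes


# Hypothetical per-step training losses with one obvious spike.
losses = [4.0, 3.6, 3.3, 3.1, 3.0, 2.9, 6.5, 2.8, 2.7]
print(find_loss_spikes(losses))
```

A flagged step is a cue to inspect the run more closely, for example by lowering the learning rate in the configuration or resuming from an earlier checkpoint.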