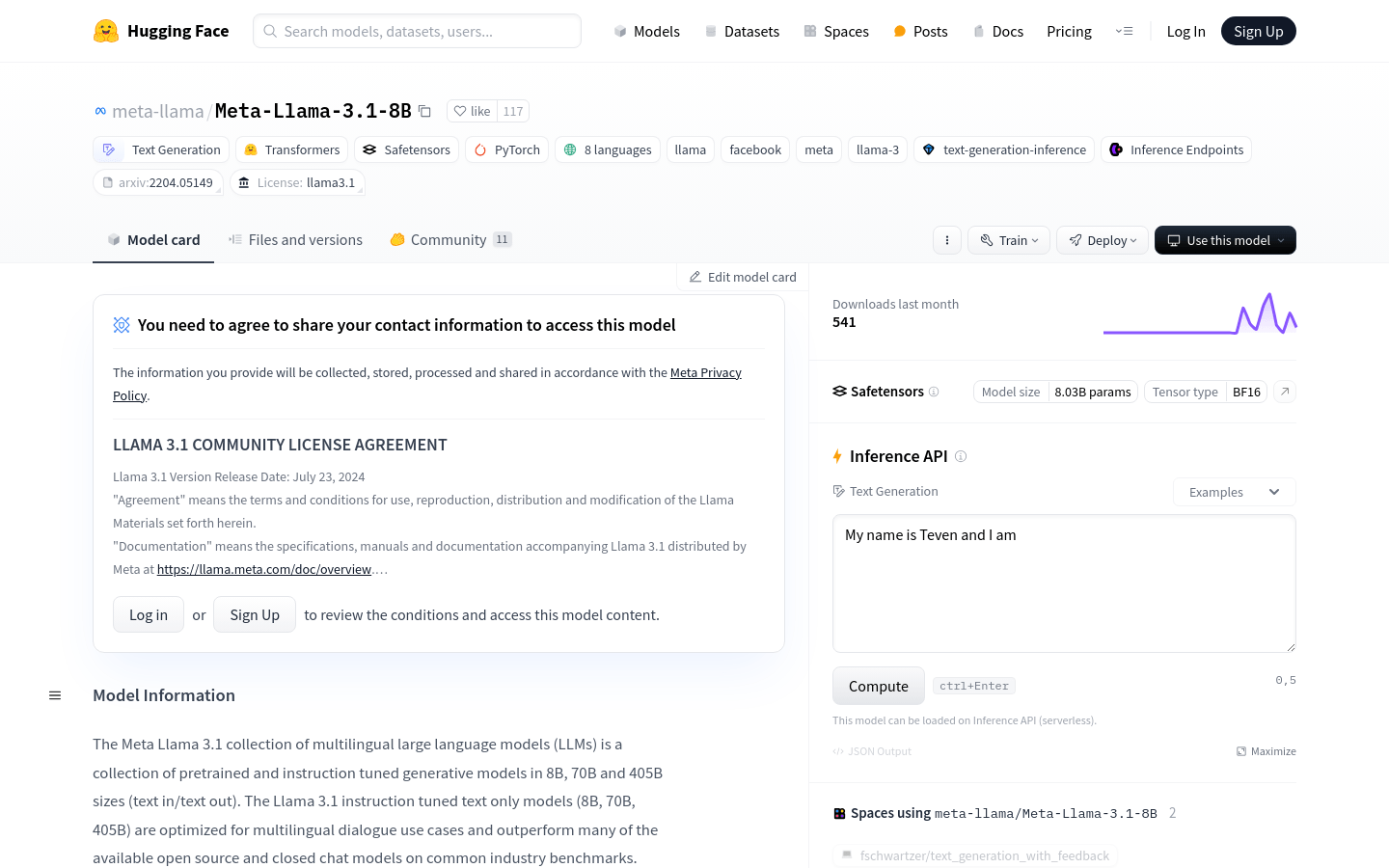

Meta Llama 3.1 is a family of pre-trained and instruction-tuned multilingual large language models (LLMs) in 8B, 70B, and 405B parameter sizes. The models support 8 languages, are optimized for multilingual dialogue use cases, and perform strongly on common industry benchmarks. Llama 3.1 is an autoregressive language model built on an optimized Transformer architecture; the tuned versions use supervised fine-tuning (SFT) and reinforcement learning with human feedback (RLHF) to improve the model's helpfulness and safety.

Target audience:

The model is aimed at researchers and developers working on natural language processing and dialogue systems in multilingual environments. It suits them because it offers multilingual support, can handle complex dialogue scenarios, and is trained with techniques that improve its helpfulness and safety.

Example usage scenarios:

Build multilingual chatbots that provide user consultation and support services

Integrate into a cross-language content creation platform to help users generate content suited to the target language and culture

Serve as a research tool for multilingual translation and language understanding, supporting progress in natural language processing research

Product Features:

Supports text generation and dialogue capabilities in 8 languages

Uses an optimized Transformer architecture to improve model performance

Trained with supervised fine-tuning and reinforcement learning with human feedback to align with human preferences

Accepts multilingual text as input and generates multilingual text as output

Provides pre-trained (base) and instruction-tuned models to suit different natural language generation tasks

Supports the use of model output to improve other models, including synthetic data generation and model distillation

How to use:

1. Install the necessary libraries and tools such as Transformers and PyTorch.

2. Use pip to upgrade the Transformers library to the latest version (pip install --upgrade transformers).

3. Import the Transformers library and PyTorch library to prepare for model loading.

4. Load the Meta-Llama-3.1-8B model by specifying the model ID.

5. Use the Transformers pipeline API or the model's generate() method for text generation or dialogue interaction.

6. Adjust model parameters such as device mapping and data types as needed.

7. Call the model to generate text or respond to user input.
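
The steps above can be sketched in Python roughly as follows. This is a minimal sketch under stated assumptions, not an official recipe: the Hub ID `meta-llama/Meta-Llama-3.1-8B` is assumed (access to the weights is gated behind Meta's license), `device_map="auto"` assumes the `accelerate` package is installed, and the helper names (`generation_kwargs`, `load_pipeline`, `generate_with_model`, `main`) and the sampling defaults are hypothetical, chosen only for illustration.

```python
# Steps 1-2, run in a shell first:
#   pip install --upgrade transformers torch accelerate

# Assumed Hugging Face Hub ID for the 8B base model (gated; license must be accepted).
MODEL_ID = "meta-llama/Meta-Llama-3.1-8B"


def generation_kwargs(max_new_tokens: int = 64, temperature: float = 0.7) -> dict:
    """Illustrative sampling settings (hypothetical defaults; tune as needed)."""
    return {
        "max_new_tokens": max_new_tokens,
        "do_sample": True,
        "temperature": temperature,
    }


def load_pipeline(model_id: str = MODEL_ID, dtype_name: str = "bfloat16"):
    """Steps 3, 4 and 6: import the libraries and build a text-generation pipeline."""
    # Imports are deferred so the file can be imported without the heavy libraries.
    import torch
    import transformers

    return transformers.pipeline(
        "text-generation",
        model=model_id,
        model_kwargs={"torch_dtype": getattr(torch, dtype_name)},  # data type
        device_map="auto",  # device mapping; relies on the accelerate package
    )


def generate_with_model(prompt: str, model_id: str = MODEL_ID,
                        dtype_name: str = "bfloat16", **gen_kwargs) -> str:
    """Alternative for step 5: call generate() on the model directly."""
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(
        model_id,
        torch_dtype=getattr(torch, dtype_name),
        device_map="auto",
    )
    # Tokenize the prompt and move the tensors to the model's device.
    inputs = tokenizer(prompt, return_tensors="pt").to(model.device)
    output_ids = model.generate(**inputs, **gen_kwargs)
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)


def main() -> None:
    """Step 7: generate text. Calling this downloads the model weights."""
    generator = load_pipeline()
    result = generator("The capital of France is", **generation_kwargs())
    print(result[0]["generated_text"])
```

Calling `main()` runs the pipeline path end to end; `generate_with_model("some prompt", **generation_kwargs())` exercises the `generate()` path instead. The 8B base model continues a prompt rather than following a chat format, so for dialogue interaction the instruction-tuned variant is generally the one to load.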