What is Mistral-Nemo-Instruct-2407?

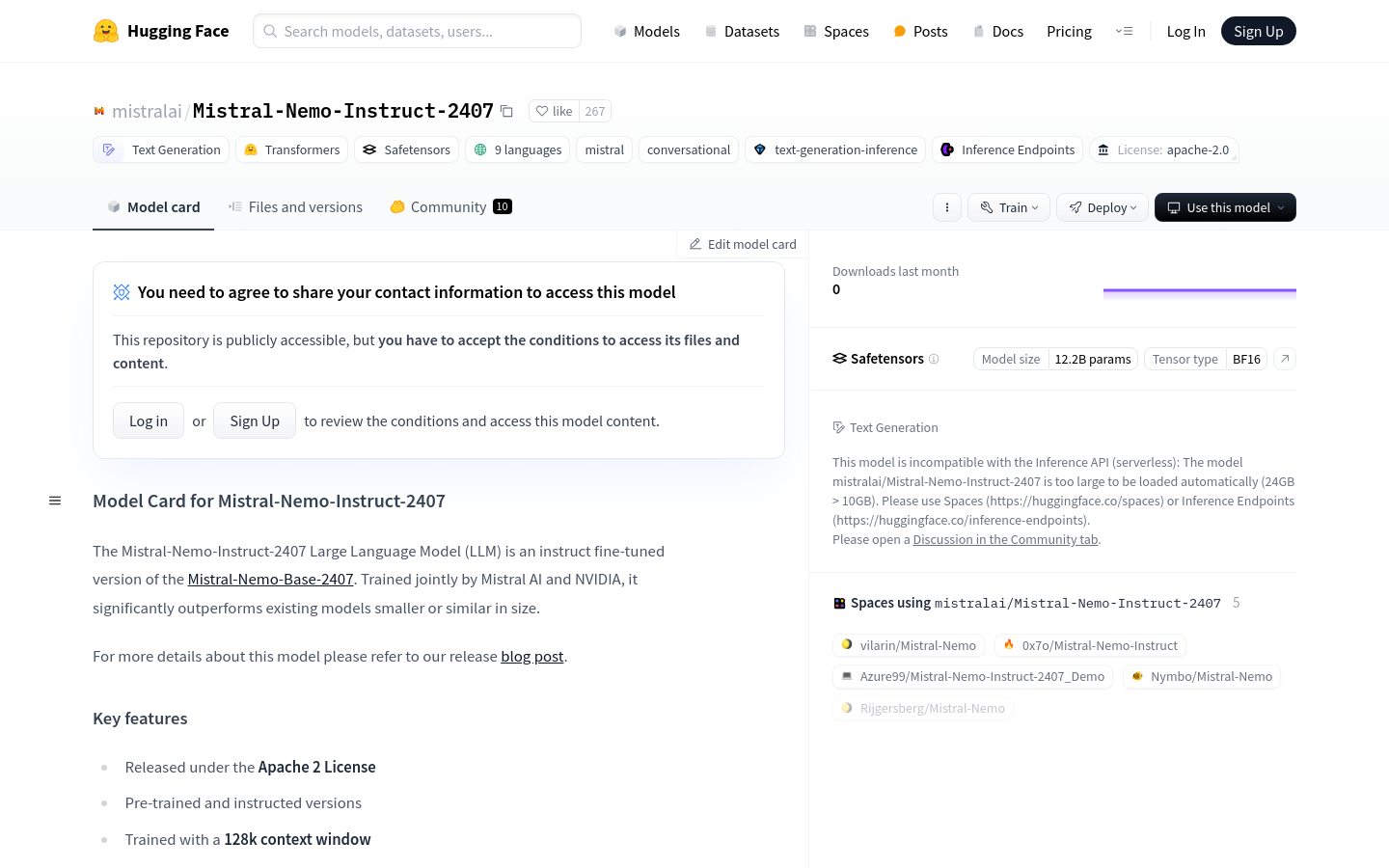

Mistral-Nemo-Instruct-2407 is a large language model (LLM) trained jointly by Mistral AI and NVIDIA. It is the instruction fine-tuned version of Mistral-Nemo-Base-2407. It was trained on a large proportion of multilingual and code data and significantly outperforms existing models of similar or smaller size.

Key Features:

Trained on a large proportion of multilingual and code data

Has a 128k context window

Drop-in replacement for Mistral 7B

Model Architecture:

40 layers

Model dimension: 5,120

Head dimension: 128

Hidden (feed-forward) dimension: 14,336

32 attention heads

8 key-value attention heads (GQA)

2^17 vocabulary size (approximately 128k)

Rotary embeddings (theta = 1M)
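
The figures above imply a few derived quantities that are easy to check by hand. The sketch below is only illustrative arithmetic: it reads 128 as the per-head dimension and 32 as the number of query heads (as on the published model card), and it assumes a 16-bit KV cache, which is a choice made for this example rather than something stated above.

```python
# Architecture values as listed on the model card (assumed here, not measured).
N_LAYERS = 40
MODEL_DIM = 5120
HEAD_DIM = 128
N_HEADS = 32          # query heads
N_KV_HEADS = 8        # key-value heads (GQA)
VOCAB_SIZE = 2 ** 17
BYTES_PER_VALUE = 2   # assumption: bf16/fp16 KV cache

# GQA: several query heads share one key-value head.
queries_per_kv_head = N_HEADS // N_KV_HEADS

# Projection widths. The query width (32 * 128) is not equal to MODEL_DIM.
q_width = N_HEADS * HEAD_DIM
kv_width = N_KV_HEADS * HEAD_DIM

# KV cache per token: one key and one value vector for every layer.
kv_bytes_per_token = 2 * N_LAYERS * kv_width * BYTES_PER_VALUE


def kv_cache_gib(n_tokens: int) -> float:
    """Approximate KV cache size in GiB for a sequence of n_tokens."""
    return n_tokens * kv_bytes_per_token / 2 ** 30


print(queries_per_kv_head)       # 4
print(VOCAB_SIZE)                # 131072
print(kv_bytes_per_token)        # 163840
print(kv_cache_gib(128 * 1024))  # 20.0
```

With 8 key-value heads instead of 32, the cache is a quarter of what full multi-head attention would need, which matters when filling a 128k-token window.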

Performance:

Compares favorably with models of similar or smaller size on benchmarks such as HellaSwag, Winogrande, and OpenBookQA

Target Audience:

Developers and researchers who need to handle large volumes of text and multilingual data

Usage Scenarios:

Text generation based on specific instructions

Machine translation in multilingual environments

Function calling, such as retrieving current weather information through a tool call
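
To make the weather scenario concrete, here is a minimal, library-free sketch of the application side of function calling: a tool is described with a JSON schema, the model replies with a tool name and JSON arguments, and the application runs the matching function. Everything named here (`get_current_weather`, `WEATHER_TOOL`, `run_tool_call`, the stubbed temperature) is hypothetical and written for illustration; it is not the API of any Mistral library.

```python
import json


def get_current_weather(location: str, unit: str = "celsius") -> dict:
    """Hypothetical tool; a real one would query a weather service."""
    return {"location": location, "temperature": 22, "unit": unit}  # stubbed data


# JSON-schema description of the tool that would be sent along with the prompt.
WEATHER_TOOL = {
    "type": "function",
    "function": {
        "name": "get_current_weather",
        "description": "Get the current weather for a location",
        "parameters": {
            "type": "object",
            "properties": {
                "location": {"type": "string", "description": "City name, e.g. Paris"},
                "unit": {"type": "string", "enum": ["celsius", "fahrenheit"]},
            },
            "required": ["location"],
        },
    },
}

# Maps tool names to the Python callables that implement them.
TOOL_REGISTRY = {"get_current_weather": get_current_weather}


def run_tool_call(tool_call: dict) -> str:
    """Execute one model-emitted tool call and return the result as JSON."""
    name = tool_call["name"]
    if name not in TOOL_REGISTRY:
        raise ValueError(f"unknown tool: {name}")
    arguments = tool_call["arguments"]
    if isinstance(arguments, str):  # arguments often arrive as a JSON string
        arguments = json.loads(arguments)
    return json.dumps(TOOL_REGISTRY[name](**arguments))


# Hand-written stand-in for what a model might emit.
example_call = {"name": "get_current_weather", "arguments": '{"location": "Paris"}'}
print(run_tool_call(example_call))
```

The JSON result would then be passed back to the model as a tool message so it can phrase the final answer.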

Product Highlights:

Trained on multilingual and code data

128k context window

Strong text processing capabilities, supported by its architecture

Outstanding performance in various benchmarks

Getting Started Guide:

1. Install mistral_inference, the recommended library for running the model

2. Download model files including params.json, consolidated.safetensors, and tekken.json

3. Use the mistral-chat CLI to chat with the model

4. Alternatively, generate text with the Hugging Face transformers library and its pipeline function

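5. Try function calling, for example retrieving current weather information, using the Tool and Function classes from mistral_common

6. Adjust generation parameters such as temperature to tune outputs; the model card recommends a lower temperature (0.3) than for earlier Mistral models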

7. Refer to the model card for detailed information and usage limitations
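
Steps 2, 4, and 6 above can be sketched in Python as follows. This is a rough outline, not a definitive setup: it assumes the `huggingface_hub` and `transformers` packages are installed, that the repository id is `mistralai/Mistral-Nemo-Instruct-2407`, and that your account has access to it. The helper names (`download_model_files`, `build_messages`, `generate`) are made up for this example, and the heavy imports sit inside the functions so nothing is downloaded until you call them.

```python
from typing import Dict, List

REPO_ID = "mistralai/Mistral-Nemo-Instruct-2407"  # assumed Hugging Face repo id
MODEL_FILES = ["params.json", "consolidated.safetensors", "tekken.json"]


def download_model_files(local_dir: str) -> str:
    """Step 2: fetch only the three files listed above into local_dir."""
    from huggingface_hub import snapshot_download  # pip install huggingface_hub

    return snapshot_download(
        repo_id=REPO_ID,
        allow_patterns=MODEL_FILES,
        local_dir=local_dir,
    )


def build_messages(user_prompt: str) -> List[Dict[str, str]]:
    """Wrap a prompt in the chat-message format the pipeline accepts."""
    return [{"role": "user", "content": user_prompt}]


def generate(user_prompt: str, temperature: float = 0.3, max_new_tokens: int = 128) -> str:
    """Steps 4 and 6: generate a reply with the transformers pipeline."""
    from transformers import pipeline  # pip install transformers

    chatbot = pipeline("text-generation", model=REPO_ID)
    outputs = chatbot(
        build_messages(user_prompt),
        max_new_tokens=max_new_tokens,
        do_sample=True,            # sampling must be on for temperature to apply
        temperature=temperature,   # low value, following the model card's advice
    )
    # With chat input, recent transformers versions return the whole
    # conversation; the last message is the assistant's reply.
    return outputs[0]["generated_text"][-1]["content"]
```

A typical session would call `download_model_files(...)` once, point the mistral-chat CLI at that directory for interactive use, and call `generate("Explain GQA in two sentences.")` when going through transformers instead. Loading the full model needs a GPU with enough memory for a 12B-parameter checkpoint.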