What is Nemotron-4-340B-Base?

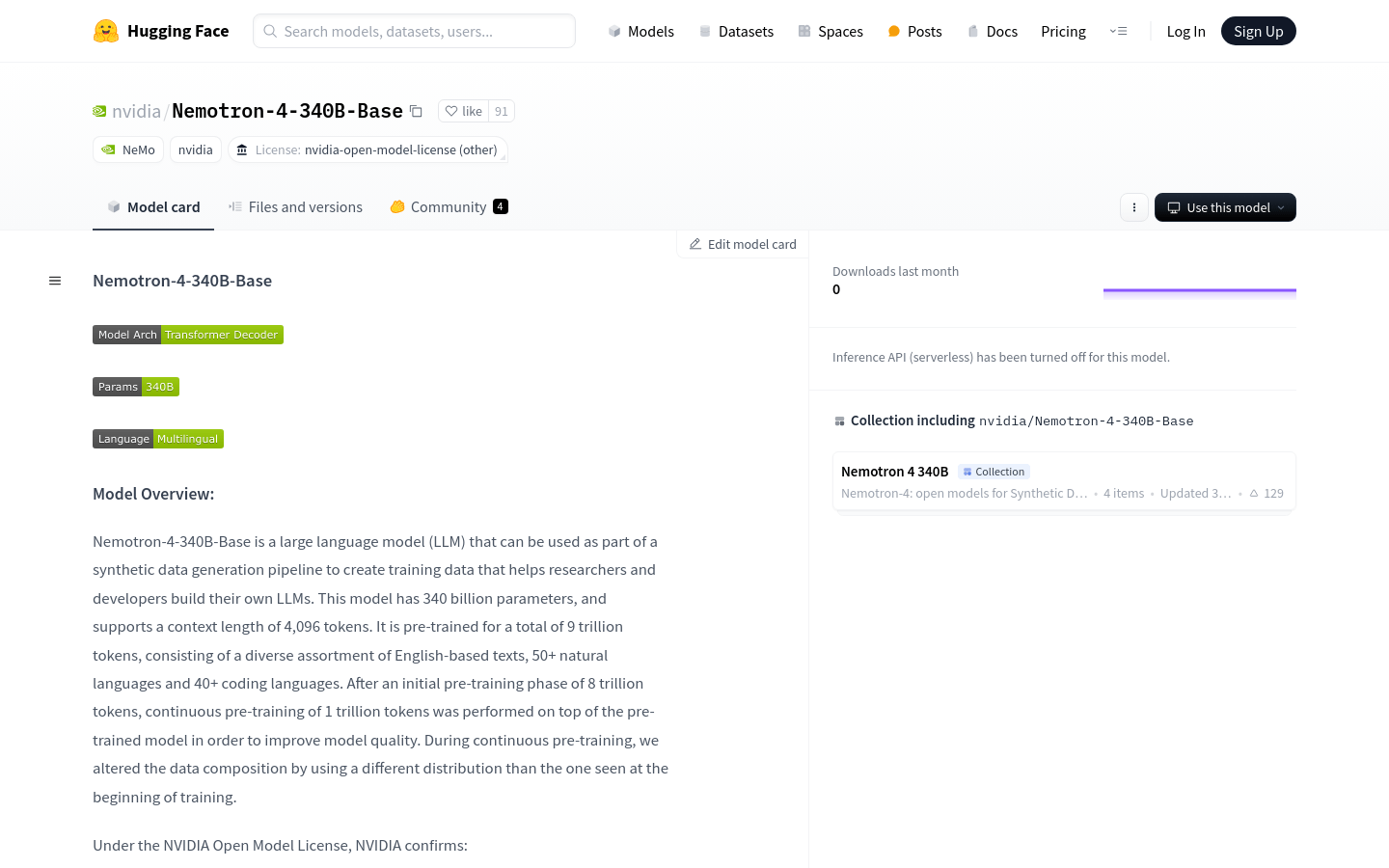

Nemotron-4-340B-Base is a 340-billion-parameter large language model developed by NVIDIA. It supports a context length of 4,096 tokens and is intended for generating synthetic data that researchers and developers can use to build their own large language models. The model was pre-trained on 9 trillion tokens spanning more than 50 natural languages and over 40 programming languages.

Target Audience:

The primary audience is researchers and developers who need to build or train their own large language models. Because the model covers many natural languages and programming languages, it also suits multilingual applications and code generation tools.

Example Scenarios:

Researchers can use Nemotron-4-340B-Base to generate training data for domain-specific language models.

Developers can utilize the model’s multilingual capabilities to create chatbots that support multiple languages.

Educational institutions can use the model to help students learn programming by generating example code that illustrates complex concepts.
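Because this is a pre-trained base model rather than an instruction-tuned one, synthetic data generation usually works best when the prompt shows a pattern for the model to continue. The sketch below builds such a few-shot prompt. The function name, the "Input:"/"Output:" layout, and the sample texts are all illustrative assumptions, not a format prescribed by NVIDIA.

```python
def build_few_shot_prompt(task, examples, new_input):
    """Format worked examples so a base (completion) model continues the pattern."""
    lines = [task.strip(), ""]
    for source, target in examples:
        lines += [f"Input: {source}", f"Output: {target}", ""]
    # Leave the final "Output:" open so the model writes the new sample.
    lines += [f"Input: {new_input}", "Output:"]
    return "\n".join(lines)


# Hypothetical domain-specific example: turning terse notes into plain language.
prompt = build_few_shot_prompt(
    "Rewrite each clinical note as a plain-language summary.",
    [("Pt c/o SOB x3d.", "The patient has had shortness of breath for three days.")],
    "Pt denies CP.",
)
```

The resulting string is sent to the model as the text to complete. Keep the total prompt plus the requested output within the 4,096-token context.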

Key Features:

Supports over 50 natural languages and more than 40 programming languages.

Compatible with the NVIDIA NeMo framework, providing parameter-efficient fine-tuning and model alignment tools.

Uses Grouped-Query Attention (GQA) and Rotary Position Embeddings (RoPE).

Pre-trained on 9 trillion tokens, including diverse English text alongside multilingual and source-code data.

Supports BF16 inference and can be deployed on various hardware configurations.

Comes with published 5-shot and zero-shot evaluation results that demonstrate its multilingual understanding and code generation abilities.
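To put the BF16 deployment point in concrete terms, here is a rough back-of-the-envelope estimate of the memory needed for the weights alone. BF16 stores each parameter in 2 bytes. The 80 GB device size is a hypothetical figure chosen for illustration, and the estimate ignores activations, the KV cache, and framework overhead, so real deployments need more headroom.

```python
import math


def weight_memory_gb(num_params, bytes_per_param=2):
    """Memory for the weights alone, in decimal gigabytes (BF16 = 2 bytes)."""
    return num_params * bytes_per_param / 1e9


def min_gpus_for_weights(num_params, gpu_memory_gb, bytes_per_param=2):
    """Lower bound on GPU count; ignores activations, KV cache, and overhead."""
    return math.ceil(weight_memory_gb(num_params, bytes_per_param) / gpu_memory_gb)


weights_gb = weight_memory_gb(340e9)         # 340e9 params * 2 bytes = 680.0 GB
gpus_80gb = min_gpus_for_weights(340e9, 80)  # 9, assuming hypothetical 80 GB devices
```

At roughly 680 GB of weights, the model cannot fit on a single GPU, which is why the usage steps involve distributing it across several devices or nodes.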

Usage Guide:

1. Download and install the NVIDIA NeMo framework.

2. Set up the required hardware environment, including GPUs that support BF16 inference. A model of this size needs multiple GPUs.

3. Create a Python script to interact with the deployed model.

4. Write a Bash script to start the inference server.

5. Use the Slurm job scheduler to distribute the model across multiple nodes and associate those nodes with the inference server.

6. Send text generation requests through Python scripts and obtain responses from the model.
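The client side of steps 3 and 6 can be sketched as follows, using only the Python standard library. This is a minimal sketch under stated assumptions: the host, port, `/generate` path, the PUT method, and the JSON field names (`sentences`, `tokens_to_generate`, `temperature`) are illustrative and must be checked against the inference server you actually deploy.

```python
import json
import urllib.request

# Assumptions for illustration: host, port, endpoint path, and JSON field
# names are hypothetical and must match your deployed inference server.
SERVER_URL = "http://localhost:1424/generate"


def build_payload(prompt, tokens_to_generate=64, temperature=1.0):
    """Assemble the JSON body for a single-prompt generation request."""
    return {
        "sentences": [prompt],
        "tokens_to_generate": int(tokens_to_generate),
        "temperature": float(temperature),
    }


def build_request(payload, url=SERVER_URL):
    """Wrap the payload in an HTTP PUT request without sending it."""
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="PUT",
    )


def generate(prompt, **kwargs):
    """Send the request and return the decoded JSON response."""
    request = build_request(build_payload(prompt, **kwargs))
    with urllib.request.urlopen(request, timeout=300) as response:
        return json.loads(response.read().decode("utf-8"))
```

Once the server from steps 4 and 5 is running, a call such as `generate("Write a haiku about GPUs.", tokens_to_generate=32)` would return the server's JSON reply. Building the request separately from sending it makes the payload easy to inspect before any network traffic occurs.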