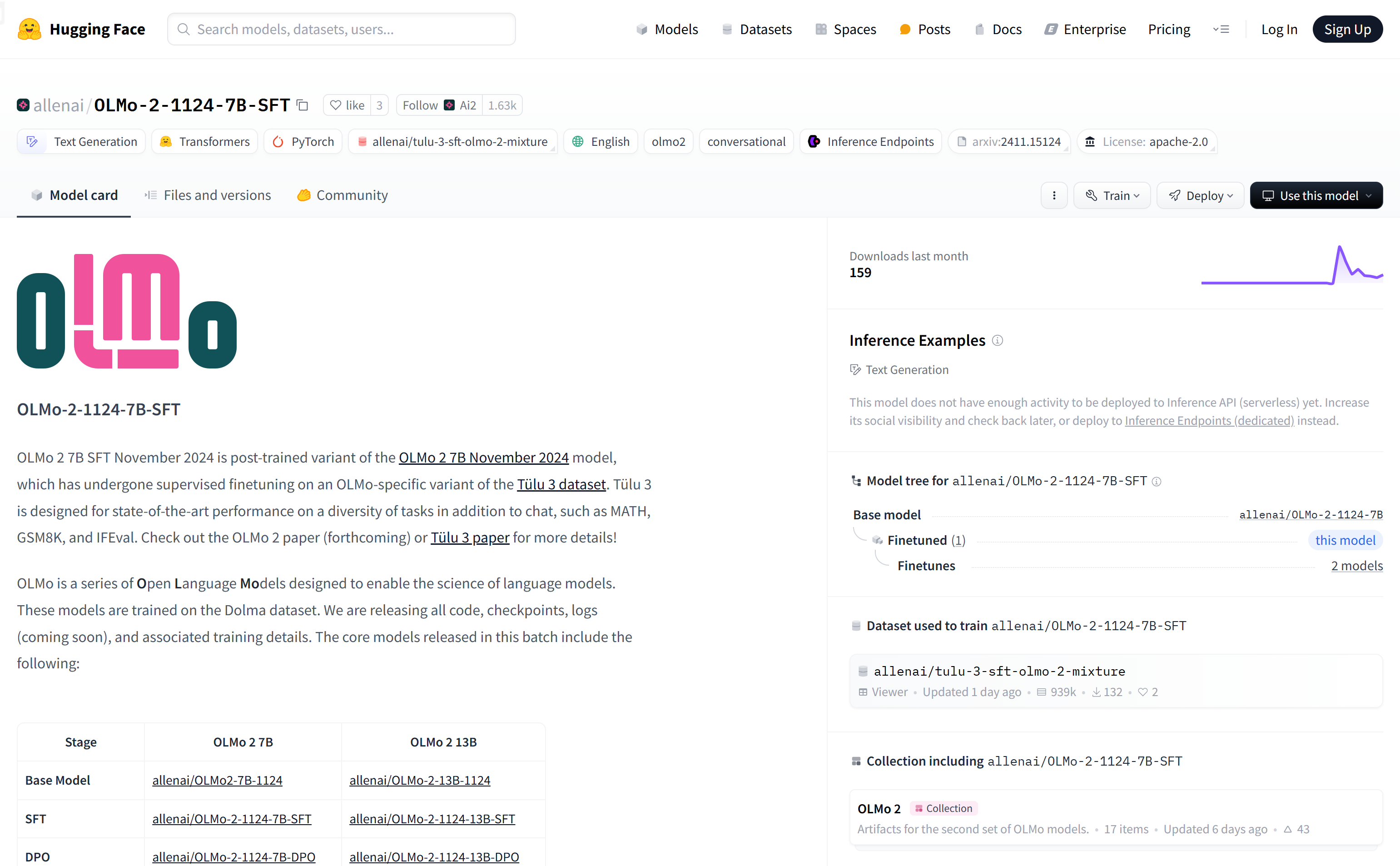

OLMo-2-1124-7B-SFT is an English text generation model released by the Allen Institute for Artificial Intelligence (AI2). It is a supervised fine-tuned version of the OLMo 2 7B model, trained on the Tülu 3 dataset. Tülu 3 is designed to deliver strong performance across diverse tasks, including chat and math question answering, as measured by benchmarks such as GSM8K and IFEval. The model's main advantages are strong text generation, coverage of a diverse set of tasks, and openly released code and training details, which make it a useful tool for research and education.

Target audience:

The target audience is researchers, developers, and educators working in natural language processing. Because of its strong generation capabilities and wide range of application scenarios, the model is particularly suitable for users who need to handle complex language tasks or conduct research on language models.

Example usage scenarios:

Case 1: Researchers use the OLMo-2-1124-7B-SFT model to develop chatbots with more natural and accurate conversations.

Case 2: Educational institutions use the model to generate teaching materials, such as answers to and explanations of math problems, to assist in teaching.

Case 3: Developers integrate the model into their applications to automatically review user-generated content and suggest generated text.

Product Features:

• Provides high-quality text generation based on training with large-scale datasets

• Supports a variety of natural language processing tasks, including chat, math question answering, etc.

• Open source code and training details for easy research and further development

• Supervised fine-tuning improves model performance on specific tasks

• Available on the Hugging Face platform, easy to load and use

• Suitable for research and education, promoting the scientific development of language models

How to use:

1. Visit the Hugging Face platform and search for the OLMo-2-1124-7B-SFT model.

2. Load the model using the provided code snippet: `from transformers import AutoModelForCausalLM; olmo_model = AutoModelForCausalLM.from_pretrained("allenai/OLMo-2-1124-7B-SFT")`.

3. Set a system prompt as needed to define the model's role and behavior.

4. Use the model to perform text generation or other natural language processing tasks.

5. Adjust generation parameters (for example, sampling temperature or maximum output length) based on the model's output to improve results.

6. Integrate the model into larger systems such as chatbots or content generation platforms.

7. Follow the open source license agreement, use the model responsibly, and cite the relevant papers in your research.
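
Steps 2 to 5 can be sketched in Python as follows. This is a minimal sketch rather than an official usage recipe: it assumes PyTorch and a recent `transformers` release with OLMo 2 support are installed, and that the model's tokenizer ships a chat template. The helper names `build_messages` and `generate_reply`, the example prompts, and the default parameter values are illustrative assumptions, not part of the model's documentation.

```python
from typing import Dict, List, Optional

# Model identifier on the Hugging Face Hub (no spaces inside the string).
MODEL_ID = "allenai/OLMo-2-1124-7B-SFT"


def build_messages(user_prompt: str,
                   system_prompt: Optional[str] = None) -> List[Dict[str, str]]:
    """Build a chat-style message list, with an optional system prompt (step 3)."""
    messages: List[Dict[str, str]] = []
    if system_prompt:
        messages.append({"role": "system", "content": system_prompt})
    messages.append({"role": "user", "content": user_prompt})
    return messages


def generate_reply(messages: List[Dict[str, str]],
                   max_new_tokens: int = 128,
                   **generation_kwargs) -> str:
    """Load the model and generate a reply (steps 2, 4 and 5).

    Extra keyword arguments (e.g. do_sample=True, temperature=0.7) are
    passed straight to model.generate so they can be tuned per use case.
    """
    # Imported lazily so build_messages works without transformers installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    model = AutoModelForCausalLM.from_pretrained(MODEL_ID)

    # Render the messages with the tokenizer's chat template and tokenize them.
    inputs = tokenizer.apply_chat_template(
        messages,
        add_generation_prompt=True,
        return_tensors="pt",
        return_dict=True,
    ).to(model.device)

    output_ids = model.generate(
        **inputs, max_new_tokens=max_new_tokens, **generation_kwargs
    )

    # Drop the prompt tokens so only the newly generated text is decoded.
    prompt_length = inputs["input_ids"].shape[-1]
    return tokenizer.decode(output_ids[0][prompt_length:], skip_special_tokens=True)
```

A call such as `generate_reply(build_messages("What is 12 * 7?", system_prompt="You are a helpful math tutor."), do_sample=True, temperature=0.7)` downloads the 7B weights on first use, so it needs a large download and substantial memory. The message-building step is kept separate so the prompt format can be checked without loading the model.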