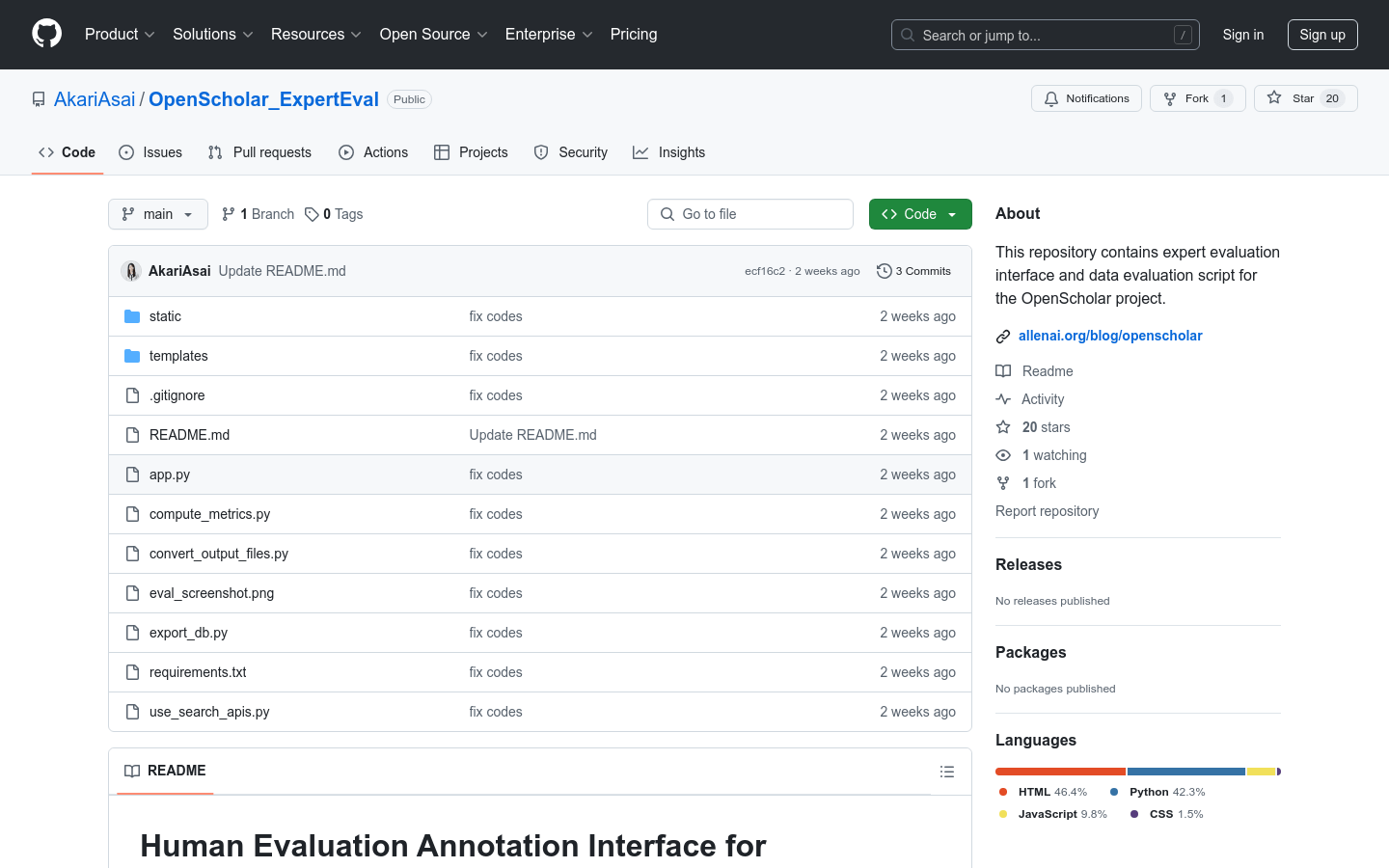

OpenScholar_ExpertEval is a collection of interfaces and scripts for expert evaluation and data evaluation, built to support the OpenScholar project. OpenScholar synthesizes scientific literature using retrieval-augmented language models, and this toolkit supports detailed manual evaluation of the text those models generate. The project comes out of AllenAI research and is intended to help researchers and developers better understand and improve language models.

Target audience:

The target audience is researchers, developers, and educators, especially those working in natural language processing and machine learning. The tool suits them because it provides a platform for evaluating and improving the performance of language models, particularly for scientific literature synthesis.

Example usage scenarios:

Researchers can use the tool to evaluate the accuracy and reliability of scientific literature syntheses generated by different language models.

Educators can use this tool to teach students how to evaluate content generated by AI.

Developers can use this tool to test and improve their own language models.

Product Features:

Manual evaluation interface: a labeling interface that experts use to evaluate model-generated text.

RAG evaluation support: the interface can evaluate retrieval-augmented generation (RAG) models.

Fine-grained evaluation: experts can assess outputs in more detail than a single overall judgment.

Data preparation: evaluation instances are placed in a designated folder in JSONL format.

Result storage: by default, evaluation results are stored in a local database file.

Results export: evaluation results can be exported to Excel files.

Metric computation: scripts compute evaluation metrics and the agreement (consistency) between evaluators.

Interface sharing: the evaluation interface can be deployed on a cloud service so it can be shared with evaluators.
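Since evaluation instances are supplied as JSONL (one JSON object per line), a minimal sketch of preparing such a file may help. It assumes each instance holds a prompt plus the completions of two models; the field names `id`, `prompt`, `completion_a`, and `completion_b`, and the helper functions, are hypothetical and not the schema the interface actually requires, so check the repository's README before using them.

```python
import json
from pathlib import Path

# Hypothetical schema: these field names are illustrative assumptions,
# not the schema required by OpenScholar_ExpertEval.
REQUIRED_FIELDS = ("id", "prompt", "completion_a", "completion_b")


def validate(instance):
    """Raise ValueError if an instance lacks any of the assumed fields."""
    missing = [k for k in REQUIRED_FIELDS if k not in instance]
    if missing:
        raise ValueError(f"instance {instance.get('id')!r} is missing {missing}")


def to_jsonl(instances):
    """Serialize instances as JSONL text: one JSON object per line."""
    instances = list(instances)  # allow generators; we iterate twice
    for inst in instances:
        validate(inst)  # check every instance before producing any output
    return "".join(json.dumps(inst, ensure_ascii=False) + "\n" for inst in instances)


def from_jsonl(text):
    """Parse JSONL text back into a list of dicts, skipping blank lines."""
    return [json.loads(line) for line in text.splitlines() if line.strip()]


def write_instances(instances, path):
    """Write instances to a JSONL file, creating parent folders if needed."""
    path = Path(path)
    text = to_jsonl(instances)  # validation happens before the file is touched
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text(text, encoding="utf-8")
```

For example, `write_instances([{"id": "q1", "prompt": "...", "completion_a": "...", "completion_b": "..."}], "data/my_instances.jsonl")` would write a one-line file into the `data` folder. Validating all instances before writing avoids leaving a half-written file behind when one record is malformed.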

How to use:

1. Set up the environment: follow the instructions in the README to create and activate a virtual environment and install the dependencies.

2. Prepare data: put the evaluation instances in the `data` folder. Each instance should contain a prompt and the completions from two models.

3. Run the application: start the evaluation interface with `python app.py`.

4. Access the interface: open `http://localhost:5001` in a browser.

5. Check progress: you can view evaluation progress at `http://localhost:5001/summary`.

6. Export results: run `python export_db.py` to export the evaluation results to an Excel file.

7. Compute metrics: run `python compute_metrics.py` to compute the evaluation metrics and the agreement between evaluators.
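Which agreement statistic `compute_metrics.py` reports is not described here. As a hedged illustration of the kind of calculation involved, the sketch below computes raw percent agreement and Cohen's kappa for two evaluators' pairwise preferences. The label set (`"A"`, `"B"`, `"tie"`) and the function names are hypothetical and not taken from the repository.

```python
from collections import Counter


def percent_agreement(labels_1, labels_2):
    """Fraction of instances on which two evaluators gave the same label."""
    if len(labels_1) != len(labels_2) or not labels_1:
        raise ValueError("need two non-empty label lists of equal length")
    return sum(a == b for a, b in zip(labels_1, labels_2)) / len(labels_1)


def cohens_kappa(labels_1, labels_2):
    """Cohen's kappa: observed agreement corrected for chance agreement."""
    p_o = percent_agreement(labels_1, labels_2)
    n = len(labels_1)
    c1, c2 = Counter(labels_1), Counter(labels_2)
    # Expected agreement if each evaluator labeled independently at their own rates.
    p_e = sum(c1[k] * c2[k] for k in c1.keys() | c2.keys()) / (n * n)
    if p_e == 1:
        # Both evaluators used one identical label throughout; kappa is undefined.
        return float("nan")
    return (p_o - p_e) / (1 - p_e)
```

For instance, with `["A", "A", "B", "tie"]` and `["A", "B", "B", "tie"]` the evaluators agree on 3 of 4 instances (percent agreement 0.75), while kappa is 7/11 ≈ 0.64 because part of that agreement would be expected by chance.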