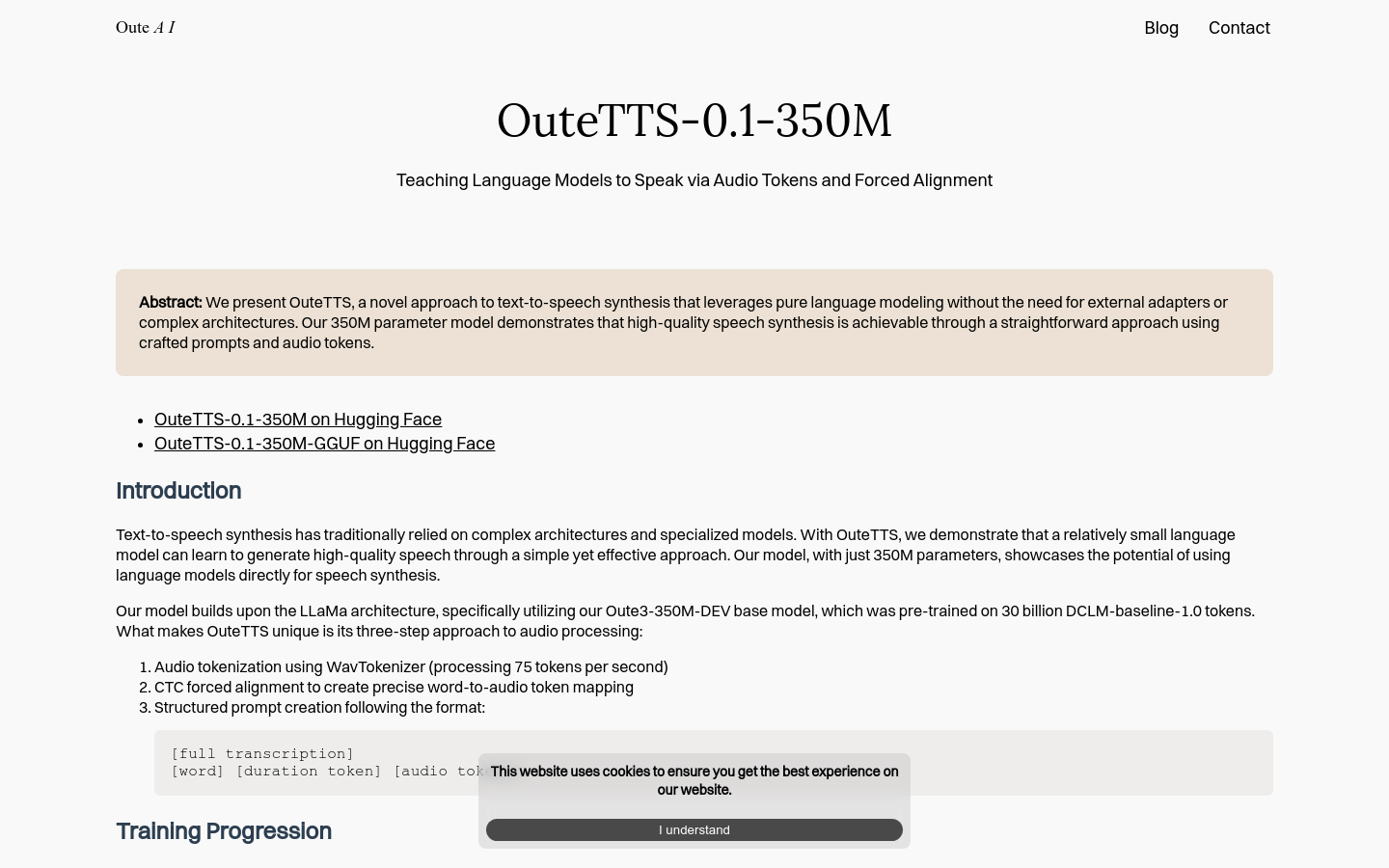

OuteTTS-0.1-350M is a text-to-speech model built on a pure language modeling approach. It needs no external adapters or complex architectures; instead, it achieves high-quality speech synthesis through carefully designed prompts and audio tokens. The model is based on the LLaMA architecture and has 350M parameters, demonstrating the potential of using a language model directly for speech synthesis. It processes audio in three steps: audio tokenization with WavTokenizer, CTC forced alignment to create a precise word-to-audio-token mapping, and construction of structured prompts that follow a specific format. Key advantages of OuteTTS include its pure language modeling approach, voice cloning capabilities, and compatibility with llama.cpp and the GGUF format.

Target audience:

The target audience is developers and enterprises that need high-quality speech synthesis, for example for voice assistants, audiobook production, or automated news broadcasting. With its pure language model approach, OuteTTS-0.1-350M simplifies the speech synthesis pipeline and lowers the technical barrier and cost, enabling more developers and enterprises to adopt the technology to improve production efficiency and user experience.

Example usage scenarios:

Developers use OuteTTS-0.1-350M to provide natural and smooth voice output for voice assistants.

Audiobook producers utilize this model to convert text content into high-quality audiobooks.

News organizations use OuteTTS-0.1-350M to automatically convert press releases into spoken news broadcasts.

Product features:

Pure language modeling approach to text-to-speech synthesis

Voice cloning capability to create speech output with specific vocal characteristics

Based on the LLaMA architecture, with 350M parameters

Compatible with llama.cpp and the GGUF format for easy integration and use

Accurate speech synthesis through audio tokenization and CTC forced alignment

Structured prompt creation to improve speech synthesis accuracy and naturalness

Efficient synthesis of shorter sentences; longer texts need to be split into segments and processed separately
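Because longer texts have to be segmented before synthesis, a small helper can split the input at sentence boundaries. The sketch below is only one possible way to do this; the function name `split_into_chunks` and the default `max_chars=200` limit are illustrative assumptions and are not part of OuteTTS.

```python
import re


def split_into_chunks(text, max_chars=200):
    """Group sentences into chunks of at most max_chars characters.

    Hypothetical helper, not part of OuteTTS. A single sentence longer
    than max_chars is kept whole rather than cut mid-sentence.
    """
    # Split after sentence-ending punctuation followed by whitespace.
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]

    chunks = []
    current = ""
    for sentence in sentences:
        candidate = f"{current} {sentence}".strip()
        if current and len(candidate) > max_chars:
            # Adding this sentence would exceed the limit: close the chunk.
            chunks.append(current)
            current = sentence
        else:
            current = candidate
    if current:
        chunks.append(current)
    return chunks
```

Each returned chunk can then be synthesized on its own and the resulting audio clips joined in order.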

Usage tutorial:

1. Install OuteTTS: Install the `outetts` library with pip.

2. Initialize the interface: Choose either the Hugging Face model or the GGUF model, and initialize the corresponding interface.

3. Generate speech: Provide the text, set generation parameters such as temperature and repetition penalty, and call the interface to generate speech.

4. Play the audio: Use the interface's playback function to play the generated speech directly.

5. Save the audio: Save the generated speech to a file, for example in WAV format.

6. Clone a voice: Create a custom speaker and use it to generate speech in that voice.
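The steps above can be sketched roughly as follows. This is a minimal sketch, assuming `pip install outetts` and the interface of the 0.1 release as shown on the model card (`InterfaceHF`, `generate`, `save`, `create_speaker`, and the parameter spelling `max_lenght`); later releases of the library may use a different interface, so check the version you have installed. The wrapper name `synthesize_to_wav`, the `GEN_PARAMS` values, and the file paths are illustrative.

```python
# Generation settings mentioned in the tutorial; the values are illustrative.
GEN_PARAMS = {"temperature": 0.1, "repetition_penalty": 1.1}


def synthesize_to_wav(text, wav_path, model_id="OuteAI/OuteTTS-0.1-350M",
                      reference_wav=None, reference_text=None):
    """Generate speech for `text` and save it to `wav_path`.

    Hypothetical wrapper around the outetts 0.1 interface (assumed API).
    """
    # Imported lazily so this module loads even without outetts installed.
    from outetts.v0_1.interface import InterfaceHF

    # Step 2: initialize the interface with the Hugging Face model.
    # (The 0.1 release also provides InterfaceGGUF for a local GGUF file.)
    interface = InterfaceHF(model_id)

    kwargs = dict(GEN_PARAMS)
    if reference_wav and reference_text:
        # Step 6: build a custom speaker from a reference recording and
        # its transcript, then generate speech in that voice.
        kwargs["speaker"] = interface.create_speaker(reference_wav, reference_text)

    # Step 3: generate speech. "max_lenght" is spelled this way in the
    # 0.1 release as recalled from the model card.
    output = interface.generate(text=text, max_lenght=4096, **kwargs)

    # Step 4 would be output.play(); step 5 saves the audio to a file.
    output.save(wav_path)
    return wav_path


# Example call (downloads the model on first use):
# synthesize_to_wav("Hello, am I working?", "output.wav")
```

Without a speaker reference, the model chooses the voice characteristics itself; passing `reference_wav` and `reference_text` switches the same call to voice cloning.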