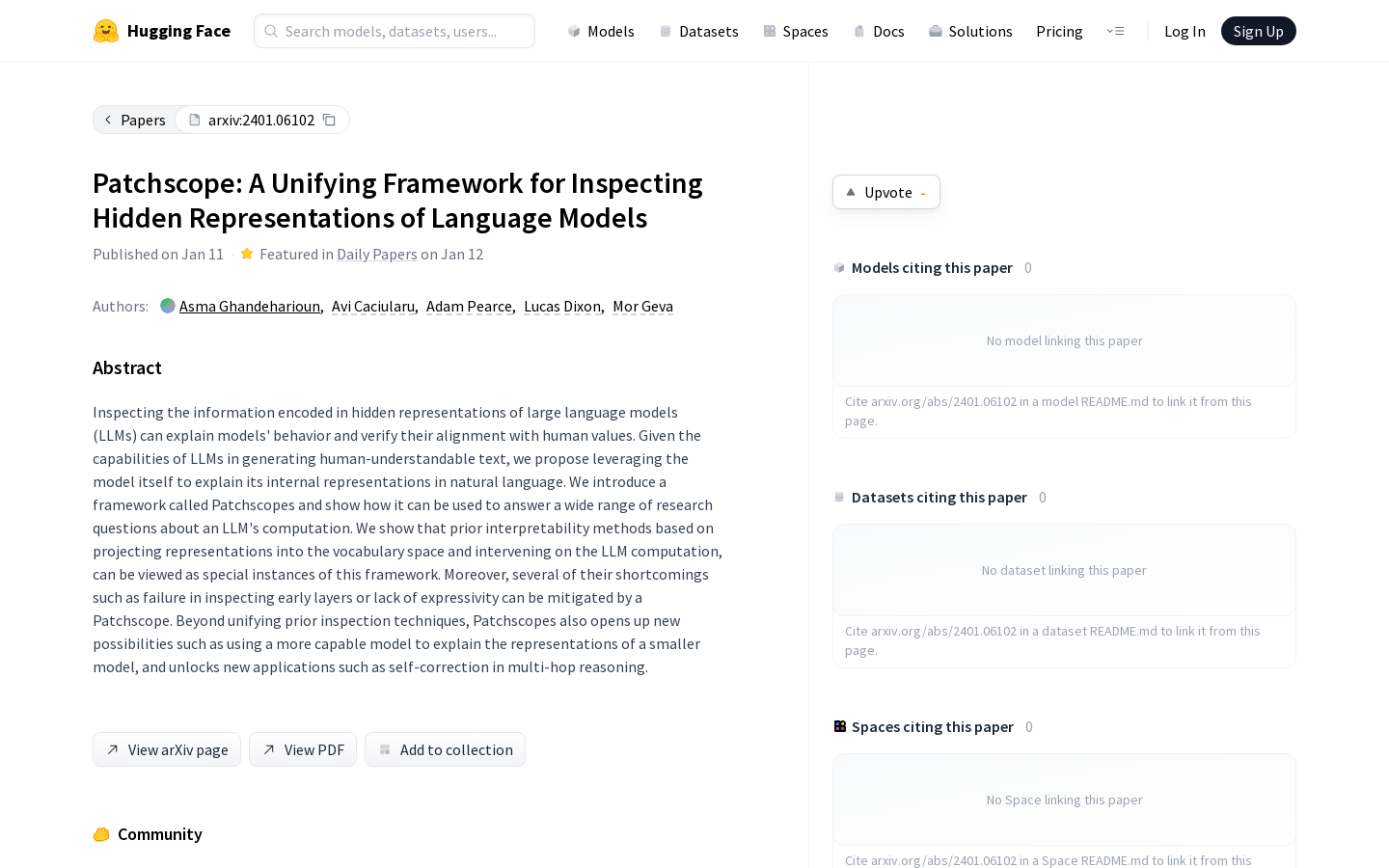

Patchscope is a unified framework for inspecting the hidden representations of large language models (LLMs). Inspecting these representations can help explain model behavior and verify its alignment with human values. Because LLMs can generate human-understandable text, the framework uses the model itself to explain its internal representations in natural language. The Patchscope framework can be used to answer a wide range of research questions about LLM computation. Earlier interpretability methods based on projecting representations into vocabulary space or intervening in the LLM's computation can be viewed as special cases of this framework. In addition, Patchscope opens up new possibilities, such as using a more capable model to interpret the representations of a smaller one, and unlocks new applications such as self-correction in multi-hop reasoning.
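The core idea of reading a hidden representation by letting a model continue from it can be illustrated with a toy sketch. This is not the Patchscope implementation: the "model" here is a hypothetical stack of simple mixing layers written in plain Python, and the names `toy_layer`, `forward`, and `patchscope` are invented for illustration. The sketch copies one hidden state from a source run into a chosen layer and position of a target run, then lets the target run finish. In a real LLM the final state would then be decoded into text.

```python
from typing import Callable, List, Optional, Tuple

Vector = List[float]
Patch = Tuple[int, int, Vector]  # (layer index, position, replacement vector)


def toy_layer(states: List[Vector], scale: float) -> List[Vector]:
    """Hypothetical layer: each position adds the mean of itself and earlier positions."""
    out = []
    for i, h in enumerate(states):
        context = states[: i + 1]
        mean = [sum(col) / len(context) for col in zip(*context)]
        out.append([scale * (a + b) for a, b in zip(h, mean)])
    return out


def forward(embeddings: List[Vector], scales: List[float],
            patch: Optional[Patch] = None) -> List[List[Vector]]:
    """Run every layer and return the hidden states recorded after each one.

    If `patch` is given, the hidden state at that layer and position is
    overwritten before the remaining layers run.
    """
    states = [list(v) for v in embeddings]
    history = []
    for layer_index, scale in enumerate(scales):
        states = toy_layer(states, scale)
        if patch is not None and patch[0] == layer_index:
            _, position, vector = patch
            states[position] = list(vector)
        history.append([list(v) for v in states])
    return history


def patchscope(source: List[Vector], target: List[Vector], scales: List[float],
               source_layer: int, source_pos: int,
               target_layer: int, target_pos: int,
               mapping: Callable[[Vector], Vector] = lambda v: v) -> List[Vector]:
    """Copy one hidden state from the source run into the target run.

    `mapping` is an optional transformation applied to the copied vector
    (identity by default). Returns the target run's final-layer states.
    """
    hidden = forward(source, scales)[source_layer][source_pos]
    patched_run = forward(target, scales,
                          patch=(target_layer, target_pos, mapping(hidden)))
    return patched_run[-1]


scales = [1.0, 0.5]                      # two toy layers
source = [[1.0, 0.0], [0.0, 1.0]]        # stand-in for source prompt embeddings
target = [[0.0, 0.0], [2.0, 2.0]]        # stand-in for target prompt embeddings

baseline = forward(target, scales)[-1]
patched = patchscope(source, target, scales,
                     source_layer=0, source_pos=1,
                     target_layer=0, target_pos=1)
print("unpatched target output:", baseline)
print("patched target output:  ", patched)
```

Here the same toy model plays both roles. Using a different, more capable model for the target run would correspond to the cross-model setting described above, with `mapping` standing in for whatever transformation aligns the two representation spaces.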

Target audience:

"Patchscope can be used to study the inner workings of large language models, verify their alignment with human values, and answer research questions about LLM computation."

Example usage scenarios:

Analyze text generated by large language models

Verify that a language model conforms to specific values

Investigate the internal representations behind a language model's computations

Product features:

Interpret internal representations of large language models

Verify model alignment with human values

Answer research questions about LLM computation