Phi-4-multimodal-instruct is a multimodal foundation model developed by Microsoft that accepts text, image and audio input and generates text output. It builds on the research and datasets behind the Phi-3.5 and Phi-4 models. It was refined through supervised fine-tuning, direct preference optimization and reinforcement learning from human feedback (RLHF) to improve instruction adherence and safety. The model handles input in multiple languages and has a 128K-token context length. It suits multimodal tasks such as speech recognition, speech translation and visual question answering. Its multimodal performance is particularly strong on speech and vision tasks, which makes it a practical base for developers building multimodal applications.

Target audience:

This model is suited to developers and researchers who need multimodal processing. It can be used to build multilingual, multimodal AI applications such as voice assistants, visual question answering systems and multimodal content generation tools. It handles complex multimodal tasks and is particularly well suited to scenarios with demanding performance and safety requirements.

Example usage scenarios:

Voice assistants that offer users multilingual speech translation and spoken Q&A.

Education, helping students learn mathematics and science through image and voice input.

Content creation, generating text descriptions from image or audio input.

Product Features:

Accepts text, image and audio input and generates text output

Multilingual support for text (e.g., English, Chinese, French) and audio (e.g., English, Chinese, German)

Strong automatic speech recognition and speech translation, reported to outperform existing specialist models on these tasks

Handles multiple image inputs and supports tasks such as visual question answering and chart comprehension

Supports speech summarization and spoken question answering
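Multi-image and audio inputs are referenced from the prompt text through placeholders. The sketch below assumes the chat format shown on the Hugging Face model card: `<|user|>`, `<|end|>` and `<|assistant|>` tags, plus numbered `<|image_N|>` and `<|audio_N|>` placeholders. `build_prompt` is a hypothetical helper and is not part of any library. Check the model card for the current format before relying on it.

```python
# Sketch: build a single-turn Phi-4-multimodal-instruct prompt string.
# Assumption: tag and placeholder names follow the model card's chat format.
# build_prompt is a hypothetical helper, not a library function.

USER, END, ASSISTANT = "<|user|>", "<|end|>", "<|assistant|>"


def build_prompt(question: str, num_images: int = 0, num_audios: int = 0) -> str:
    """Return a prompt with one numbered placeholder per image/audio input."""
    if num_images < 0 or num_audios < 0:
        raise ValueError("input counts must be non-negative")
    # Placeholders are 1-indexed; their order should match the order of the
    # images/audio clips handed to the processor together with this text.
    images = "".join(f"<|image_{i}|>" for i in range(1, num_images + 1))
    audios = "".join(f"<|audio_{i}|>" for i in range(1, num_audios + 1))
    return f"{USER}{images}{audios}{question}{END}{ASSISTANT}"


if __name__ == "__main__":
    # Chart comprehension over two images
    print(build_prompt("Compare the trends in these two charts.", num_images=2))
    # Speech recognition on one audio clip
    print(build_prompt("Transcribe the audio to text.", num_audios=1))
```

Keeping prompt construction in one small function makes it easy to check that the number of placeholders matches the number of inputs. A mismatch between the two is a likely source of processor errors.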

How to use:

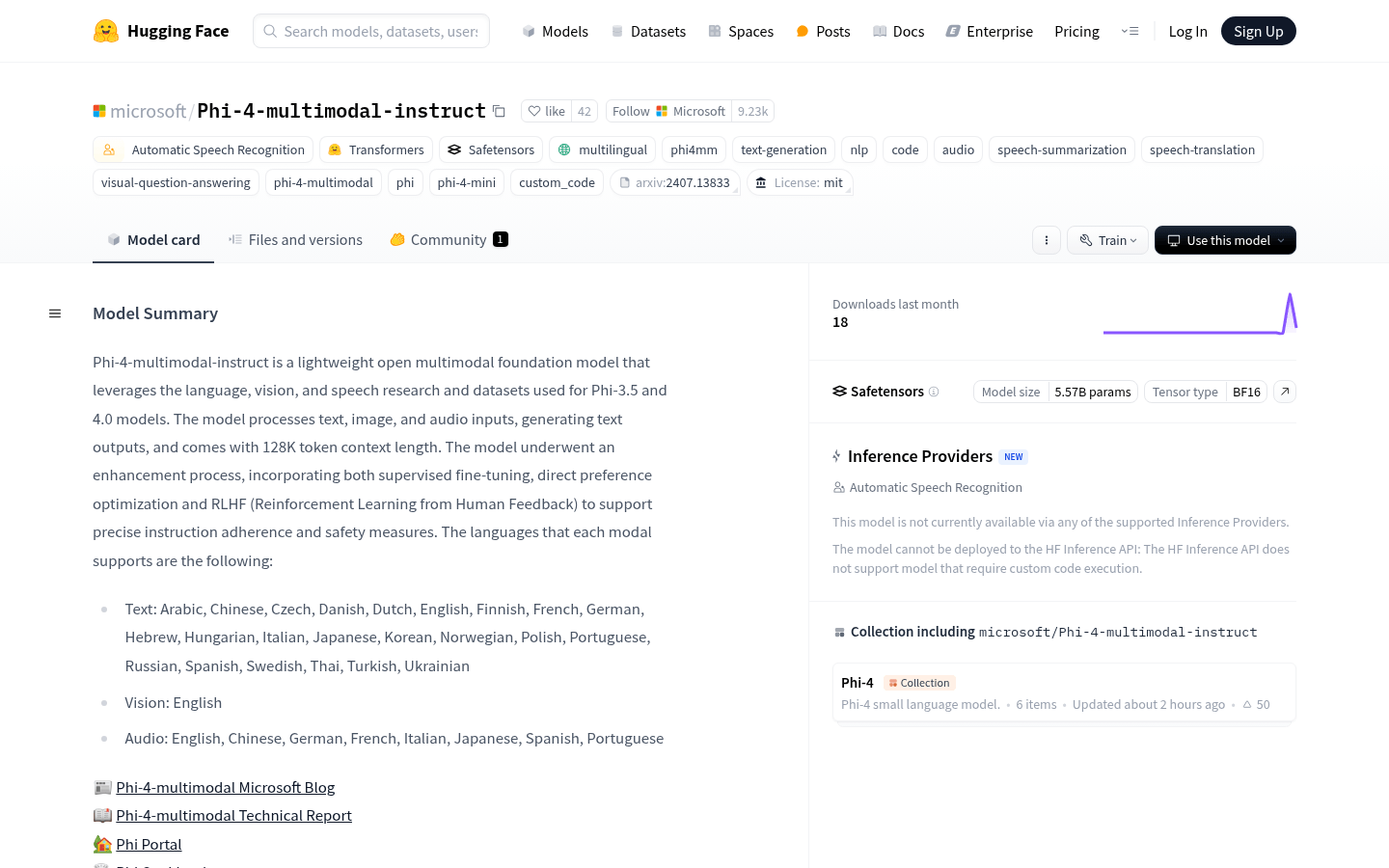

1. Visit the Hugging Face website and open the Phi-4-multimodal-instruct model page (microsoft/Phi-4-multimodal-instruct).

2. Choose the input format (text, image or audio) that fits your task.

3. Run inference either through a hosted API or by loading the model locally.

4. For image input, convert the image to a supported format and upload it.

5. For audio input, make sure the audio file meets the model's format requirements, and state the task (such as speech recognition or translation) in the prompt.

6. Provide a text prompt (such as a question or instruction); the model generates a text response.

7. Post-process the output or pass it on to your application as needed.
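Steps 3 to 6 can be sketched for local inference with Hugging Face `transformers` as follows. This is a minimal sketch, assuming the loading pattern from the model card: `AutoProcessor` and `AutoModelForCausalLM` with `trust_remote_code=True`, and a processor that accepts `images=`. The function names are hypothetical. The model card's own example passes further settings, such as an attention implementation and a `GenerationConfig`, so consult it for the exact, current arguments.

```python
# Sketch of steps 3-6: load Phi-4-multimodal-instruct locally and ask a
# question about one image. Assumptions (verify against the model card):
# the model id, the AutoProcessor/AutoModelForCausalLM loading pattern with
# trust_remote_code=True, and the processor's `images=` keyword.
# Function names are hypothetical. Heavy imports happen inside
# run_image_question, so importing this module stays cheap.

MODEL_ID = "microsoft/Phi-4-multimodal-instruct"


def image_question_prompt(question: str) -> str:
    """Single-image prompt in the (assumed) model-card chat format."""
    return f"<|user|><|image_1|>{question}<|end|><|assistant|>"


def run_image_question(image_path: str, question: str, max_new_tokens: int = 256) -> str:
    from PIL import Image
    from transformers import AutoModelForCausalLM, AutoProcessor

    # Step 3: load processor and model locally (downloads weights on first use).
    # device_map="auto" requires the accelerate package.
    processor = AutoProcessor.from_pretrained(MODEL_ID, trust_remote_code=True)
    model = AutoModelForCausalLM.from_pretrained(
        MODEL_ID, torch_dtype="auto", device_map="auto", trust_remote_code=True
    )

    # Step 4: open the image and normalize it to RGB
    image = Image.open(image_path).convert("RGB")

    # Step 6: tokenize prompt + image, then move tensors to the model's device
    inputs = processor(
        text=image_question_prompt(question), images=image, return_tensors="pt"
    ).to(model.device)

    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    # Drop the echoed prompt tokens so only the generated answer is decoded
    new_tokens = output_ids[:, inputs["input_ids"].shape[1]:]
    return processor.batch_decode(new_tokens, skip_special_tokens=True)[0]
```

A call such as `run_image_question("chart.png", "What trend does this chart show?")` (the file name is a placeholder) would return the answer as a string. For step 5, the model card passes audio through the same processor as `audios=[(array, sample_rate)]` with an `<|audio_1|>` placeholder in the prompt.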