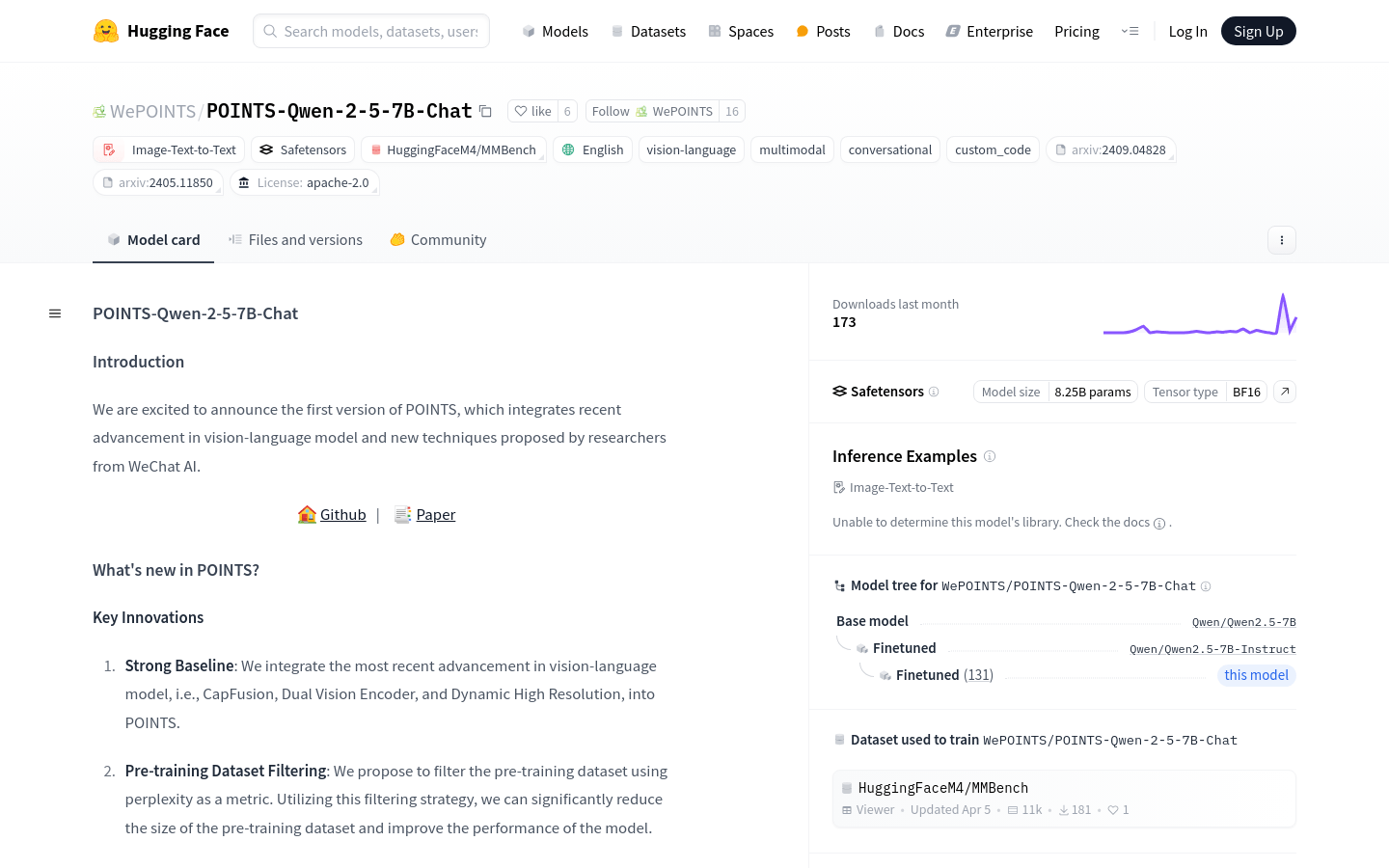

POINTS-Qwen-2-5-7B-Chat is a vision-language model proposed by researchers at WeChat AI that integrates recent advances and techniques in vision-language modeling. It improves model performance through techniques such as pre-training dataset filtering and model soup. The model performs well on multiple benchmarks and is a notable advance in the field of vision-language models.

Target audience:

The target audience is researchers, developers, and enterprise users who need advanced vision-language models to process image and text data and improve the intelligent interaction capabilities of their products. Thanks to its high performance and ease of use, POINTS-Qwen-2-5-7B-Chat is particularly suitable for AI projects that process large amounts of vision-language data.

Example usage scenarios:

Using the model to describe image details, such as landscapes, people, or objects.

In education, recognizing and describing images to assist teaching.

In business, recognizing images and responding to customers in customer service.

Product Features:

Integrates recent vision-language modeling techniques such as CapFusion, Dual Vision Encoder, and Dynamic High Resolution.

Uses perplexity as the metric for filtering the pre-training dataset, which reduces the dataset size while improving model performance.

Uses model soup to merge models fine-tuned on different visual instruction-tuning datasets, further improving performance.

Performs well on multiple benchmarks, such as MMBench-dev-en and MathVista.

Supports multimodal input and dialogue, making it suitable for image-text-to-text tasks.

The model has 8.25B parameters and uses the BF16 tensor type.

Provides detailed usage examples and community discussions to help users learn and exchange ideas.
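The two training techniques listed above, perplexity-based data filtering and model soup, can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not WeChat AI's implementation: it assumes per-token log-probabilities have already been computed for each pre-training sample by some scoring model, that the lowest-perplexity samples are the ones kept, and that the soup is a uniform parameter average (one common model-soup variant; the exact variant used for POINTS is not stated here). The function names and the 20% default keep fraction are hypothetical, and parameters are plain lists of floats so the sketch stays self-contained.

```python
import math
from typing import Dict, List, Sequence


def perplexity(token_log_probs: Sequence[float]) -> float:
    """Perplexity = exp(mean negative log-likelihood per token)."""
    if not token_log_probs:
        raise ValueError("need at least one token")
    mean_nll = -sum(token_log_probs) / len(token_log_probs)
    return math.exp(mean_nll)


def filter_by_perplexity(
    samples: Dict[str, Sequence[float]], keep_fraction: float = 0.2
) -> List[str]:
    """Return the ids of the keep_fraction of samples with the LOWEST perplexity.

    Assumption: low perplexity means "keep"; the 0.2 default is illustrative.
    """
    ranked = sorted(samples, key=lambda sid: perplexity(samples[sid]))
    n_keep = max(1, int(len(ranked) * keep_fraction))
    return ranked[:n_keep]


def uniform_soup(state_dicts: List[Dict[str, List[float]]]) -> Dict[str, List[float]]:
    """Average matching parameters across fine-tuned models (uniform model soup).

    Assumes every model has the same parameter names and shapes.
    """
    if not state_dicts:
        raise ValueError("need at least one model")
    n = len(state_dicts)
    soup: Dict[str, List[float]] = {}
    for name in state_dicts[0]:
        # zip(*) groups the i-th value of this parameter from every model.
        per_position = zip(*(sd[name] for sd in state_dicts))
        soup[name] = [sum(values) / n for values in per_position]
    return soup
```

With PyTorch checkpoints the same averaging would be applied to `state_dict` tensors instead of lists. A greedy soup, which adds a model to the average only when it improves a held-out score, is a common alternative to the uniform average shown here.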

Usage tutorial:

1. Import the necessary libraries and modules, including transformers, PIL, and torch.

2. Specify the image URL and fetch the image data with requests.

3. Open the image data with PIL and prepare the prompt text.

4. Specify the model path and load the tokenizer and model from the pretrained checkpoint.

5. Set up the image processor and the generation configuration, including the maximum number of new tokens, temperature, and top_p.

6. Call the model.chat method, passing in the image, the prompt text, the tokenizer, the image processor, and the other parameters, to interact with the model.

7. Print the model's response.
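The steps above can be sketched in Python as follows. This is a hedged sketch, not the official example: the Hugging Face repo id `WePOINTS/POINTS-Qwen-2-5-7B-Chat`, the use of `CLIPImageProcessor`, the generation values, and the trailing boolean argument to `model.chat` are assumptions. `model.chat` is defined by the model's own remote code (loaded via `trust_remote_code=True`), not by transformers, so check the model card for its exact signature. The heavy imports sit inside the functions so the generation-config helper can be used without downloading the model; `build_generation_config`, `load_image`, and `run_chat` are hypothetical helper names.

```python
from io import BytesIO
from typing import Any, Dict

# Assumed Hugging Face repo id; verify against the model card.
MODEL_PATH = "WePOINTS/POINTS-Qwen-2-5-7B-Chat"


def build_generation_config(
    max_new_tokens: int = 1024, temperature: float = 0.0, top_p: float = 0.0
) -> Dict[str, Any]:
    """Step 5: generation settings handed to model.chat (illustrative values)."""
    return {
        "max_new_tokens": max_new_tokens,
        "temperature": temperature,
        "top_p": top_p,
        "num_beams": 1,
    }


def load_image(url: str):
    """Steps 2-3: download the image with requests and open it with PIL."""
    import requests
    from PIL import Image

    resp = requests.get(url, timeout=30)
    resp.raise_for_status()
    return Image.open(BytesIO(resp.content)).convert("RGB")


def run_chat(image_url: str, prompt: str = "Please describe the image in detail.") -> str:
    """Steps 1 and 4-6: load tokenizer, model, and processor, then call model.chat."""
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer, CLIPImageProcessor

    image = load_image(image_url)
    tokenizer = AutoTokenizer.from_pretrained(MODEL_PATH, trust_remote_code=True)
    model = AutoModelForCausalLM.from_pretrained(
        MODEL_PATH,
        trust_remote_code=True,
        torch_dtype=torch.bfloat16,  # the card lists BF16 weights
        device_map="cuda",
    )
    # Assumption: the checkpoint ships a CLIP-style image processor config.
    image_processor = CLIPImageProcessor.from_pretrained(MODEL_PATH)
    # Assumption: model.chat accepts a PIL image, and the positional True toggles
    # dynamic high-resolution cropping; confirm both on the model card.
    return model.chat(
        image, prompt, tokenizer, image_processor, True, build_generation_config()
    )
```

On a machine with a CUDA GPU and network access, `print(run_chat(url))` covers step 7, where `url` is any reachable image URL.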