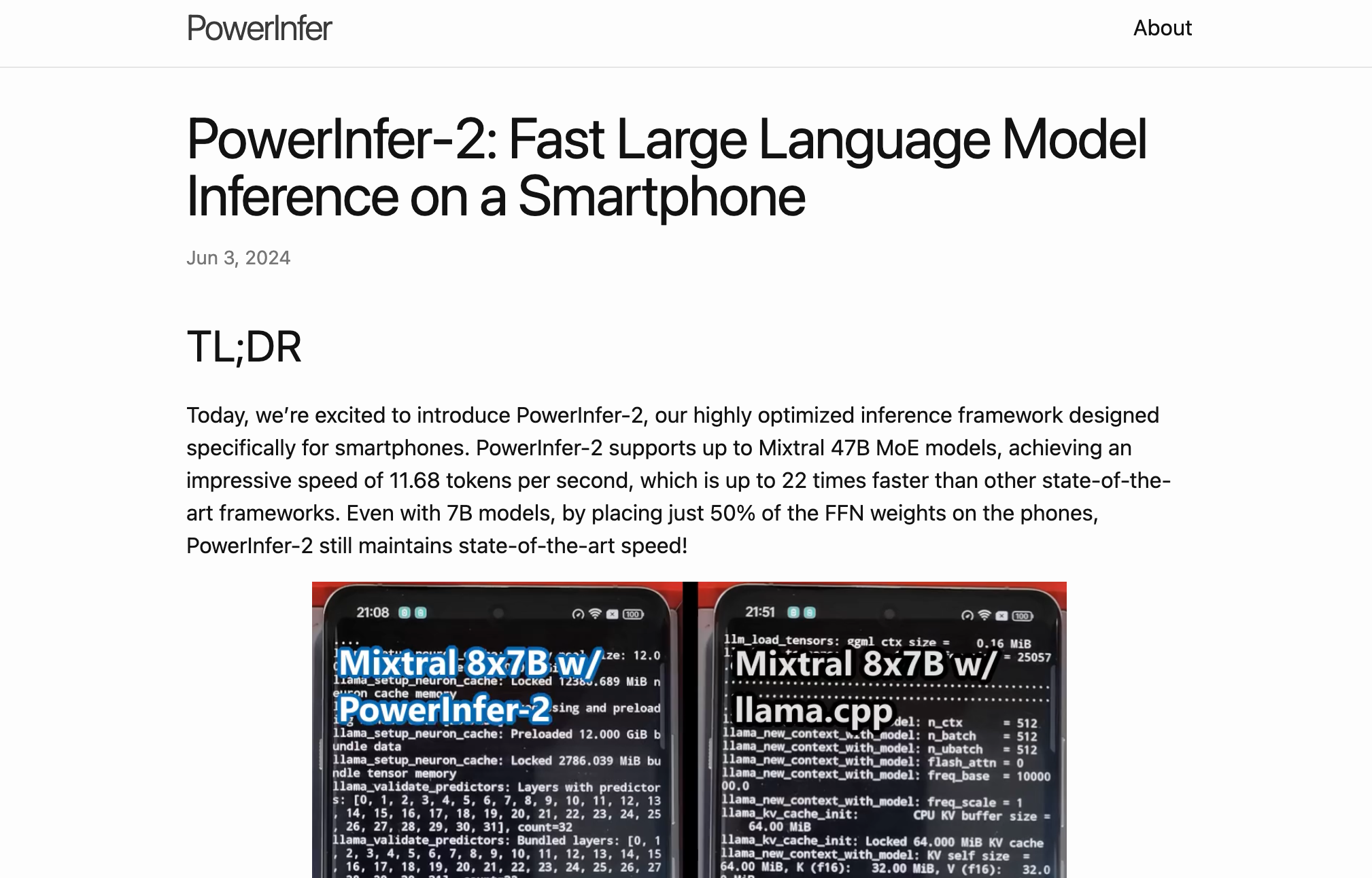

PowerInfer-2 is an inference framework optimized for smartphones. It supports MoE models with up to 47B parameters and reaches an inference speed of 11.68 tokens per second, a reported 22 times faster than other frameworks. It combines heterogeneous computing with an I/O-Compute pipeline to significantly reduce memory usage and increase inference speed.

Target audience

Developers and enterprises who need to deploy large language models on mobile devices. They can use PowerInfer-2's high-speed on-device inference to build mobile applications with better performance and stronger data privacy protection.

Usage scenario examples

Mobile application developers use PowerInfer-2 to deploy personalized recommendation systems on smartphones.

Enterprises leverage PowerInfer-2 to automate customer service on mobile devices.

Research institutions use PowerInfer-2 for real-time language translation and interaction on mobile devices.

Product features

Supports MoE models with up to 47B parameters.

Achieves an inference speed of 11.68 tokens per second.

Optimizes for heterogeneous computing and dynamically adjusts the size of computing units.

I/O-Compute pipeline technology maximizes the overlap between data loading and calculation.

Significantly reduces memory usage and increases inference speed.

Designed for smartphones, improving both data privacy and performance.

The model and the system are co-designed to ensure predictable model sparsity.
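
The idea behind overlapping data loading with calculation can be illustrated with a generic prefetch pattern. The sketch below is not PowerInfer-2's implementation or API; `pipelined_run`, `fake_load`, and `fake_compute` are hypothetical names, and the sleeps stand in for storage reads and computation. A background thread loads the next chunk of weights while the main thread computes on the current one.

```python
import queue
import threading
import time


def pipelined_run(chunk_ids, load_chunk, compute_chunk, prefetch_depth=2):
    """Compute on chunk i while a background thread loads later chunks."""
    buffer = queue.Queue(maxsize=prefetch_depth)  # bounds memory held by prefetched chunks
    sentinel = object()

    def loader():
        try:
            for chunk_id in chunk_ids:
                buffer.put(load_chunk(chunk_id))  # blocks while the buffer is full
        finally:
            buffer.put(sentinel)  # always signal the end, even if loading fails

    thread = threading.Thread(target=loader, daemon=True)
    thread.start()

    results = []
    while True:
        item = buffer.get()
        if item is sentinel:
            break
        results.append(compute_chunk(item))
    thread.join()
    return results


def fake_load(chunk_id):
    """Hypothetical stand-in for reading one chunk of weights from storage."""
    time.sleep(0.01)
    return [chunk_id] * 4


def fake_compute(weights):
    """Hypothetical stand-in for the computation on one loaded chunk."""
    time.sleep(0.01)
    return sum(weights)


results = pipelined_run(range(5), fake_load, fake_compute)
```

The `prefetch_depth` bound caps how many loaded chunks wait in memory at once, which is the kind of trade-off between memory usage and overlap that matters on a phone.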

Tutorial

1. Visit the official website of PowerInfer-2 and download the framework.

2. Follow the documentation to integrate PowerInfer-2 into your mobile application project.

3. Select a suitable model and configure model parameters to ensure the sparsity of the model.

4. Use the PowerInfer-2 API to run model inference, tuning for inference speed and memory usage.

5. Test inference on mobile devices to verify application performance and user experience.

6. Make adjustments based on feedback to optimize the model deployment and inference process.
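
For the testing step, a tokens-per-second figure is the simplest number to compare against the 11.68 tokens per second quoted above. The sketch below is a minimal timing harness under stated assumptions: `generate` is a hypothetical callable that wraps whatever inference call your integration exposes and returns the list of generated tokens. It is not part of the PowerInfer-2 API, and `stub_generate` only simulates one.

```python
import time


def measure_tokens_per_second(generate, prompt, max_new_tokens, runs=3):
    """Average generation rate over several runs of a caller-supplied function."""
    rates = []
    for _ in range(runs):
        start = time.perf_counter()
        tokens = generate(prompt, max_new_tokens)
        elapsed = time.perf_counter() - start
        if elapsed > 0:
            rates.append(len(tokens) / elapsed)
    return sum(rates) / len(rates) if rates else 0.0


def stub_generate(prompt, max_new_tokens):
    """Hypothetical stand-in for a real inference call; sleeps instead of decoding."""
    time.sleep(0.001 * max_new_tokens)
    return ["tok"] * max_new_tokens


rate = measure_tokens_per_second(stub_generate, "Hello", max_new_tokens=20)
print(f"{rate:.1f} tokens/s")
```

On a real device, run the measurement after a warm-up generation and with the same prompt and output length each time, so that results from before and after an adjustment are comparable.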