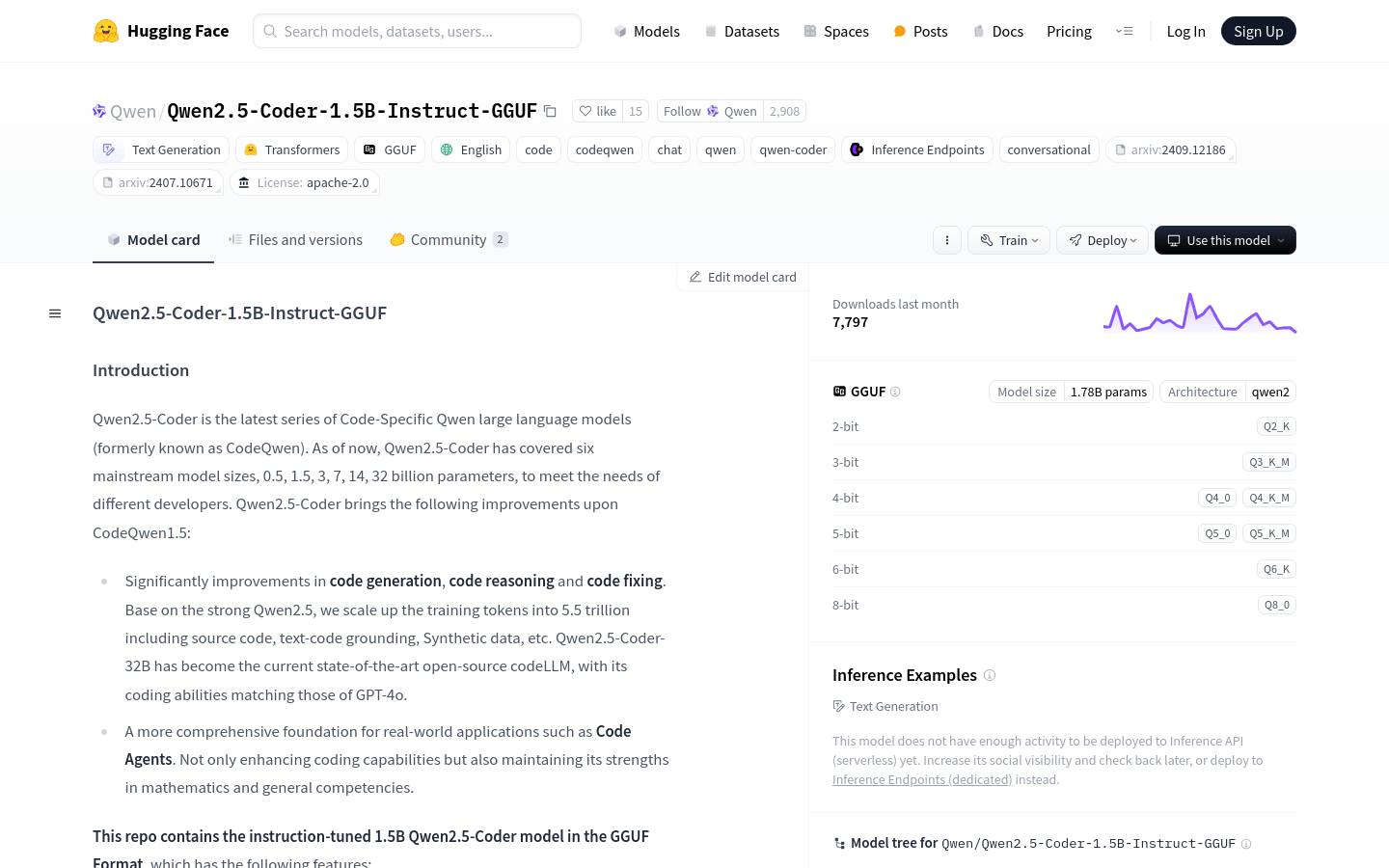

Qwen2.5-Coder is the latest series of Qwen's code-specific large language models, designed for code generation, code reasoning and code repair. It is built on the strong Qwen2.5 base and trained on 5.5 trillion tokens, including source code, text-code grounding data and synthetic data. The flagship Qwen2.5-Coder-32B has become the state-of-the-art open-source code LLM, with coding abilities matching those of GPT-4o. This model is the instruction-tuned 1.5B-parameter version in GGUF format. It is a causal language model, trained in pre-training and post-training stages, and uses a Transformer architecture.

Target audience:

The target audience is developers and programmers, especially those who need to quickly generate, understand and repair code in their projects. Qwen2.5-Coder's strong code generation and reasoning capabilities help developers improve productivity, reduce coding errors and speed up development.

Example usage scenarios:

Developers use Qwen2.5-Coder for automatic code completion to improve coding efficiency.

During code review, Qwen2.5-Coder is used to identify potential defects and errors in the code.

In an educational environment, Qwen2.5-Coder serves as a teaching tool to help students understand and learn programming concepts.

Product Features:

Code generation: Significantly improved code generation, driven by training on source code, text-code grounding data and synthetic data.

Code reasoning: Enhances the model's understanding of code logic and structure.

Code repair: Improves the model's ability to identify and fix errors and defects in code.

Comprehensive application: Provides a solid foundation for real-world applications such as code agents, enhancing coding capabilities while maintaining strengths in mathematics and general tasks.

Model parameters: 1.54B parameters in total (1.31B non-embedding), 28 layers, and grouped-query attention with 12 query (Q) heads and 2 key/value (KV) heads.
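The figures above imply a few derived quantities. The minimal Python sketch below computes how many query heads share each KV head and the embedding parameter count (total minus non-embedding). The hidden size of 1,536, the vocabulary size of 151,936 and the use of tied input/output embeddings are assumptions not stated in this listing; they are used only to cross-check the roughly 0.23B embedding figure.

```python
# Sketch: quantities derived from the listed architecture figures.
# ASSUMPTIONS (not from the listing): HIDDEN_SIZE, VOCAB_SIZE, and tied
# input/output embeddings, so the embedding matrix is counted once.

TOTAL_PARAMS = 1.54e9          # from the listing
NON_EMBEDDING_PARAMS = 1.31e9  # from the listing
NUM_Q_HEADS = 12               # from the listing
NUM_KV_HEADS = 2               # from the listing

HIDDEN_SIZE = 1536   # assumption
VOCAB_SIZE = 151936  # assumption


def gqa_group_size(num_q_heads: int, num_kv_heads: int) -> int:
    """Query heads that share one key/value head under grouped-query attention."""
    if num_q_heads % num_kv_heads != 0:
        raise ValueError("query heads must be a multiple of KV heads")
    return num_q_heads // num_kv_heads


def embedding_params_from_listing() -> float:
    """Embedding parameters implied by the listing: total minus non-embedding."""
    return TOTAL_PARAMS - NON_EMBEDDING_PARAMS


def embedding_params_from_shape(vocab_size: int, hidden_size: int) -> int:
    """Size of one vocab x hidden embedding matrix (assumes tied embeddings)."""
    return vocab_size * hidden_size


if __name__ == "__main__":
    print("query heads per KV head:", gqa_group_size(NUM_Q_HEADS, NUM_KV_HEADS))
    print(f"embedding params (listing): {embedding_params_from_listing() / 1e9:.2f}B")
    shape_est = embedding_params_from_shape(VOCAB_SIZE, HIDDEN_SIZE)
    print(f"embedding params (assumed shape): {shape_est / 1e9:.2f}B")
```

Under these assumptions both estimates come out at about 0.23B, and each KV head is shared by 6 query heads.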

Context length: Supports a full context window of 32,768 tokens, making it suitable for long-sequence processing.
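Having only 2 KV heads keeps the key/value cache small even at the full context length. The sketch below estimates KV cache memory from the listed layer and KV-head counts. The head dimension of 128 (an assumed hidden size of 1,536 divided by 12 query heads) and a 16-bit cache (2 bytes per element) are assumptions, and real runtimes allocate additional buffers on top of this.

```python
# Sketch: estimate key/value cache memory for a given number of tokens.
# ASSUMPTIONS: head_dim = 128 and a 16-bit (2-byte) cache; layer and KV-head
# counts default to the values in the listing (28 layers, 2 KV heads).


def kv_cache_bytes(
    num_tokens: int,
    num_layers: int = 28,
    num_kv_heads: int = 2,
    head_dim: int = 128,
    bytes_per_elem: int = 2,
) -> int:
    """Bytes needed to cache keys and values for num_tokens tokens."""
    # Factor 2: one tensor for keys and one for values, in every layer.
    per_token = 2 * num_layers * num_kv_heads * head_dim * bytes_per_elem
    return per_token * num_tokens


if __name__ == "__main__":
    mib = 1024 ** 2
    full = kv_cache_bytes(32_768)
    # Hypothetical comparison: the same model with one KV head per query head.
    mha = kv_cache_bytes(32_768, num_kv_heads=12)
    print(f"per token: {kv_cache_bytes(1)} bytes")
    print(f"32,768 tokens with 2 KV heads: {full / mib:.0f} MiB")
    print(f"32,768 tokens with 12 KV heads: {mha / mib:.0f} MiB")
```

Under these assumptions a full 32,768-token context needs about 896 MiB of cache, compared with about 5,376 MiB if every query head had its own KV head, a 6x saving.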

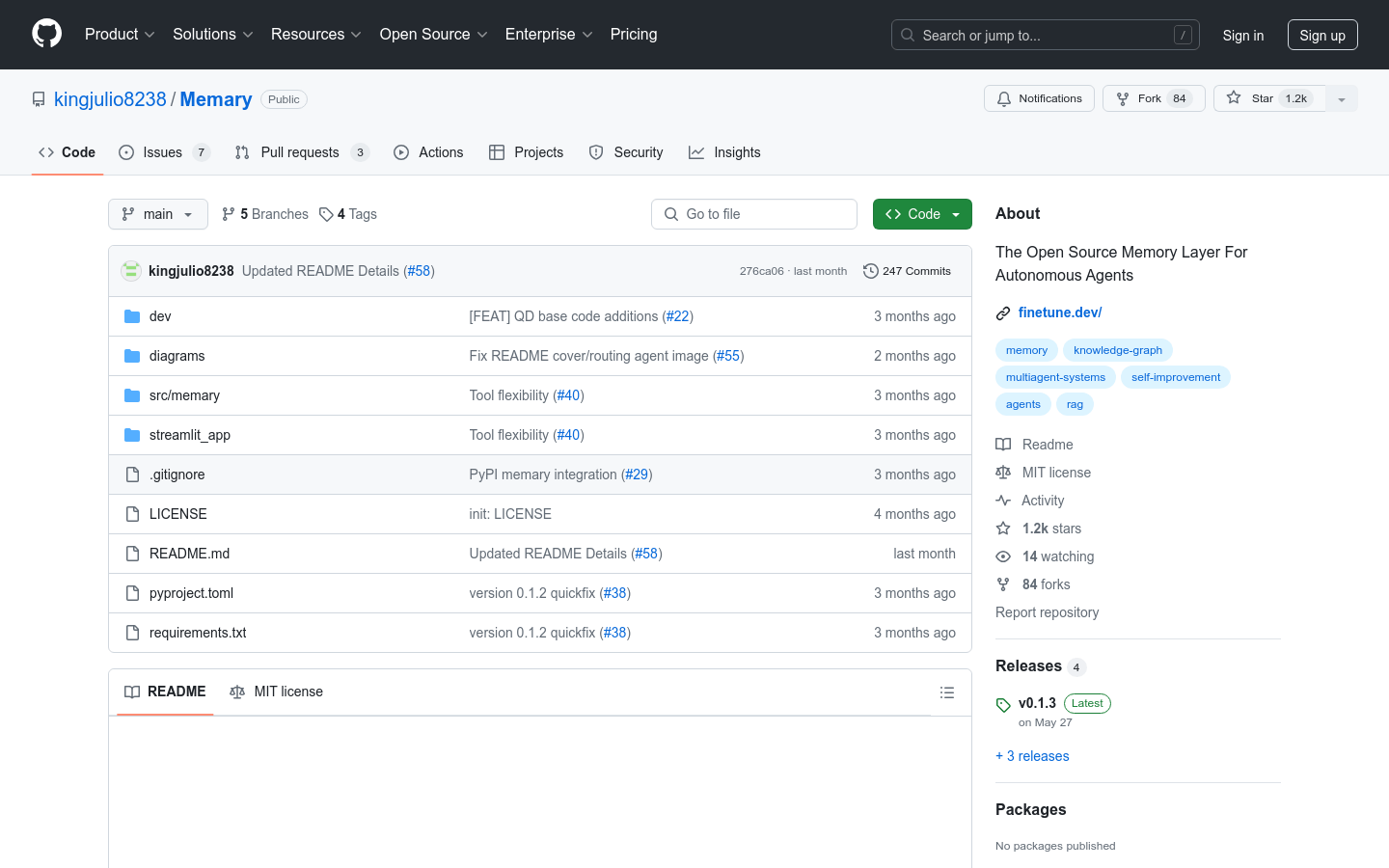

Quantization: Available at multiple quantization levels: q2_K, q3_K_M, q4_0, q4_K_M, q5_0, q5_K_M, q6_K and q8_0.
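Choosing a quantization level is mostly a trade between file size and quality. The rough sketch below estimates file size from bits per weight for the llama.cpp formats with a fixed block layout (q4_0, q5_0, q6_K, q8_0). Presets such as q4_K_M mix block types across tensors, so they have no single bits-per-weight value and are omitted. It assumes every weight uses the same format and ignores metadata; llama.cpp may keep some tensors at a different precision, so actual files will differ somewhat.

```python
# Sketch: rough GGUF file-size estimates from bits per weight.
# ASSUMPTION: all weights use one format and metadata overhead is ignored.

BITS_PER_WEIGHT = {
    "q4_0": 18 * 8 / 32,    # 32 weights: 2-byte scale + 16 bytes of 4-bit values
    "q5_0": 22 * 8 / 32,    # 32 weights: 2-byte scale + 4 bytes high bits + 16 bytes low bits
    "q6_K": 210 * 8 / 256,  # 256 weights: 128 + 64 bytes of bits, 16 scale bytes, 2-byte scale
    "q8_0": 34 * 8 / 32,    # 32 weights: 2-byte scale + 32 int8 values
    "f16": 16.0,            # unquantized half precision, for reference
}


def estimated_size_bytes(num_params: float, fmt: str) -> float:
    """Approximate storage for num_params weights stored in format fmt."""
    return num_params * BITS_PER_WEIGHT[fmt] / 8


if __name__ == "__main__":
    params = 1.54e9  # total parameter count from the listing
    for fmt, bpw in BITS_PER_WEIGHT.items():
        size_gb = estimated_size_bytes(params, fmt) / 1e9
        print(f"{fmt:5s} {bpw:7.4f} bpw  ~{size_gb:.2f} GB")
```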

Usage tutorial:

1. Install huggingface_hub and llama.cpp to download and run the model.

2. Use huggingface-cli to download the required GGUF file.

3. Install llama.cpp following the official guide, and make sure you use the latest version.

4. Launch the model with llama-cli, configuring it through the appropriate command-line parameters.

5. Run the model in conversation (chat) mode for an interactive, chatbot-like experience.

6. Adjust runtime parameters as needed to balance GPU memory usage and throughput for different usage scenarios.
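Steps 2, 4 and 5 can be sketched in Python. The repository id `Qwen/Qwen2.5-Coder-1.5B-Instruct-GGUF` and the lowercase file-name pattern are assumptions to verify on the model page, and the helper names are hypothetical. `hf_hub_download` is the huggingface_hub download function; `-m`, `-cnv`, `-c`, `-n` and `-ngl` are llama-cli options (model file, conversation mode, context size, tokens to generate, GPU-offloaded layers), but flag behavior can change between llama.cpp releases, so check `llama-cli --help`.

```python
# Sketch of the download-and-run workflow. Helper names are hypothetical.
# ASSUMPTIONS: the repo id and file-name pattern below; verify both on the
# Hugging Face model page before use.
import shlex

REPO_ID = "Qwen/Qwen2.5-Coder-1.5B-Instruct-GGUF"  # assumed repo id


def gguf_filename(quant: str) -> str:
    """Assumed file name for a quantization level such as 'q5_K_M'."""
    return f"qwen2.5-coder-1.5b-instruct-{quant.lower()}.gguf"


def download_gguf(quant: str, local_dir: str = ".") -> str:
    """Download one GGUF file and return its local path (needs network access)."""
    # Imported lazily so the rest of this sketch runs without huggingface_hub.
    from huggingface_hub import hf_hub_download

    return hf_hub_download(repo_id=REPO_ID, filename=gguf_filename(quant), local_dir=local_dir)


def llama_cli_args(model_path: str, n_gpu_layers: int = 0,
                   ctx_size: int = 32768, n_predict: int = 512) -> list[str]:
    """Build a llama-cli argument list for an interactive chat session."""
    return [
        "llama-cli",
        "-m", model_path,           # GGUF model file
        "-cnv",                     # conversation (chat) mode
        "-c", str(ctx_size),        # context window in tokens
        "-n", str(n_predict),       # limit on the number of tokens to generate
        "-ngl", str(n_gpu_layers),  # layers offloaded to the GPU (0 = CPU only)
    ]


if __name__ == "__main__":
    path = gguf_filename("q5_K_M")  # call download_gguf("q5_K_M") first to fetch it
    print(shlex.join(llama_cli_args(path, n_gpu_layers=28)))
```

For step 6, offloading more of the 28 layers with `-ngl` generally raises throughput at the cost of GPU memory, while a smaller `-c` reduces memory use when the full 32,768-token window is not needed.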