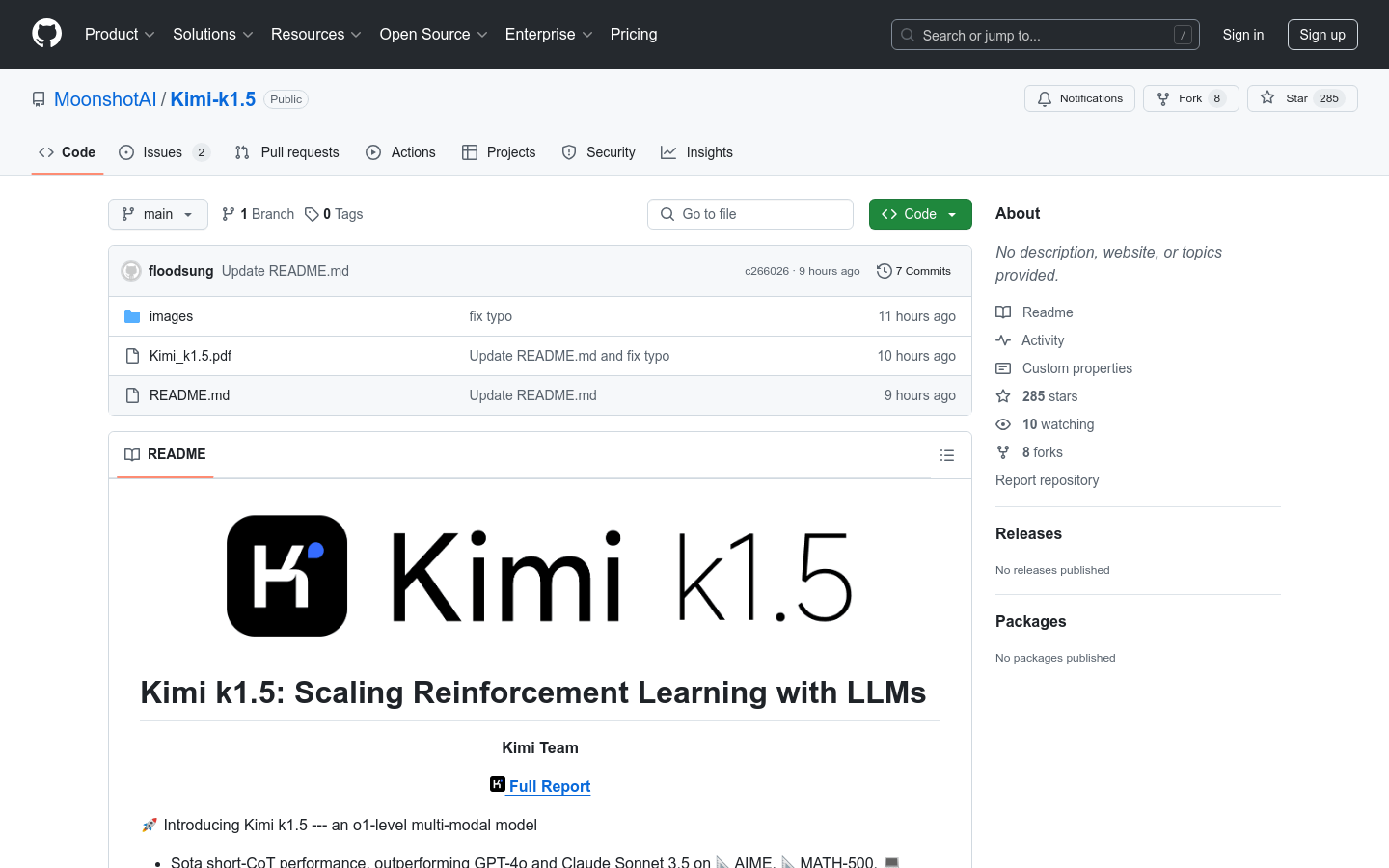

Qwen2.5-Coder-3B-Instruct-GPTQ-Int8 is a large language model in the Qwen2.5-Coder series, optimized for code generation, code reasoning, and code repair. It is built on Qwen2.5, and its training data, which includes source code, text-code grounding data, and synthetic data, totals 5.5 trillion tokens. Within the series, Qwen2.5-Coder-32B is reported to be the most advanced open-source code LLM, with coding capabilities matching those of GPT-4o. The series also provides a more comprehensive foundation for real-world applications such as code agents, improving coding capabilities while retaining strengths in mathematics and general tasks.

Target audience

The target audience is software developers, programming enthusiasts, and data scientists. The model suits them because it provides code assistance that can improve programming efficiency and code quality, and because it can process long code snippets, which makes it suitable for complex programming tasks.

Usage scenario examples

Developers: Use the model to generate code, such as a sorting algorithm.

Data scientists: Use the model for large-scale code analysis and optimization.

Educators: Integrate the model into programming courses to help students understand and learn code logic.

Product features

Code generation: Significantly improved code generation helps developers quickly implement code logic.

Code reasoning: A stronger understanding of code logic improves the accuracy of code analysis.

Code repair: Detects and repairs errors in code to improve code quality.

Full parameter coverage: The series provides model sizes from 0.5 billion to 32 billion parameters to meet different developer needs.

GPTQ quantization: The weights are quantized to 8 bits with GPTQ, which reduces memory usage compared with the unquantized model.

Long context support: supports context lengths up to 32768 tokens, suitable for processing long code fragments.

Language support: Mainly supports English, which suits international development environments.

Open source: The model is open source to facilitate community contribution and further research.
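To give a rough sense of what 8-bit quantization means for memory, the sketch below estimates the size of the weights alone at 16 and 8 bits per weight. It assumes a rounded count of 3 billion parameters and ignores quantization metadata (scales and zero points), any layers left unquantized, activations, and the KV cache, so real usage will be higher. The function name `weight_memory_gib` is made up for this illustration.

```python
def weight_memory_gib(num_params, bits_per_weight):
    """Approximate memory needed for the weights alone, in GiB."""
    bytes_total = num_params * bits_per_weight / 8
    return bytes_total / 2**30


# Assumption: a rounded, nominal parameter count for the 3B model.
PARAMS_3B = 3e9

fp16_gib = weight_memory_gib(PARAMS_3B, 16)
int8_gib = weight_memory_gib(PARAMS_3B, 8)

print(f"16-bit weights: ~{fp16_gib:.1f} GiB")
print(f"8-bit weights:  ~{int8_gib:.1f} GiB")
```

Under these assumptions the 8-bit weights take about half the space of 16-bit weights, which is the main practical benefit when fitting the model on a smaller GPU.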

Tutorial

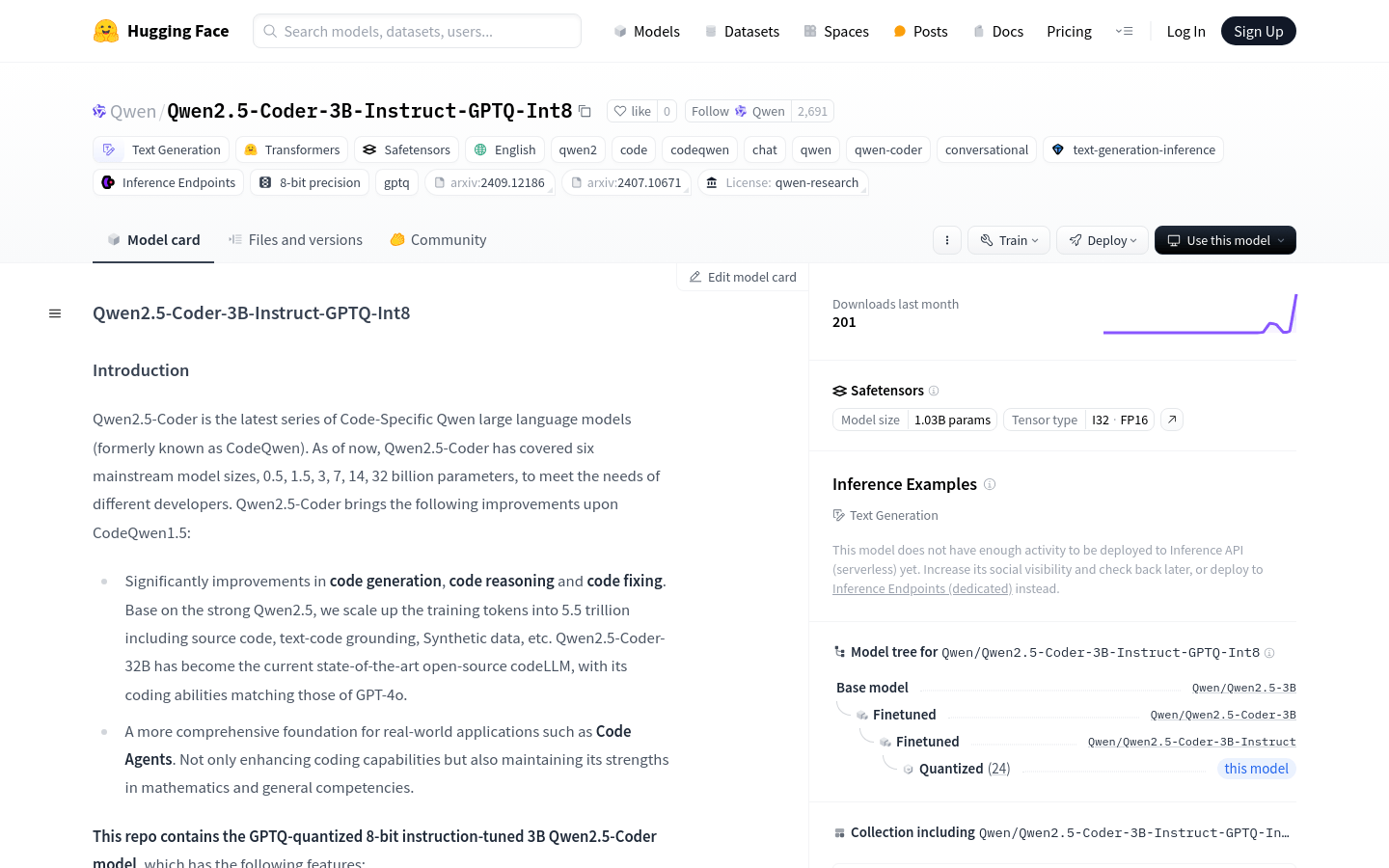

1. Install the Hugging Face transformers library and make sure the version is at least 4.37.0.

2. Use AutoModelForCausalLM and AutoTokenizer to load the model and tokenizer from Hugging Face Hub.

3. Prepare an input prompt, such as a request to write a quick sort algorithm.

4. Use the tokenizer.apply_chat_template method to process the input messages and generate the model input.

5. Pass the generated model input to the model and set the max_new_tokens parameter to control the length of the generated code.

6. After the model generates code, use the tokenizer.batch_decode method to convert the generated tokens into text.

7. Perform further testing and debugging of the generated code as needed.
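The steps above can be sketched in Python roughly as follows. This is a minimal sketch, not a definitive implementation. It makes several assumptions: the Hub repository id `Qwen/Qwen2.5-Coder-3B-Instruct-GPTQ-Int8`, a GPTQ backend for transformers already installed alongside PyTorch, the system prompt text, and `max_new_tokens=512`. The helper names `build_messages`, `generate_code`, and `extract_code_block` are hypothetical and exist only for this illustration.

```python
import re

# Assumption: the Hub repository id; check the model page before use.
MODEL_NAME = "Qwen/Qwen2.5-Coder-3B-Instruct-GPTQ-Int8"

# Assumption: an illustrative system prompt, not mandated by the tutorial.
SYSTEM_PROMPT = "You are Qwen, created by Alibaba Cloud. You are a helpful assistant."

FENCE = "`" * 3  # Markdown code fence, built this way to keep the example readable


def build_messages(prompt, system_prompt=SYSTEM_PROMPT):
    """Step 3: wrap the user prompt in the chat message format."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": prompt},
    ]


def generate_code(prompt, max_new_tokens=512):
    """Steps 2 and 4 to 6: load the model, generate, and decode the reply."""
    # Imported lazily so the other helpers work without transformers installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    model = AutoModelForCausalLM.from_pretrained(
        MODEL_NAME, torch_dtype="auto", device_map="auto"
    )
    tokenizer = AutoTokenizer.from_pretrained(MODEL_NAME)

    # Step 4: render the chat messages into a single prompt string.
    text = tokenizer.apply_chat_template(
        build_messages(prompt), tokenize=False, add_generation_prompt=True
    )
    model_inputs = tokenizer([text], return_tensors="pt").to(model.device)

    # Step 5: max_new_tokens caps the length of the generated reply.
    generated_ids = model.generate(**model_inputs, max_new_tokens=max_new_tokens)

    # Drop the prompt tokens so that only newly generated tokens are decoded.
    new_ids = [
        output[len(inputs):]
        for inputs, output in zip(model_inputs.input_ids, generated_ids)
    ]

    # Step 6: convert the generated token ids back into text.
    return tokenizer.batch_decode(new_ids, skip_special_tokens=True)[0]


def extract_code_block(response):
    """Step 7 helper: return the first fenced code block, or the whole reply."""
    pattern = FENCE + r"[\w+-]*\n(.*?)" + FENCE
    match = re.search(pattern, response, re.DOTALL)
    return match.group(1).strip() if match else response.strip()
```

Calling `generate_code("write a quick sort algorithm.")` downloads the model on first use and returns the reply as text. Passing that reply to `extract_code_block` isolates the code so it can be saved to a file and tested as described in step 7.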