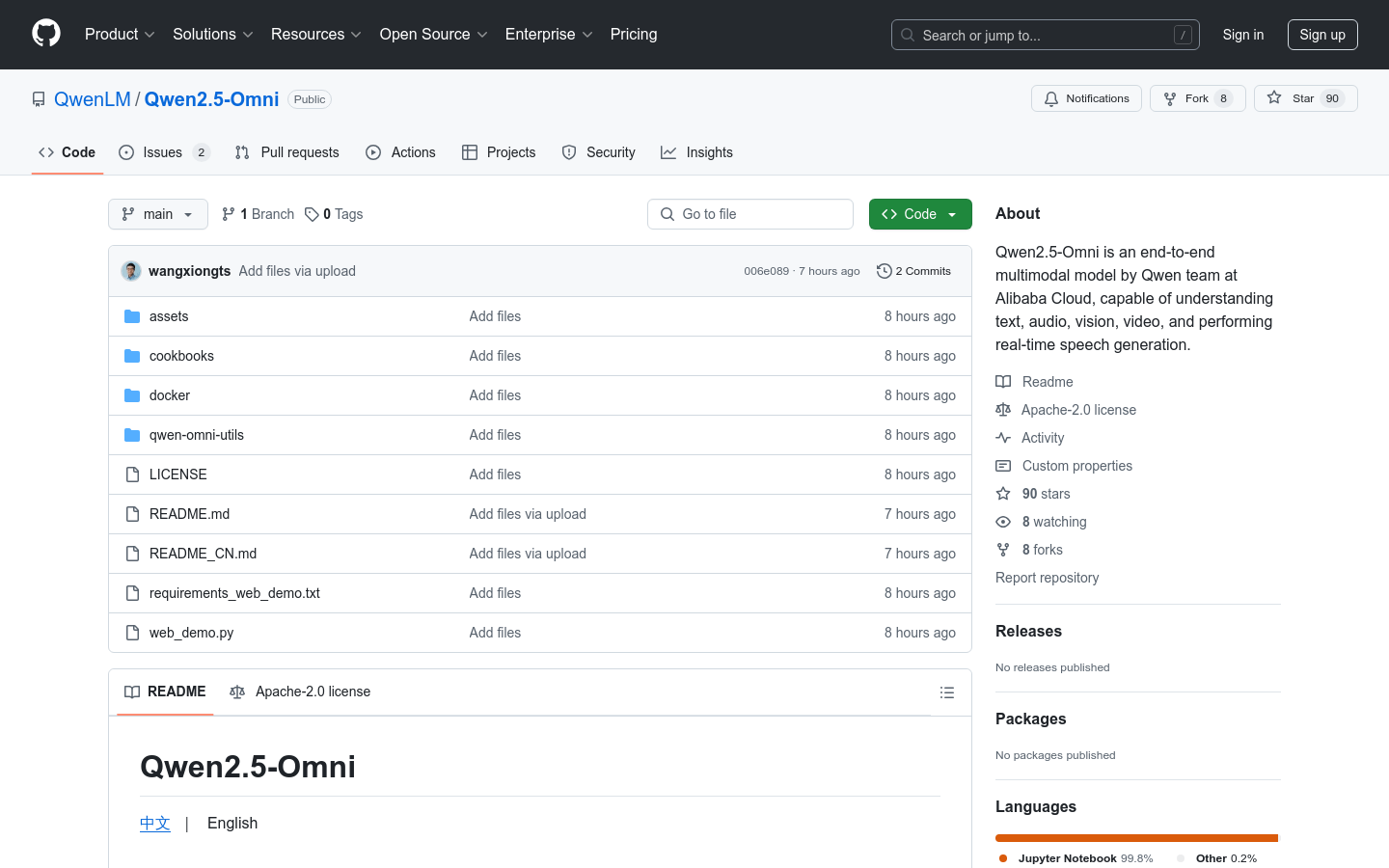

Qwen2.5-Omni is a new-generation, end-to-end multimodal flagship model released by Alibaba Cloud's Tongyi Qianwen (Qwen) team. Designed for comprehensive multimodal perception, it accepts text, image, audio, and video input and generates both text and natural speech as a real-time streaming response. Its Thinker-Talker architecture and TMRoPE (Time-aligned Multimodal RoPE) position embedding underpin its results on multimodal tasks, especially audio, video, and image understanding. The model surpasses single-modal models of similar scale on multiple benchmarks, showing strong performance and broad application potential. Qwen2.5-Omni has been open-sourced and is available on Hugging Face, ModelScope, DashScope, and GitHub, giving developers a range of usage scenarios and development support.

Target audience:

This model is suitable for developers, researchers, enterprises, and anyone who needs to process multimodal data. It helps developers quickly build multimodal applications such as intelligent customer service, virtual assistants, and content creation tools, and it gives researchers a capable tool for exploring multimodal interaction and other frontier areas of artificial intelligence.

Example usage scenarios:

In intelligent customer service, Qwen2.5-Omni can understand questions that customers ask by voice or text in real time and answer accurately in natural speech and text.

In the field of education, the model can be used to develop interactive learning tools that help students better understand knowledge through a combination of voice explanation and image presentation.

In content creation, Qwen2.5-Omni can analyze input text, images, or video and respond with text and speech, giving creators ideas and material to work from.

Product Features:

Omni-modal Thinker-Talker architecture: The Thinker module processes multimodal input and generates high-level semantic representations together with the corresponding text. The Talker module receives the Thinker's semantic representations and text as a stream and fluently generates discrete speech units, connecting multimodal input to speech output without an intermediate handoff.

Real-time audio and video interaction: Supports fully real-time interaction, processing input in chunks and producing output immediately, which suits real-time dialogue, video conferencing, and other scenarios that require immediate feedback.

Natural and fluent speech generation: Surpasses many existing streaming and non-streaming alternatives in the naturalness and stability of the speech it generates.

Strong performance across modalities: Performs strongly when benchmarked against single-modal models of similar scale. It outperforms the similarly sized Qwen2-Audio in audio capabilities and is comparable to Qwen2.5-VL-7B in image and video understanding.

End-to-end speech instruction following: Follows spoken instructions about as well as it follows text input, performs well on benchmarks such as general knowledge understanding and mathematical reasoning, and can accurately understand and carry out voice commands.
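The streaming relationship between chunked input, the Thinker, and the Talker described above can be sketched with plain Python generators. This is a toy illustration only, not the model's implementation: the names `chunk_input`, `thinker`, `talker`, and `run_pipeline`, the averaging used as a "semantic representation", and the modulo "quantization" are all assumptions made up for this example.

```python
from dataclasses import dataclass
from typing import Iterable, Iterator, List, Tuple


@dataclass
class ThinkerOutput:
    """One streaming step from the toy Thinker (hypothetical structure)."""
    text_token: str        # text produced for this step
    semantic: List[float]  # stand-in for a high-level semantic representation


def chunk_input(samples: List[int], chunk_size: int) -> Iterator[List[int]]:
    """Split an input stream into fixed-size chunks; the last one may be shorter."""
    for start in range(0, len(samples), chunk_size):
        yield samples[start:start + chunk_size]


def thinker(chunks: Iterable[List[int]]) -> Iterator[ThinkerOutput]:
    """Toy Thinker: emits one text token and one semantic vector per chunk."""
    for index, chunk in enumerate(chunks):
        mean = sum(chunk) / len(chunk)
        yield ThinkerOutput(text_token=f"tok{index}",
                            semantic=[mean, float(len(chunk))])


def talker(stream: Iterable[ThinkerOutput], codebook_size: int = 16) -> Iterator[int]:
    """Toy Talker: maps each Thinker step to a discrete speech unit ID."""
    for step in stream:
        # Made-up quantization; a real Talker would be a learned decoder.
        yield int(sum(step.semantic)) % codebook_size


def run_pipeline(samples: List[int], chunk_size: int = 4) -> Tuple[List[str], List[int]]:
    """Process chunks lazily so output is produced before all input is seen."""
    texts: List[str] = []
    units: List[int] = []
    for step in thinker(chunk_input(samples, chunk_size)):
        texts.append(step.text_token)
        units.extend(talker([step]))  # speech unit emitted as soon as the step exists
    return texts, units


if __name__ == "__main__":
    print(run_pipeline(list(range(10)), chunk_size=4))
```

Because every stage is a generator, the first speech unit is available after the first chunk has been processed, which is the property that matters for real-time dialogue.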

How to use:

Visit a platform such as Qwen Chat or Hugging Face and select the Qwen2.5-Omni model.

Create a new session or project on the platform, enter the text to be processed, and upload any image, audio, or video files.

Select the output mode you need, such as text generation or speech synthesis, and set the relevant parameters (for example, voice type and output format).

Click the Run or Generate button; the model processes the input in real time and generates the results.

Review the generated text or speech, then edit or use it as needed.
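For developers who script these steps rather than use a web interface, the input side can be sketched as building one request from a prompt, optional media files, and output settings. The message layout below follows the role/content chat format commonly used by multimodal chat templates; the helper names `build_conversation` and `build_request`, the `output` section, the `"default"` voice value, and the extension-to-modality table are assumptions for illustration, not a documented Qwen2.5-Omni API.

```python
from pathlib import Path
from typing import Dict, List, Optional

# Assumed mapping from file extension to modality; extend as needed.
MODALITY_BY_SUFFIX = {
    ".png": "image", ".jpg": "image", ".jpeg": "image",
    ".wav": "audio", ".mp3": "audio",
    ".mp4": "video",
}


def build_conversation(prompt: str,
                       files: Optional[List[str]] = None,
                       system_prompt: str = "You are a helpful assistant.") -> List[Dict]:
    """Step 2: combine the text prompt and uploaded files into chat messages."""
    content: List[Dict] = []
    for name in files or []:
        suffix = Path(name).suffix.lower()
        if suffix not in MODALITY_BY_SUFFIX:
            raise ValueError(f"Unsupported file type: {name}")
        modality = MODALITY_BY_SUFFIX[suffix]
        content.append({"type": modality, modality: name})
    content.append({"type": "text", "text": prompt})
    return [
        {"role": "system", "content": [{"type": "text", "text": system_prompt}]},
        {"role": "user", "content": content},
    ]


def build_request(prompt: str,
                  files: Optional[List[str]] = None,
                  want_audio: bool = True,
                  voice: str = "default") -> Dict:
    """Step 3: attach the chosen output mode and parameters (hypothetical fields)."""
    modalities = ["text", "audio"] if want_audio else ["text"]
    return {
        "messages": build_conversation(prompt, files),
        "output": {"modalities": modalities, "voice": voice},
    }


if __name__ == "__main__":
    request = build_request("Describe this clip.", ["demo.mp4"], want_audio=False)
    print(request)
```

The resulting dictionary would still need to be passed to whichever client or inference library is in use; that call is deliberately left out because it differs between platforms.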