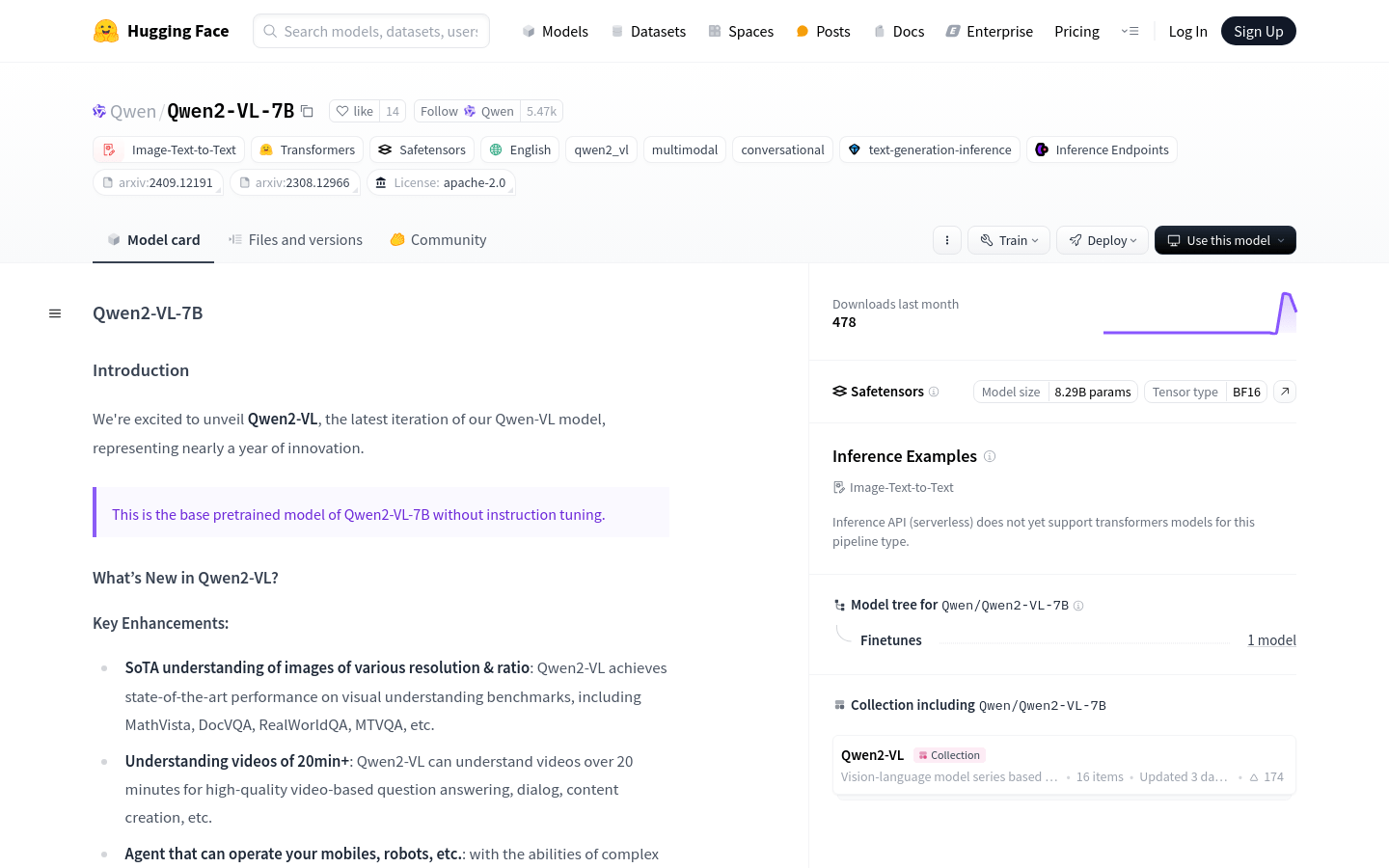

Qwen2-VL-7B is the latest iteration of the Qwen-VL model and represents nearly a year of innovation. The model achieves state-of-the-art performance on visual understanding benchmarks, including MathVista, DocVQA, RealWorldQA, and MTVQA. It can understand videos longer than 20 minutes, supporting high-quality video-based question answering, dialogue, and content creation. Beyond English and Chinese, Qwen2-VL also supports most European languages, Japanese, Korean, Arabic, Vietnamese, and others. Architecture updates include Naive Dynamic Resolution and Multimodal Rotary Position Embedding (M-ROPE), which enhance its multimodal processing capabilities.

Target audience:

Qwen2-VL-7B is aimed at researchers, developers, and enterprise users, especially those who need visual language understanding and text generation. The model can be applied to scenarios such as automatic content creation, video analysis, and multi-language text understanding, helping users improve efficiency and accuracy.

Example usage scenarios:

Case 1: Use Qwen2-VL-7B to automatically summarize video content and answer questions about it.

Case 2: Integrate Qwen2-VL-7B into mobile applications to implement image-based search and recommendations.

Case 3: Use Qwen2-VL-7B for visual question answering and content analysis of multi-language documents.

Product features:

- Image understanding at various resolutions and scales: Qwen2-VL achieves state-of-the-art performance on visual understanding benchmarks such as MathVista, DocVQA, RealWorldQA, and MTVQA.

- Long-video understanding: Qwen2-VL can understand videos longer than 20 minutes, supporting high-quality video question answering and dialogue.

- Integration with mobile devices and robots: with its complex reasoning and decision-making capabilities, Qwen2-VL can be integrated into mobile devices and robots to operate them automatically based on the visual environment and text instructions.

- Multi-language support: Qwen2-VL supports text understanding in multiple languages, including most European languages, Japanese, Korean, Arabic, Vietnamese, etc.

- Arbitrary image resolution (Naive Dynamic Resolution): Qwen2-VL can process images of any resolution, providing an experience closer to human visual processing.

- Multimodal Rotary Position Embedding (M-ROPE): Qwen2-VL decomposes the positional embedding into parts that capture 1D textual, 2D visual, and 3D video position information, enhancing its multimodal processing capabilities.
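
To make the M-ROPE feature more concrete, here is a minimal sketch of how position IDs could be split into temporal, height, and width components. It is a simplified illustration, not the model's actual implementation: the function names `text_positions` and `visual_positions` are hypothetical, and details such as how IDs continue across mixed text and visual segments are left out.

```python
from typing import List, Tuple

# One position ID per axis: (temporal, height, width).
Position = Tuple[int, int, int]


def text_positions(num_tokens: int, start: int = 0) -> List[Position]:
    """Text tokens: all three components share one ID, like ordinary 1D positions."""
    return [(start + i, start + i, start + i) for i in range(num_tokens)]


def visual_positions(frames: int, rows: int, cols: int, start: int = 0) -> List[Position]:
    """Visual tokens on a frames x rows x cols grid.

    An image is the special case frames=1 (constant temporal ID);
    a video increments the temporal ID once per frame.
    """
    return [
        (start + t, start + r, start + c)
        for t in range(frames)
        for r in range(rows)
        for c in range(cols)
    ]


print(text_positions(3))          # 1D: [(0, 0, 0), (1, 1, 1), (2, 2, 2)]
print(visual_positions(1, 2, 2))  # 2D: [(0, 0, 0), (0, 0, 1), (0, 1, 0), (0, 1, 1)]
print(visual_positions(2, 1, 2))  # 3D: [(0, 0, 0), (0, 0, 1), (1, 0, 0), (1, 0, 1)]
```

In this sketch, text collapses to a single running index, an image varies only along height and width, and a video additionally varies along time, which mirrors the 1D, 2D, and 3D cases named in the feature description.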

Usage tutorial:

1. Install the latest version of the Hugging Face transformers library with the command `pip install -U transformers`.

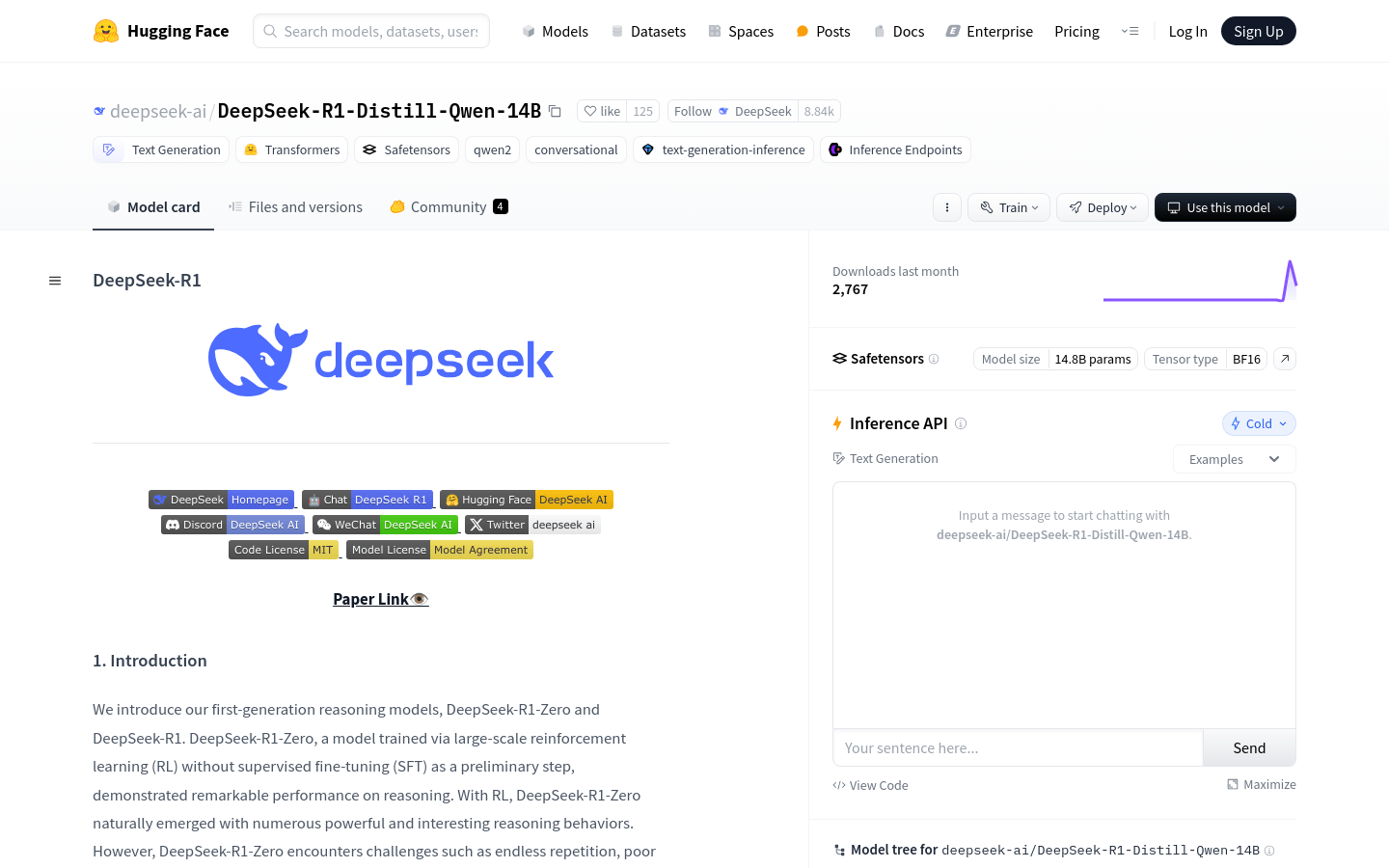

2. Visit the Hugging Face page of Qwen2-VL-7B for model details and usage guidelines.

3. Select the pre-trained model that fits your needs, then download and deploy it.

4. Use the tools and interfaces provided by Hugging Face to integrate Qwen2-VL-7B into your own project.

5. Following the model's API documentation, write code to process image and text inputs.

6. Run the model, obtain the output results, and perform post-processing as needed.

7. Carry out further analysis or application development based on the output of the model.
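
The steps above can be sketched roughly as follows. This is a minimal example under several assumptions: PyTorch and Pillow are installed alongside transformers, the checkpoint ID `Qwen/Qwen2-VL-7B-Instruct` is the variant you want (swap in another if it is not), and the helper names `build_messages` and `describe_image` are hypothetical names chosen for illustration. Check the model's Hugging Face page for the currently recommended usage.

```python
from typing import Any, Dict, List


def build_messages(question: str) -> List[Dict[str, Any]]:
    """Build a single-turn chat with one image placeholder and one text question."""
    return [
        {
            "role": "user",
            "content": [
                {"type": "image"},
                {"type": "text", "text": question},
            ],
        }
    ]


def describe_image(
    image_path: str,
    question: str,
    model_id: str = "Qwen/Qwen2-VL-7B-Instruct",  # assumed checkpoint ID
    max_new_tokens: int = 128,
) -> str:
    """Load the model, run one image + text query, and return the decoded answer."""
    # Heavy imports stay inside the function so importing this file is cheap.
    from PIL import Image
    from transformers import AutoProcessor, Qwen2VLForConditionalGeneration

    model = Qwen2VLForConditionalGeneration.from_pretrained(model_id, torch_dtype="auto")
    processor = AutoProcessor.from_pretrained(model_id)

    # Turn the chat structure into the prompt string the model expects.
    prompt = processor.apply_chat_template(
        build_messages(question), tokenize=False, add_generation_prompt=True
    )
    image = Image.open(image_path).convert("RGB")
    inputs = processor(text=[prompt], images=[image], return_tensors="pt").to(model.device)

    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    # Post-processing: drop the prompt tokens and keep only the generated answer.
    new_tokens = output_ids[:, inputs["input_ids"].shape[1]:]
    return processor.batch_decode(new_tokens, skip_special_tokens=True)[0].strip()
```

Calling `describe_image("photo.jpg", "What is shown in this image?")` (with `photo.jpg` standing in for your own file) would download the weights on first use, so expect a large download and significant memory requirements for a 7B model.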