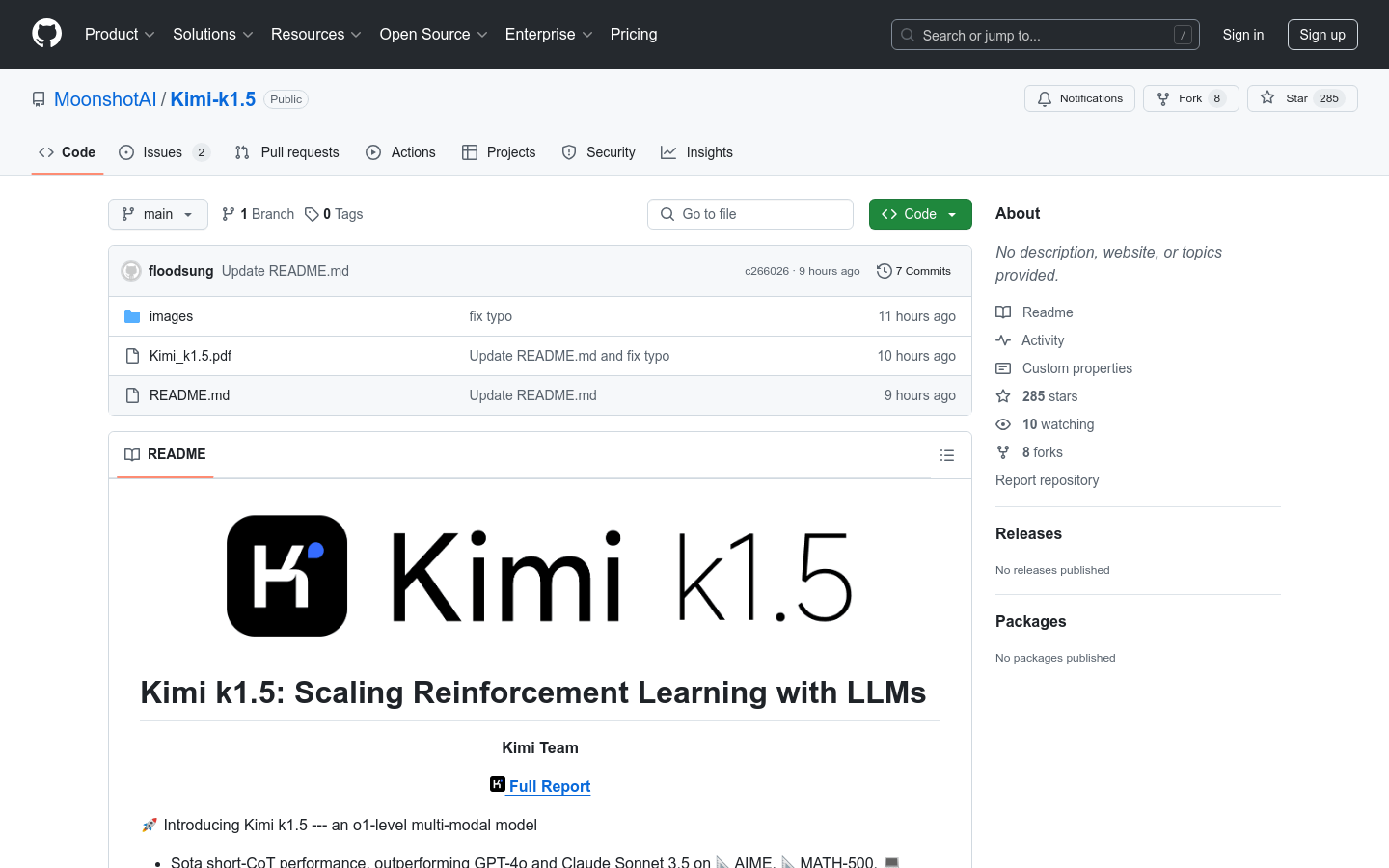

Product introduction

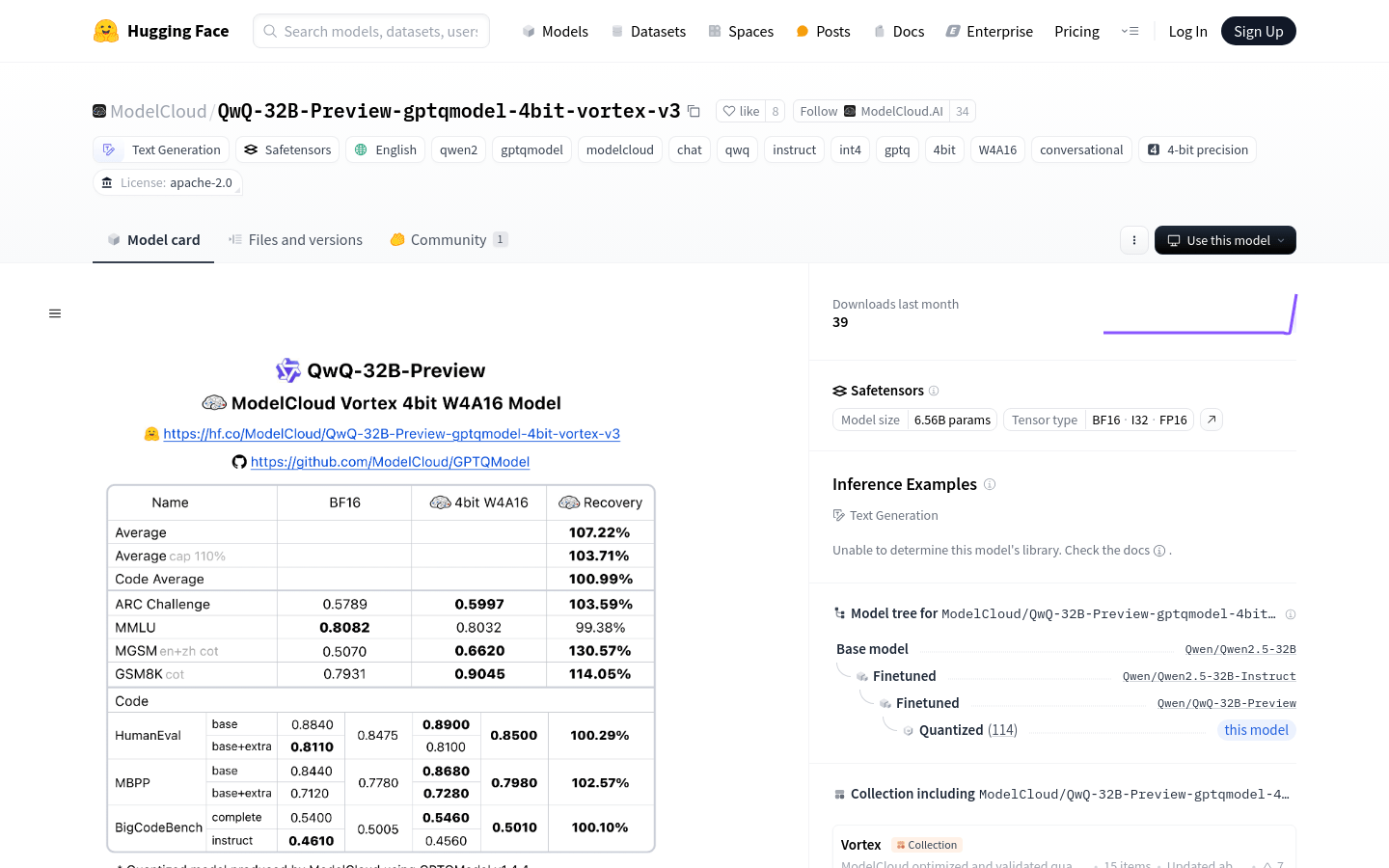

This is a 4-bit quantized language model based on Qwen2.5-32B. It uses GPTQ quantization to make inference efficient and keep resource consumption low: storage and compute requirements drop substantially while the model retains strong generation quality, which makes it well suited to resource-constrained environments. The model is mainly used in applications that need high-quality language generation, such as intelligent customer service, programming assistance, and content creation. Its open source license and flexible deployment options make it suitable for a wide range of commercial and research uses.

Target users

This product is aimed at developers and enterprises that need high-quality language generation, especially where resource consumption matters, such as intelligent customer service, programming assistance tools, and content creation platforms. Efficient quantization and flexible deployment make it a good fit for these cases.

Usage scenario examples

Intelligent customer service system: Quickly generate natural language responses to improve customer satisfaction

Developer tools: Generate code snippets or optimization suggestions to improve programming efficiency

Content creation platform: Generate creative text such as stories, articles, or advertising copy

Product features

Supports 4-bit quantization, significantly reducing model storage and computing requirements

Based on GPTQ quantization to achieve efficient inference and low-latency responses

Supports multi-language text generation and has a wide range of applications

Provides flexible APIs to simplify integration and deployment for developers

Open source license, allowing free use and secondary development

Works with PyTorch, with weights distributed in the Safetensors format

Provides a detailed model card and usage examples to make it easy to get started

Supports multi-platform deployment, including cloud and local servers
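To put the storage claim in the feature list into rough numbers, the sketch below estimates weight storage at 16-bit and 4-bit precision. It is a back-of-the-envelope calculation, not a measurement: the parameter count of 32 billion is a nominal assumption taken from the "32B" in the model name, the helper name `weight_storage_gib` is hypothetical, and the estimate ignores the per-group scales and zero points that GPTQ stores as well as any layers left unquantized, so real checkpoints will be somewhat larger.

```python
def weight_storage_gib(num_params: float, bits_per_weight: int) -> float:
    """Approximate storage for the weights alone, in GiB."""
    total_bytes = num_params * bits_per_weight / 8
    return total_bytes / 1024**3


# Assumption: nominal parameter count implied by the "32B" model name.
NUM_PARAMS = 32e9

fp16_gib = weight_storage_gib(NUM_PARAMS, 16)  # half-precision baseline
int4_gib = weight_storage_gib(NUM_PARAMS, 4)   # 4-bit quantized weights

print(f"16-bit weights: {fp16_gib:.1f} GiB")
print(f"4-bit weights:  {int4_gib:.1f} GiB")
print(f"Reduction:      {fp16_gib / int4_gib:.0f}x")
```

Under these assumptions the weights shrink by a factor of four, which is the difference between needing several accelerators and fitting on one or two.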

Tutorial

1 Visit the model's Hugging Face page and download the model files and the required libraries

2 Use AutoTokenizer to load the model's tokenizer

3 Load the model with GPTQModel, specifying the model path

4 Construct the input text and use the tokenizer to convert it to the model's input format

5 Call the model's generate method to produce the output tokens

6 Use the tokenizer to decode the output and obtain the final generated text

7 Further process or apply the generated text as required
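The steps above can be sketched in Python roughly as follows. This is a minimal illustration under several assumptions, not the model card's official example: `MODEL_PATH` is a hypothetical placeholder for the local directory or Hugging Face repository you downloaded in step 1, the helper names `load_model_and_tokenizer` and `generate_text` are invented for this sketch, and the `GPTQModel.load` call assumes a recent release of the `gptqmodel` package (older releases exposed `GPTQModel.from_quantized` instead). Check the model card for the exact call it recommends.

```python
# Assumption: placeholder path; replace with the real model directory or repo id.
MODEL_PATH = "path/to/quantized-model"


def load_model_and_tokenizer(model_path: str):
    """Steps 2 and 3: load the tokenizer and the GPTQ-quantized model."""
    # Imported lazily so this file can be read and tested without the heavy
    # dependencies (transformers, gptqmodel) installed.
    from transformers import AutoTokenizer
    from gptqmodel import GPTQModel  # assumption: recent gptqmodel release

    tokenizer = AutoTokenizer.from_pretrained(model_path)
    model = GPTQModel.load(model_path)
    return tokenizer, model


def generate_text(tokenizer, model, prompt: str, max_new_tokens: int = 128) -> str:
    """Steps 4 to 6: tokenize the prompt, generate, and decode the new tokens."""
    # Step 4: convert the text to the model's input format on the model's device.
    inputs = tokenizer(prompt, return_tensors="pt").to(model.device)

    # Step 5: generate; the result contains the prompt tokens plus the new tokens.
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)

    # Step 6: drop the prompt tokens and decode only the continuation.
    prompt_len = len(inputs["input_ids"][0])
    return tokenizer.decode(output_ids[0][prompt_len:], skip_special_tokens=True)


# Example usage (downloads or reads a large model, so it is left commented out):
# tokenizer, model = load_model_and_tokenizer(MODEL_PATH)
# print(generate_text(tokenizer, model, "Write a short product description."))
```

Keeping the loading and generation steps in separate functions means the model is loaded once and reused across requests, which matters for a model of this size. For chat-style use, instruction-tuned variants typically expect the prompt to be formatted with the tokenizer's chat template before step 4; whether that applies depends on the checkpoint you downloaded.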