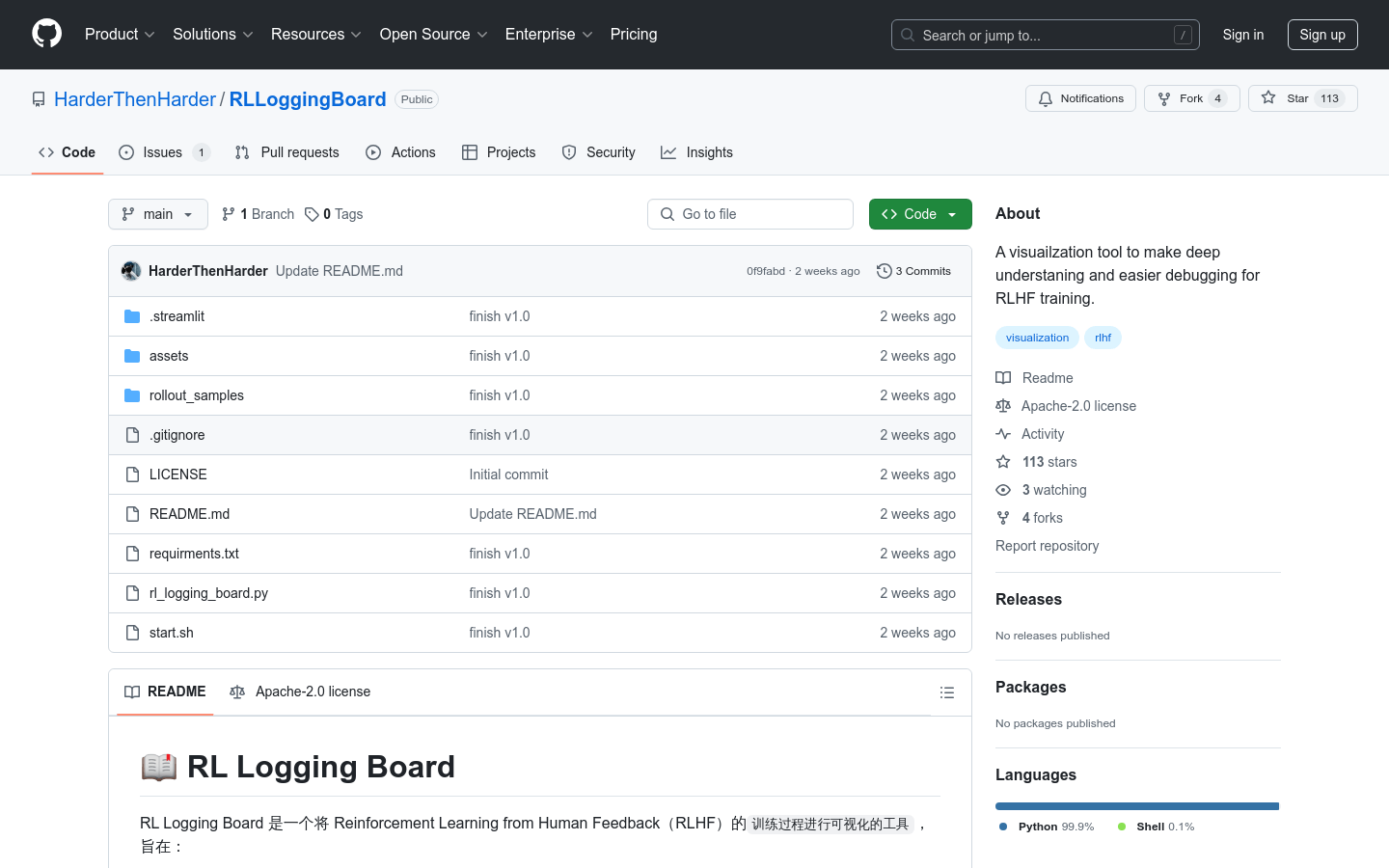

RLLoggingBoard: A Visualization Tool for the Reinforcement Learning from Human Feedback (RLHF) Training Process

Introduction

RLLoggingBoard is a tool for visualizing the training process of reinforcement learning from human feedback (RLHF). Through fine-grained metric monitoring, it helps researchers and developers understand training more intuitively, locate problems quickly, and improve training results. It provides several visualization modules, including reward curves, response sorting, and token-level metrics. It is designed to complement existing training frameworks and improve training efficiency and effectiveness.

Target users

This tool is intended for professionals working on reinforcement learning research and development, especially developers who need to monitor and debug the RLHF training process in depth. It helps them locate problems quickly, refine training strategies, and improve model performance.

Usage scenario examples

Rhyming task: Use the visualizations to check whether the verses generated by the model meet the rhyming requirements, and adjust the training process accordingly.

Dialogue generation task: Monitor the quality of the dialogue generated by the model, and use the reward distribution to analyze whether the model is converging.

Text generation task: Use token-level metric monitoring to find and fix abnormal tokens in the text generated by the model.
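As an illustration of the text generation scenario above, the following minimal sketch flags tokens whose sampled probability is unusually low. The function name, the `min_prob` threshold, and the input layout are assumptions made for this example; they are not part of RLLoggingBoard itself.

```python
from typing import Any, Dict, List


def find_abnormal_tokens(
    tokens: List[str],
    probs: List[float],
    min_prob: float = 0.01,  # hypothetical threshold, tune per task
) -> List[Dict[str, Any]]:
    """Return the tokens whose probability falls below min_prob."""
    if len(tokens) != len(probs):
        raise ValueError("tokens and probs must have the same length")
    return [
        {"index": i, "token": tok, "prob": p}
        for i, (tok, p) in enumerate(zip(tokens, probs))
        if p < min_prob
    ]


# Made-up example data: the third token has a very low probability.
flagged = find_abnormal_tokens(
    ["The", " moon", " zx", " rises"],
    [0.62, 0.31, 0.002, 0.45],
)
print(flagged)
```

A fixed threshold is the simplest possible rule. In practice, a comparison against the reference model's probability for the same token may be more informative.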

Product features

Reward area visualization: Displays the training curve, the score distribution, and the reward difference relative to the reference model.

Response area visualization: Sorts samples by metrics such as reward and KL divergence so that the characteristics of each sample can be analyzed.

Token-level monitoring: Displays fine-grained metrics such as reward, value, and probability for each token.

Supports multiple training frameworks: The tool is decoupled from the training framework and can be adapted to any framework that saves the required metrics.

Flexible data format: Reads data from .jsonl files, which makes it easy to integrate with existing training pipelines.

Optional reference model comparison: Supports saving the metrics of the reference model and comparing the RL model against the reference model.

Intuitive problem discovery: Visual inspection makes it quick to locate abnormal samples and problems in training.

Supports a variety of visualization modules: Provides rich visualization functions to meet different monitoring needs.
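The response sorting and reference model comparison described above can be sketched in a few lines. This is only an illustration of the idea: the record fields (`response`, `reward`, `ref_reward`, `kl`) and the helper names are hypothetical and do not reflect the tool's actual schema.

```python
from typing import Any, Dict, List

# Hypothetical per-sample records; field names are assumptions for this sketch.
samples: List[Dict[str, Any]] = [
    {"response": "A", "reward": 0.9, "ref_reward": 0.4, "kl": 0.12},
    {"response": "B", "reward": -0.3, "ref_reward": 0.1, "kl": 0.85},
    {"response": "C", "reward": 0.5, "ref_reward": 0.5, "kl": 0.03},
]


def sort_samples(
    records: List[Dict[str, Any]], key: str, descending: bool = True
) -> List[Dict[str, Any]]:
    """Sort sample records by a numeric metric such as reward or KL."""
    return sorted(records, key=lambda r: r[key], reverse=descending)


def reward_gap(record: Dict[str, Any]) -> float:
    """Reward of the RL model minus reward of the reference model."""
    return record["reward"] - record["ref_reward"]


# Samples with the largest KL divergence come first.
by_kl = sort_samples(samples, "kl")
# Per-sample reward difference, rounded for display.
gaps = {s["response"]: round(reward_gap(s), 2) for s in samples}

print([s["response"] for s in by_kl])
print(gaps)
```

Sorting by KL in descending order puts the samples that drifted furthest from the reference model at the top, which is usually where inspection should start.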

Tutorial

1. In your training framework, save the required metric data to .jsonl files.

2. Place the data files in the specified directory.

3. Install the dependency packages required by the tool (run pip install -r requirements.txt).

4. Run the startup script (bash start.sh).

5. Open the visualization interface in a browser and select the data folder to analyze.

6. Use the visualization modules to view reward curves, response rankings, token-level metrics, and so on.

7. Based on the visualization results, analyze problems in the training process and adjust the training strategy.

8. Keep monitoring the training process to ensure that model performance meets expectations.
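Step 1 can be sketched as follows: a minimal example, using only the Python standard library, of appending one JSON object per line to a .jsonl file and reading it back. The directory layout, file name, and record fields here are hypothetical; consult the tool's own documentation for the fields it actually requires.

```python
import json
import os
import tempfile
from typing import Any, Dict, List


def append_records(path: str, records: List[Dict[str, Any]]) -> None:
    """Append records to a .jsonl file, one JSON object per line."""
    os.makedirs(os.path.dirname(path) or ".", exist_ok=True)
    with open(path, "a", encoding="utf-8") as f:
        for rec in records:
            f.write(json.dumps(rec, ensure_ascii=False) + "\n")


def load_records(path: str) -> List[Dict[str, Any]]:
    """Read a .jsonl file back into a list of dicts, skipping blank lines."""
    with open(path, encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]


# Hypothetical layout: one file per training step inside a data folder.
log_dir = tempfile.mkdtemp()
log_path = os.path.join(log_dir, "rollout_samples", "step_0.jsonl")

# Hypothetical record: field names are assumptions for this sketch.
append_records(
    log_path,
    [{"prompt": "Write a couplet.", "response": "...", "reward": 0.7, "kl": 0.05}],
)
loaded = load_records(log_path)
print(loaded[0]["reward"])
```

Appending rather than overwriting lets the training loop call `append_records` after every batch without holding earlier samples in memory.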