What is SlowFast-LLaVA?

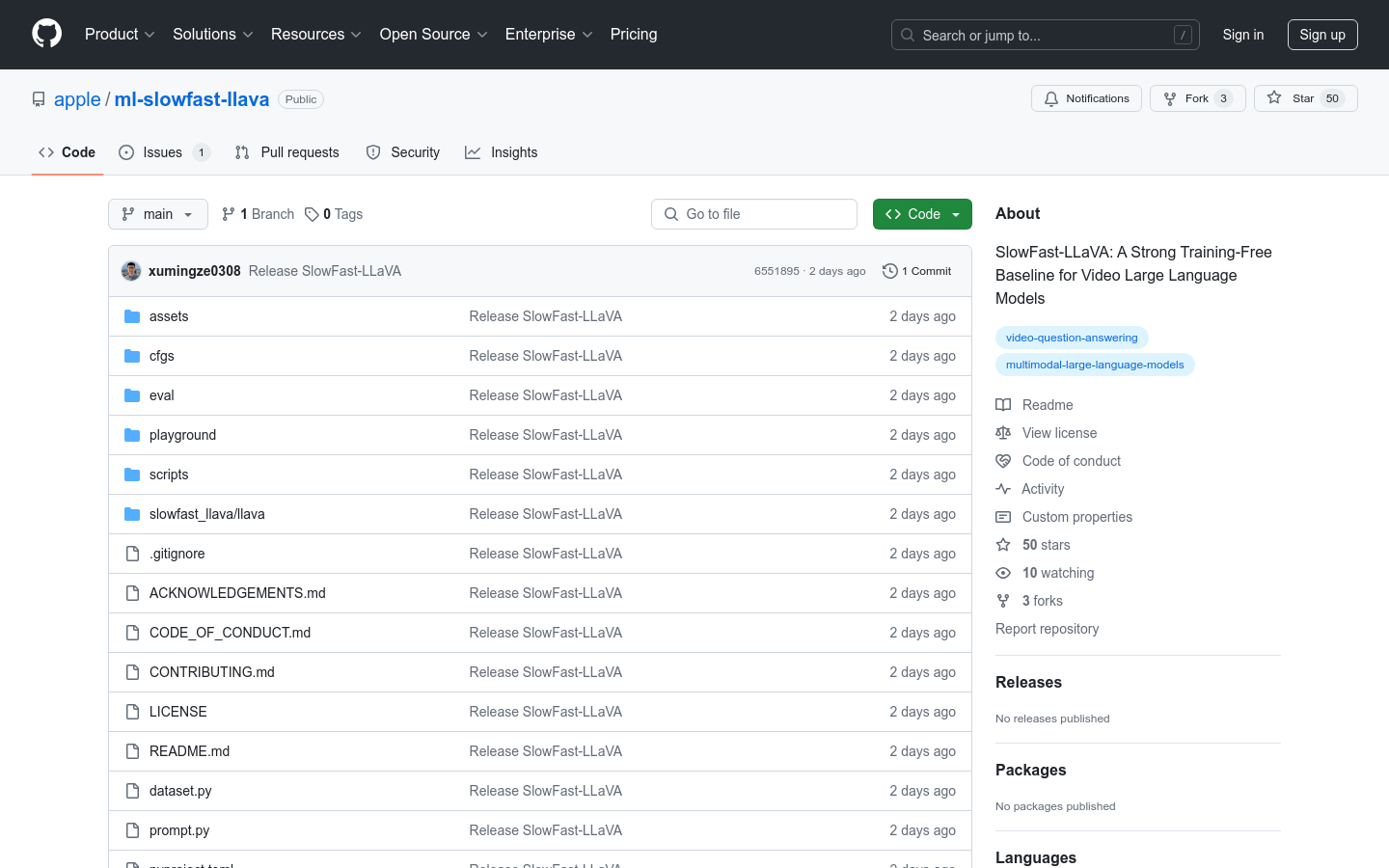

SlowFast-LLaVA is a training-free multimodal large language model (LLM) designed for video understanding and reasoning. Without any fine-tuning, it achieves performance comparable to, and in some cases better than, leading video LLMs across a range of video question-answering tasks and benchmarks.
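The "SlowFast" name refers to a two-pathway input design. A Slow pathway keeps a few frames at high spatial detail, and a Fast pathway keeps many frames but pools them aggressively in space to capture motion. The tokens from both pathways are combined before they reach the LLM. The sketch below illustrates the idea with NumPy on random stand-in features. The frame counts, grid sizes, and function names are hypothetical choices for illustration, not the project's actual implementation or defaults.

```python
import numpy as np


def avg_pool_2d(x: np.ndarray, out_h: int, out_w: int) -> np.ndarray:
    """Average-pool the spatial dims of x, shape (T, H, W, C), to (T, out_h, out_w, C).

    Assumes H and W are divisible by out_h and out_w.
    """
    t, h, w, c = x.shape
    assert h % out_h == 0 and w % out_w == 0, "grid must divide evenly"
    # Split H and W into (out, block) pairs, then average over each block.
    x = x.reshape(t, out_h, h // out_h, out_w, w // out_w, c)
    return x.mean(axis=(2, 4))


def slowfast_tokens(
    feats: np.ndarray,
    num_slow: int = 8,            # hypothetical: frames kept by the Slow pathway
    slow_grid: tuple = (12, 24),  # hypothetical: Slow spatial grid (more detail)
    fast_grid: tuple = (4, 4),    # hypothetical: Fast spatial grid (coarse)
) -> np.ndarray:
    """Combine Slow and Fast pathway tokens from per-frame features (T, H, W, C)."""
    t, _, _, c = feats.shape
    # Slow pathway: a few uniformly spaced frames, lightly pooled.
    idx = np.linspace(0, t - 1, num_slow).round().astype(int)
    slow = avg_pool_2d(feats[idx], *slow_grid)
    # Fast pathway: every frame, heavily pooled.
    fast = avg_pool_2d(feats, *fast_grid)
    # Flatten each pathway into a token sequence and concatenate (Slow first).
    return np.concatenate([slow.reshape(-1, c), fast.reshape(-1, c)], axis=0)


# Random stand-in for vision-encoder features: 48 frames on a 24x24 patch grid.
feats = np.random.default_rng(0).standard_normal((48, 24, 24, 64)).astype(np.float32)
tokens = slowfast_tokens(feats)
print(tokens.shape)  # (8*12*24 + 48*4*4, 64) = (3072, 64)
```

Pooling the Fast pathway down to a 4x4 grid is what keeps the total token count manageable even though it covers six times as many frames as the Slow pathway in this example.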

Who Can Benefit from SlowFast-LLaVA?

The model suits researchers and developers working on video understanding and AI more broadly. Because it requires no training, they can deploy and test video question-answering systems quickly, without a time-consuming training process.

Example Usage Scenarios:

Researchers can use SlowFast-LLaVA to build automatic question-answering systems for video content.

Developers can use the model to prototype video content analysis applications.

Educational institutions can adopt it as a teaching tool for advanced video understanding techniques.

Key Features:

Training-free video question answering and reasoning.

Supports multiple video question-answering tasks and benchmarks.

Uses pre-trained LLaVA-NeXT weights for model evaluation.

Provides detailed installation and usage guides.

Supports custom configurations for different hardware environments.

Includes extensive sample code and scripts for demos and evaluation.
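Tailoring a configuration to a given hardware environment usually comes down to trading input size and precision against available GPU memory. The following sketch shows one way to express that as presets. The `HardwareConfig` class, its fields, the preset names, and the memory thresholds are all hypothetical placeholders, not the project's configuration schema or measured requirements.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HardwareConfig:
    """Hypothetical inference settings; field names are illustrative only."""
    name: str
    min_gpu_mem_gb: float  # placeholder threshold, not a measured requirement
    num_frames: int        # frames sampled per video
    dtype: str             # precision to load weights in
    batch_size: int


# Ordered from most to least demanding; all values are made up for illustration.
PRESETS = [
    HardwareConfig("large-gpu", 80.0, num_frames=64, dtype="bfloat16", batch_size=4),
    HardwareConfig("mid-gpu", 40.0, num_frames=32, dtype="bfloat16", batch_size=1),
    HardwareConfig("small-gpu", 24.0, num_frames=16, dtype="float16", batch_size=1),
]


def pick_config(gpu_mem_gb: float) -> HardwareConfig:
    """Return the most demanding preset whose memory threshold fits the GPU."""
    for preset in PRESETS:
        if gpu_mem_gb >= preset.min_gpu_mem_gb:
            return preset
    raise ValueError(f"no preset fits {gpu_mem_gb} GB of GPU memory")


print(pick_config(48).name)  # mid-gpu
```

Keeping presets ordered from most to least demanding lets a simple first-match loop pick the richest setting the hardware can afford.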

Step-by-Step Tutorial:

1. Install the required software, including CUDA, Python, and PyTorch.

2. Clone the project repository and create a new conda environment.

3. Activate the environment and install the project dependencies by following the installation guide.

4. Download and prepare the required pre-trained model weights (LLaVA-NeXT).

5. Prepare the datasets: the video files and their corresponding question-answer files.

6. Adjust parameters in the configuration file as needed for your hardware.

7. Run the provided scripts for model inference and evaluation.

8. Analyze the outputs and refine the model settings or your application as needed.
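Steps 5 through 8 amount to pairing each video with its questions, collecting the model's answers, and scoring them. The sketch below shows that loop in plain Python. It assumes a hypothetical JSON question-answer format with `video`, `question`, and `answer` fields and a placeholder `answer_fn` standing in for the model call; neither matches the project's actual file format or API. Exact-match accuracy is used for simplicity, whereas open-ended video QA benchmarks often use other metrics, such as LLM-assisted scoring.

```python
import json
from typing import Callable


def normalize(text: str) -> str:
    """Lowercase and strip whitespace and trailing punctuation for lenient matching."""
    return text.strip().lower().rstrip(".!?")


def evaluate(qa_json: str, answer_fn: Callable[[str, str], str]) -> float:
    """Run answer_fn over every QA pair and return exact-match accuracy."""
    items = json.loads(qa_json)  # hypothetical format: list of {video, question, answer}
    if not items:
        return 0.0
    correct = 0
    for item in items:
        pred = answer_fn(item["video"], item["question"])  # step 7: inference
        correct += normalize(pred) == normalize(item["answer"])  # step 8: scoring
    return correct / len(items)


# Step 5: a tiny hypothetical QA file, inlined here instead of read from disk.
qa_json = json.dumps([
    {"video": "cooking.mp4", "question": "What is being chopped?", "answer": "Onions"},
    {"video": "cooking.mp4", "question": "Is the stove on?", "answer": "Yes"},
    {"video": "park.mp4", "question": "How many dogs are there?", "answer": "Two"},
])


def dummy_answer_fn(video: str, question: str) -> str:
    """Placeholder for the real model call; always answers 'yes.'"""
    return "yes."


print(evaluate(qa_json, dummy_answer_fn))  # one of three answers matches
```

In practice `dummy_answer_fn` would be replaced by whatever inference entry point the project's scripts expose.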