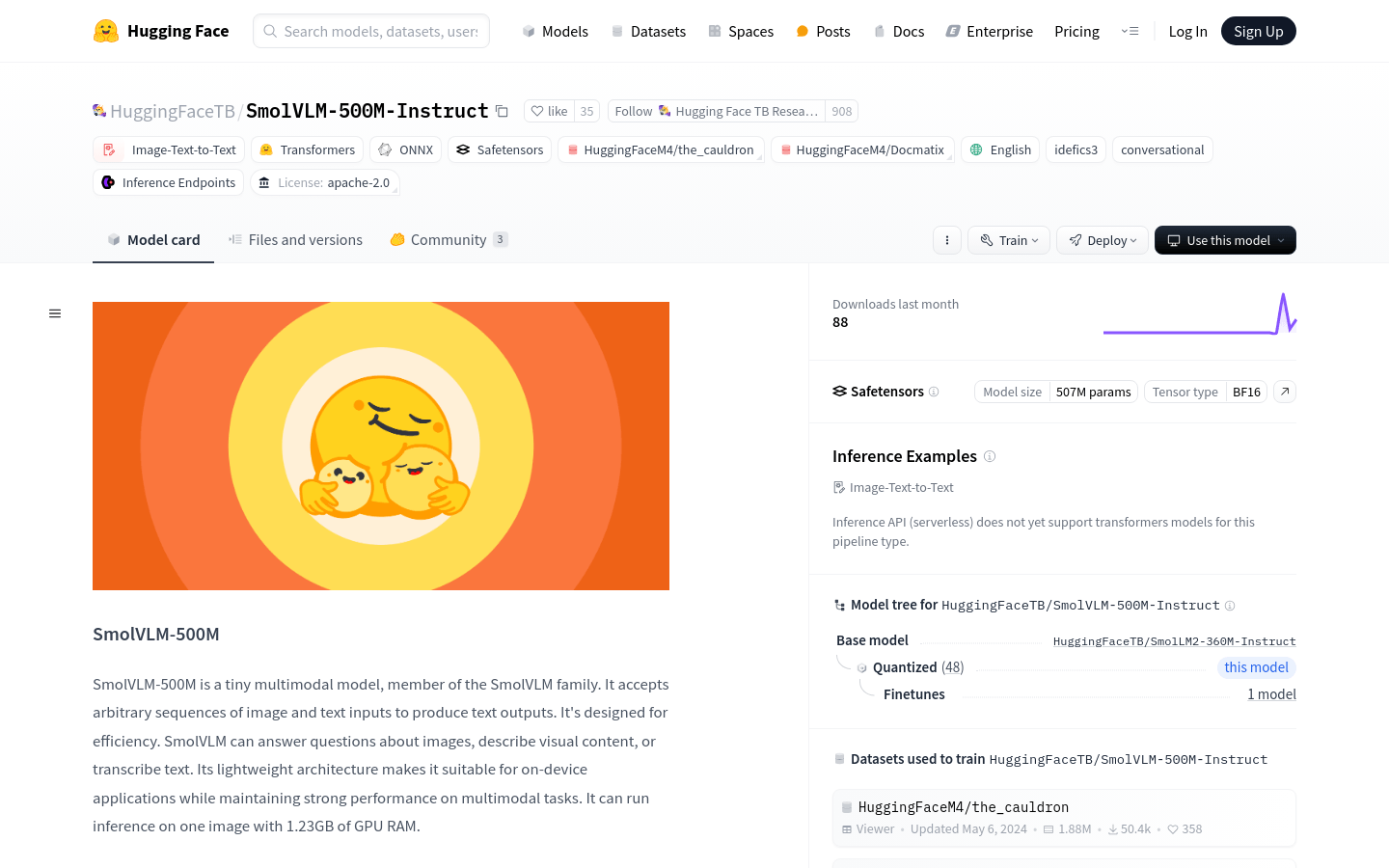

What is SmolVLM-500M?

SmolVLM-500M is a lightweight multimodal model developed by Hugging Face. Based on the Idefics3 architecture, it is built for efficient image and text processing. The model accepts image and text inputs in any order and generates text output, which makes it suitable for tasks such as image description and visual question answering. Its small size lets it run on resource-constrained devices while maintaining strong performance.

Who Needs It?

This model is ideal for developers and researchers who need to run multimodal tasks on devices with limited resources. It is particularly useful for applications requiring quick processing of image and text inputs to generate text outputs, such as mobile apps, embedded devices, or real-time applications.

Example Scenarios

Quickly generate image descriptions on mobile devices to help users understand what an image shows.

Enhance image recognition applications with visual question answering features.

Add basic text transcription on embedded devices to recognize text within images.

Key Features

Supports image description generation.

Offers visual question answering capabilities.

Can transcribe text from images.

Lightweight architecture for efficient device-side execution.

Efficient image encoding: large image patches keep the number of vision tokens per image low.

Versatile support for various multimodal tasks, including story creation based on visual content.

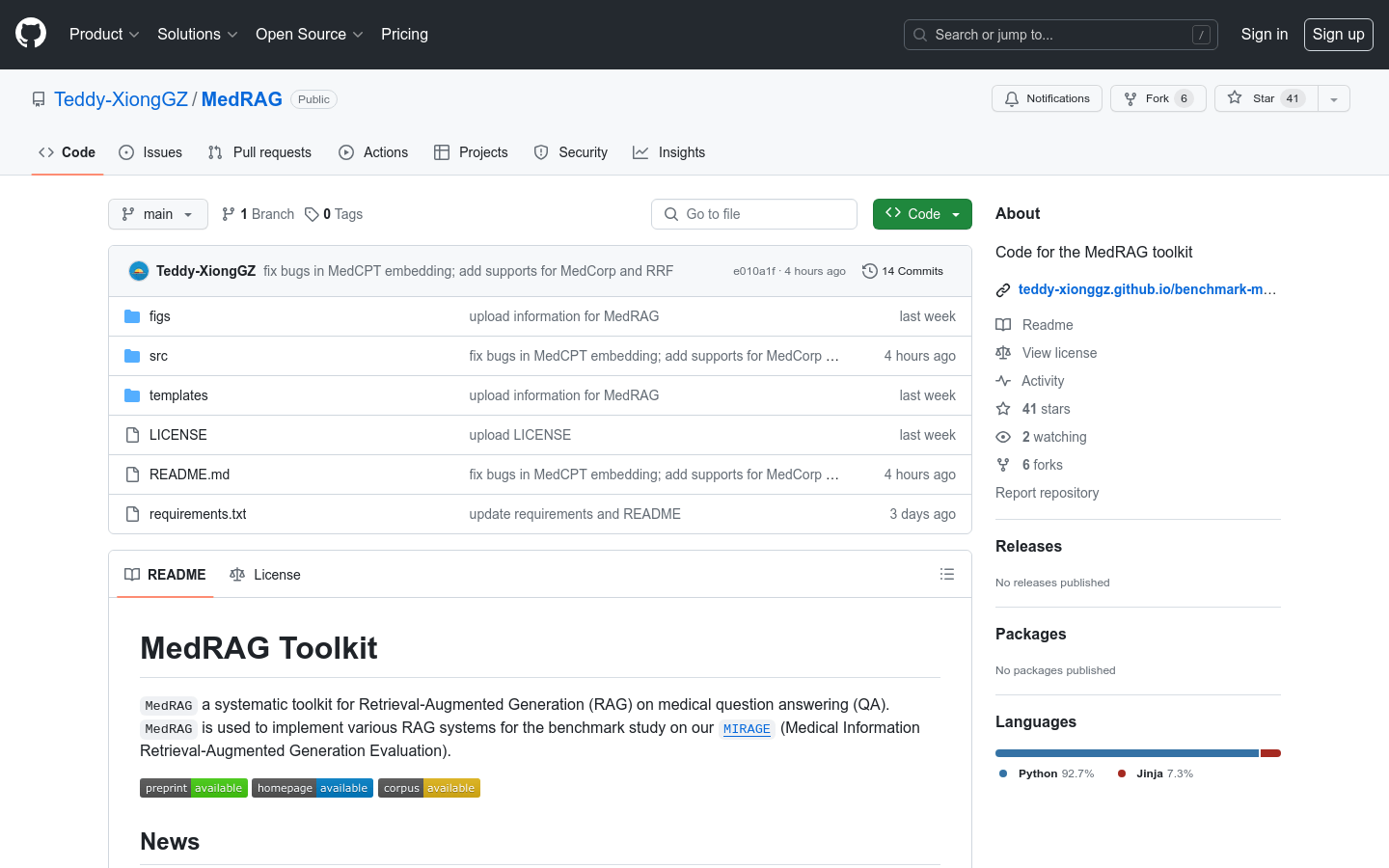

Released under the open-source Apache 2.0 license, which permits free use and modification.

Low memory requirements: single-image inference needs only 1.23 GB of GPU memory.
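Image description, visual question answering, text transcription, and story creation all use the same image-plus-text input; in practice they differ mainly in the text instruction sent with the image. The sketch below illustrates that idea. The prompt wording, the task keys, and the helper name instruction_for are hypothetical and chosen only for illustration; they are not prescribed by the model.

```python
from typing import Optional

# Hypothetical instruction texts; the wording is illustrative,
# not something the model requires.
TASK_PROMPTS = {
    "describe": "Describe this image in one or two sentences.",
    "transcribe": "Transcribe all text visible in this image.",
    "story": "Write a short story inspired by this image.",
}


def instruction_for(task: str, question: Optional[str] = None) -> str:
    """Return the text instruction to send alongside the image for a task."""
    if task == "vqa":
        # Visual question answering passes the user's own question through.
        if not question:
            raise ValueError("visual question answering needs a question")
        return question.strip()
    try:
        return TASK_PROMPTS[task]
    except KeyError:
        raise ValueError(f"unknown task: {task!r}") from None
```

For example, instruction_for("vqa", "How many dogs are there?") returns the question unchanged, while instruction_for("transcribe") returns the fixed transcription instruction.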

How to Use

1. Load the model and processor using the transformers library with AutoProcessor and AutoModelForVision2Seq.

2. Prepare input data by combining image and text queries into input messages.

3. Process the input using the processor to convert it into a format the model can accept.

4. Run inference by passing the processed input to the model to generate text output.

5. Decode the generated text IDs into readable text content.

6. If needed, fine-tune the model for a specific task by following the provided fine-tuning guide.
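Steps 1 to 5 above can be sketched in Python roughly as follows. This is a minimal sketch under stated assumptions, not an official recipe: the checkpoint name HuggingFaceTB/SmolVLM-500M-Instruct, the helper names build_messages and answer, and the max_new_tokens default are assumptions, and the exact classes available may vary with the installed transformers version. It also assumes torch and Pillow are installed.

```python
from typing import Any

# Assumed checkpoint name on the Hugging Face Hub.
MODEL_ID = "HuggingFaceTB/SmolVLM-500M-Instruct"


def build_messages(question: str, num_images: int = 1) -> list[dict[str, Any]]:
    """Step 2: combine image placeholders and a text query into one user message."""
    content: list[dict[str, Any]] = [{"type": "image"} for _ in range(num_images)]
    content.append({"type": "text", "text": question})
    return [{"role": "user", "content": content}]


def answer(image_path: str, question: str, max_new_tokens: int = 128) -> str:
    """Run steps 1 to 5 for a single image and a single text query."""
    # Heavy imports stay local so build_messages works without them.
    import torch
    from PIL import Image
    from transformers import AutoModelForVision2Seq, AutoProcessor

    device = "cuda" if torch.cuda.is_available() else "cpu"

    # Step 1: load the processor and the model.
    processor = AutoProcessor.from_pretrained(MODEL_ID)
    model = AutoModelForVision2Seq.from_pretrained(MODEL_ID).to(device)

    # Step 2: prepare the image and the input messages.
    image = Image.open(image_path).convert("RGB")
    messages = build_messages(question)

    # Step 3: render the chat template, then convert text and image to tensors.
    prompt = processor.apply_chat_template(messages, add_generation_prompt=True)
    inputs = processor(text=prompt, images=[image], return_tensors="pt").to(device)

    # Step 4: run inference to generate output token IDs.
    with torch.no_grad():
        generated_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)

    # Step 5: decode the generated IDs into readable text.
    return processor.batch_decode(generated_ids, skip_special_tokens=True)[0]
```

Calling answer("example.jpg", "Can you describe this image?") with a local image file (the file name here is a placeholder) would download the checkpoint on first use and return the decoded text. The decoded string typically contains the rendered prompt as well as the model's reply, so an application may want to strip the prompt portion before displaying it.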