What is Stable Diffusion 3.5 Medium?

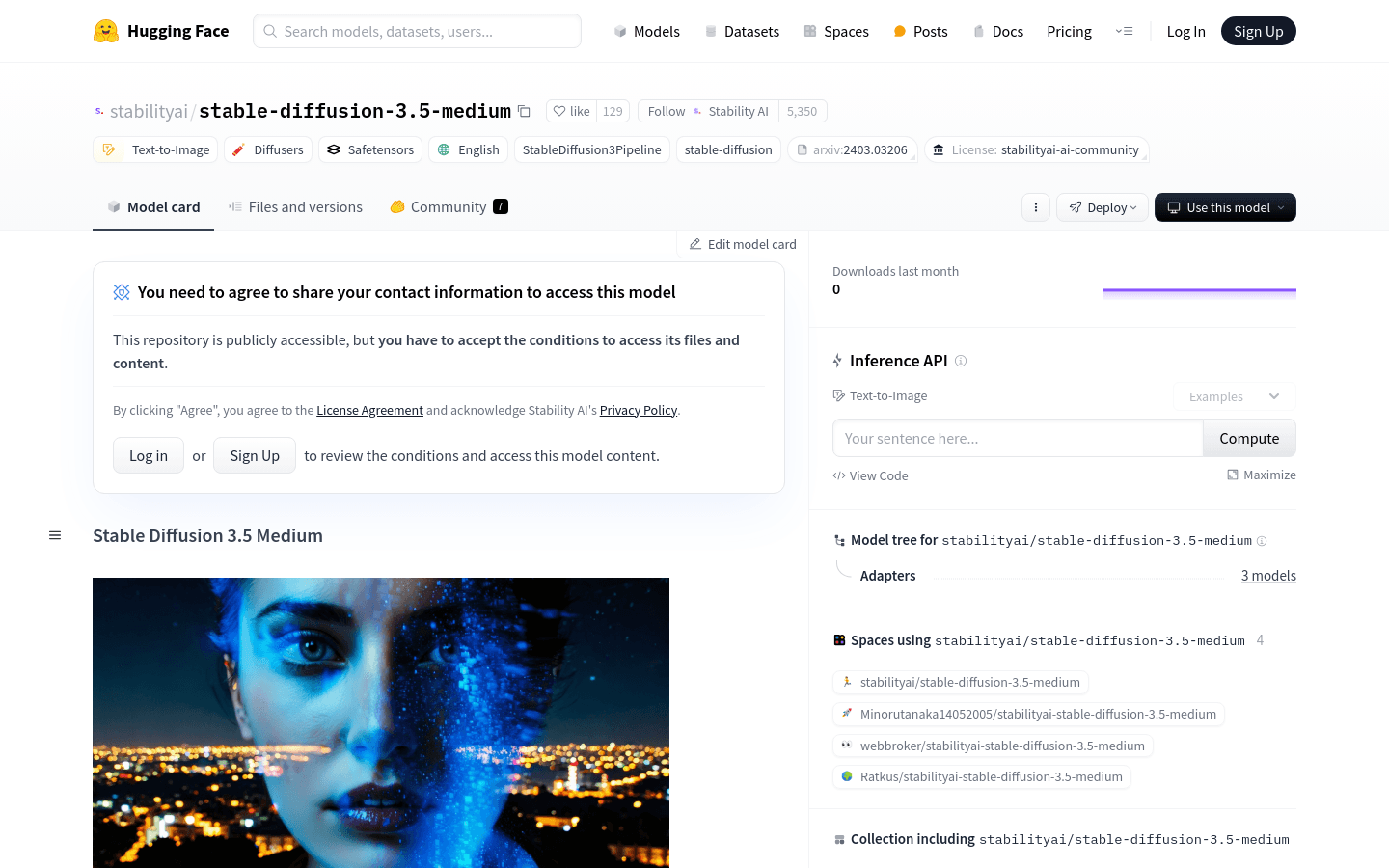

Stable Diffusion 3.5 Medium is a text-to-image generation model developed by Stability AI. Compared with earlier releases, it offers improved image quality, typography, complex prompt understanding, and resource efficiency. The model uses three fixed, pre-trained text encoders and applies QK normalization to improve training stability. It also includes dual attention blocks in the first 12 transformer layers, which help it generate coherent, high-quality images across multiple resolutions.

Who Can Use Stable Diffusion 3.5 Medium?

This model is ideal for artists, designers, researchers, and developers who need to create high-quality digital art, design prototypes, or conduct research on AI models. Its strengths lie in generating detailed images efficiently and reliably.

How Can You Use Stable Diffusion 3.5 Medium?

Example Scenarios:

Artists: Generate digital art based on textual descriptions.

Educators: Demonstrate how to convert text into images in educational settings.

Researchers: Analyze the quality and consistency of generated images to improve AI models.

Key Features:

Generates high-quality images from text prompts.

Improved multi-resolution image generation.

Enhanced training stability with QK normalization.

Dual attention blocks for better image consistency.

Supports long text prompts within token limits.

Compatible with the Diffusers library for easy integration.

Available under a community license that covers non-commercial use, as well as commercial use by organizations or individuals with less than $1 million in annual revenue.

Getting Started Guide:

1. Install the latest version of the Diffusers library using pip install -U diffusers.

2. Import the necessary libraries: import torch, then from diffusers import StableDiffusion3Pipeline.

3. Initialize the model pipeline and set its data type: pipe = StableDiffusion3Pipeline.from_pretrained("stabilityai/stable-diffusion-3.5-medium", torch_dtype=torch.bfloat16).

4. Move the model pipeline to the GPU for faster processing: pipe = pipe.to("cuda").

5. Generate an image using a text prompt: image = pipe("A capybara holding a sign that reads Hello World", num_inference_steps=40, guidance_scale=4.5).images[0].

6. Save the generated image: image.save("capybara.png").
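
The steps above can be collected into one short script. The following is a minimal sketch rather than an official recipe: it assumes a CUDA-capable GPU, that torch is installed alongside diffusers, and that the model weights can be downloaded from Hugging Face (which may require accepting the model's license first). The helper names generation_kwargs and generate_image are hypothetical and used only for illustration.

```python
"""Sketch: the getting-started steps combined into one module."""
from typing import Any, Dict

# Model ID as given in the guide above.
MODEL_ID = "stabilityai/stable-diffusion-3.5-medium"


def generation_kwargs(steps: int = 40, guidance_scale: float = 4.5) -> Dict[str, Any]:
    """Collect the sampling settings from the guide (hypothetical helper)."""
    if steps < 1:
        raise ValueError("steps must be at least 1")
    return {"num_inference_steps": steps, "guidance_scale": guidance_scale}


def generate_image(prompt: str, out_path: str = "capybara.png") -> str:
    """Load the pipeline, render one image for `prompt`, and save it.

    Hypothetical helper; assumes a CUDA GPU and downloadable weights.
    """
    # Heavy imports are deferred so this module can be imported
    # on a machine without torch or a GPU.
    import torch
    from diffusers import StableDiffusion3Pipeline

    # Steps 3 and 4: load the weights in bfloat16 and move them to the GPU.
    pipe = StableDiffusion3Pipeline.from_pretrained(
        MODEL_ID, torch_dtype=torch.bfloat16
    )
    pipe = pipe.to("cuda")

    # Step 5: run the diffusion process and take the first returned image.
    image = pipe(prompt, **generation_kwargs()).images[0]

    # Step 6: write the image to disk.
    image.save(out_path)
    return out_path
```

Calling generate_image("A capybara holding a sign that reads Hello World") would then reproduce steps 3 through 6 and write capybara.png to the current directory. Keeping the sampling settings in a separate function makes it easy to experiment with fewer steps or a different guidance scale without touching the loading code.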