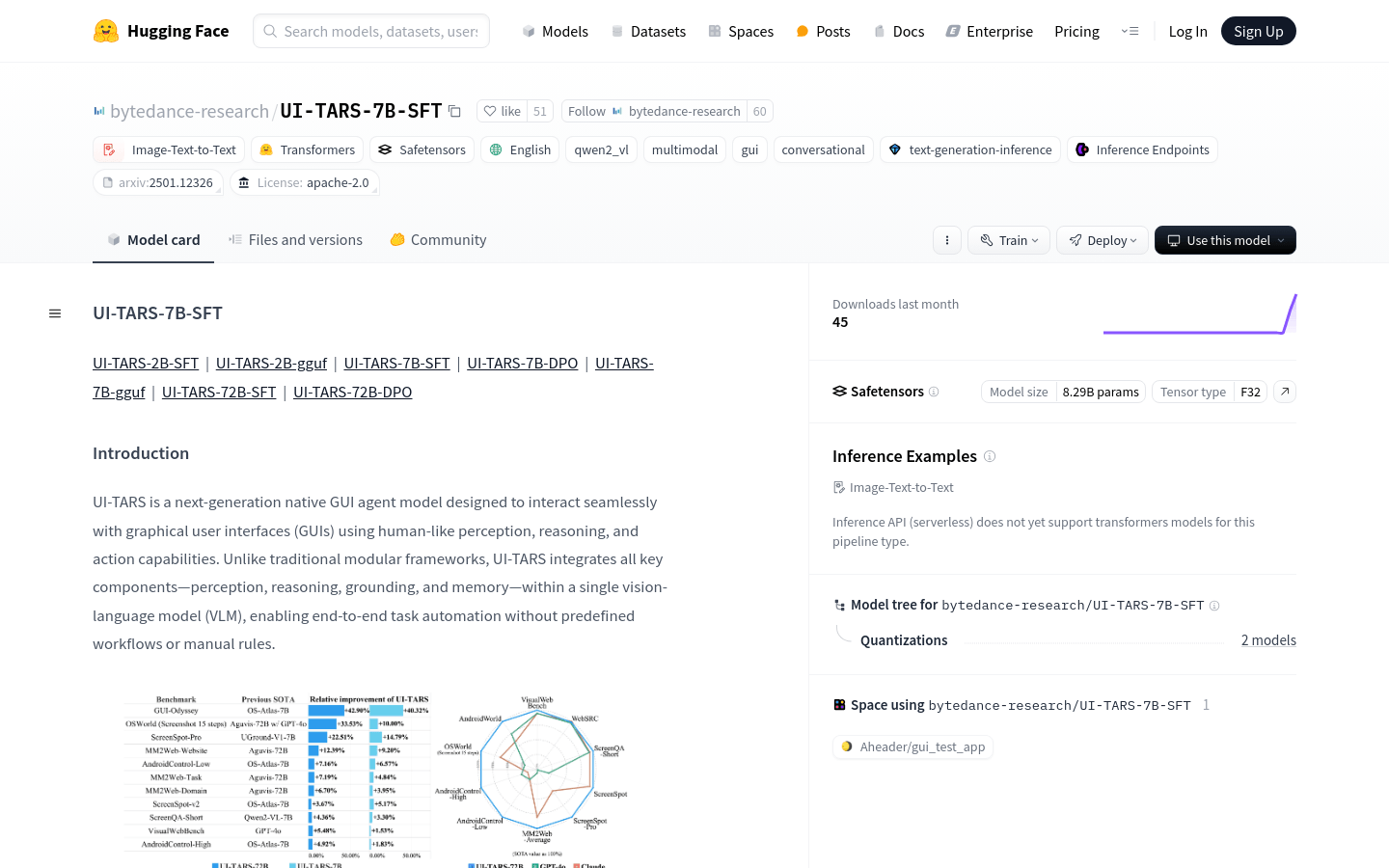

What is UI-TARS-7B-SFT?

UI-TARS-7B-SFT is a graphical user interface (GUI) automation model developed by the ByteDance research team. It interacts with software interfaces by emulating human perception, reasoning, and action. Its core strengths are multimodal interaction and high-precision visual perception, which allow it to complete complex tasks automatically without predefined workflows.

Target audience:

UI-TARS-7B-SFT is mainly aimed at enterprises and developers who need to handle large volumes of GUI interaction tasks efficiently. In automated testing, intelligent office work, and intelligent customer service, the model can improve work efficiency and reduce labor costs. For scenarios that require multimodal interaction, such as smart driving and smart home, it can also provide a more natural and convenient user experience.

Example usage scenarios:

1. Automated testing: UI-TARS-7B-SFT can automatically identify and operate interface elements and complete complex testing tasks, helping to ensure software quality.

2. Intelligent office: In an office environment, the model can operate office software automatically according to user instructions, for example generating reports or sorting data, which improves work efficiency.

3. Intelligent customer service: In customer service scenarios, UI-TARS-7B-SFT can automatically operate the relevant interface based on the user's questions, provide accurate answers, and improve customer satisfaction.

Product Features:

Strong visual perception: Performs well across a variety of visual tasks and accurately identifies interface elements.

Efficient semantic understanding: Accurately understands natural language instructions and carries out complex tasks.

Accurate interface positioning: Quickly locates target elements in complex GUI environments to ensure accurate operations.

End-to-end task automation: Requires no predefined workflow, enabling full automation from the start of a task to its end.

Multimodal input support: Processes multiple types of data, such as images and text, at the same time to suit different interaction needs.

Memory and reasoning ability: Reasons and makes decisions based on historical interaction information, which makes its interactions more intelligent.

Multitasking: Switches flexibly between multiple tasks to improve work efficiency.

Good scalability: Can be customized and optimized for different needs to suit diverse application scenarios.
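To make the "memory and reasoning" feature more concrete, here is a minimal sketch of how an application might keep a bounded record of past interactions and include it in the context for the next decision. This is an illustration under assumptions, not the model's internal mechanism: the `Step` and `InteractionHistory` names, the text prompt layout, and the action strings such as `click(x=120, y=340)` are all hypothetical.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Step:
    """One past interaction: what the agent saw and what it did."""
    observation: str  # e.g. a screenshot path or a short screen description
    action: str       # hypothetical action string, e.g. "click(x=120, y=340)"


class InteractionHistory:
    """Keeps only the most recent `max_steps` interactions (hypothetical helper)."""

    def __init__(self, max_steps: int = 5):
        # A deque with maxlen silently drops the oldest entry when full.
        self._steps = deque(maxlen=max_steps)

    def add(self, observation: str, action: str) -> None:
        self._steps.append(Step(observation, action))

    def as_prompt(self, instruction: str) -> str:
        """Render the instruction plus recent history as plain-text context."""
        lines = [f"Task: {instruction}"]
        for i, step in enumerate(self._steps, start=1):
            lines.append(f"Step {i}: saw {step.observation}; did {step.action}")
        return "\n".join(lines)


history = InteractionHistory(max_steps=2)
history.add("login page", "type(text='alice')")
history.add("login page, name filled", "click(x=120, y=340)")
history.add("dashboard", "click(x=40, y=40)")  # the oldest step is dropped here
prompt = history.as_prompt("Open the settings page")
print(prompt)
```

Capping the history keeps the context short; how many past steps the model can actually use is something to check against its own documentation.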

How to use:

1. Prepare the GUI: Make sure the GUI you want to interact with is ready.

2. Load the model: Load UI-TARS-7B-SFT into a supported framework, such as Hugging Face Transformers.

3. Provide input: Supply the input data, such as natural language instructions or images.

4. Model processing: The model perceives, reasons, and makes decisions based on the input data, then generates the corresponding operation instructions.

5. Execute the task: Send the operation instructions to the GUI to complete the interaction.

6. Tune the results: Adjust model parameters as needed to improve interaction results.
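The steps above can be sketched as a simple perceive, reason, act loop. The example below is a rough outline under stated assumptions rather than the official integration: a scripted stub stands in for the loaded model (step 2), `FakeGUI` stands in for a real GUI driver, and the action syntax (`click(x=..., y=...)`, `type(text='...')`, `finished()`) is invented for illustration and may differ from what UI-TARS-7B-SFT actually emits.

```python
import re

# Hypothetical action syntax used only in this sketch.
CLICK_RE = re.compile(r"click\(x=(\d+),\s*y=(\d+)\)")
TYPE_RE = re.compile(r"type\(text='([^']*)'\)")


def parse_action(raw):
    """Turn a raw action string into a (name, arguments) pair."""
    raw = raw.strip()
    match = CLICK_RE.fullmatch(raw)
    if match:
        return "click", {"x": int(match.group(1)), "y": int(match.group(2))}
    match = TYPE_RE.fullmatch(raw)
    if match:
        return "type", {"text": match.group(1)}
    if raw == "finished()":
        return "finished", {}
    raise ValueError(f"unrecognized action: {raw!r}")


class FakeGUI:
    """Stand-in for a real GUI driver; it only records what it is asked to do."""

    def __init__(self):
        self.log = []

    def screenshot(self):
        # A real driver would return image data; a description is enough here.
        return f"screen after {len(self.log)} actions"

    def execute(self, name, args):
        self.log.append((name, args))


def run_task(instruction, model, gui, max_steps=10):
    """Loop until the model reports completion or the step budget runs out."""
    for _ in range(max_steps):
        observation = gui.screenshot()         # step 1: current GUI state
        raw = model(instruction, observation)  # steps 3-4: input in, action out
        name, args = parse_action(raw)
        if name == "finished":
            return True
        gui.execute(name, args)                # step 5: act on the GUI
    return False


# Scripted stub in place of the real model; it replays fixed actions in order.
scripted = iter(["click(x=120, y=340)", "type(text='hello')", "finished()"])


def stub_model(instruction, observation):
    return next(scripted)


gui = FakeGUI()
done = run_task("Say hello in the chat box", stub_model, gui)
print(done, gui.log)
```

In a real setup, `stub_model` would be replaced by a call into the loaded model, and `FakeGUI` by a driver that captures screenshots and sends mouse and keyboard events. The `max_steps` cap is a design choice to stop a task that never reports completion.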

By following these steps, you can use UI-TARS-7B-SFT to automate GUI interaction efficiently.