What is UniAnimate?

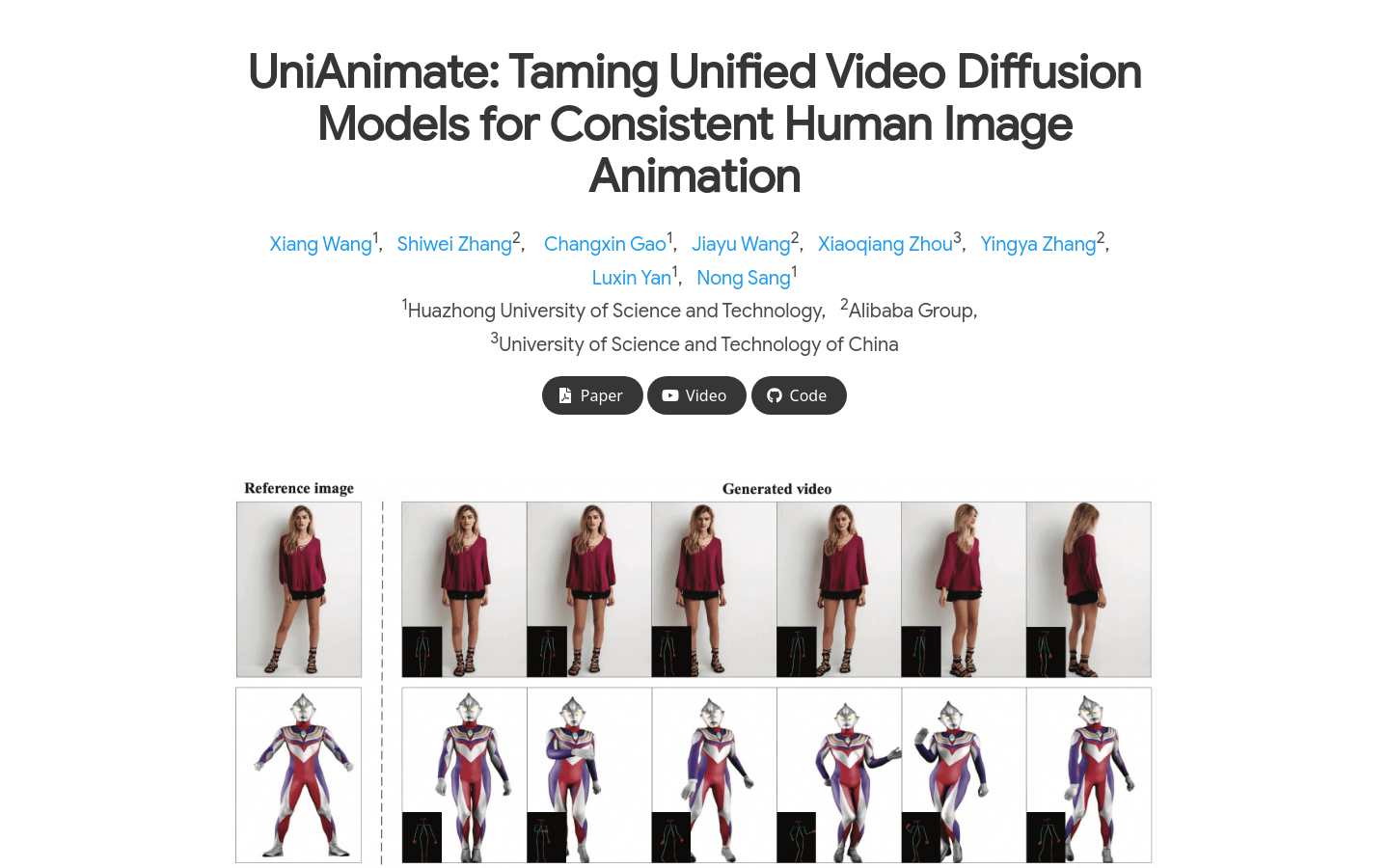

UniAnimate is a video generation framework designed for creating high-quality character animations. It is built on a unified video diffusion model that maps the reference image, the target poses, and the noised video into the same feature space, which simplifies optimization and keeps the generated video temporally coherent. To support long-sequence video generation, the model accepts either random noise or a first-frame condition as input, which significantly improves its ability to generate long videos. It also introduces a temporal modeling architecture based on state space models as a replacement for the computationally expensive temporal Transformer, which further improves efficiency.

Who needs UniAnimate?

UniAnimate is mainly aimed at researchers and developers in the fields of computer vision and graphics, especially those specializing in character animation and video generation. It is ideal for the following application scenarios:

Filmmaking: Generate high-quality character animations to enhance visual effects.

Game development: Create coherent character action sequences to enhance the game experience.

Virtual reality: Create realistic character motion to enhance immersion.

Core features of UniAnimate

1. Reference image processing: Use the CLIP encoder and VAE encoder to extract latent features of the reference image.

2. Pose guidance integration: Integrate the reference pose representation into the final reference guidance to help the model learn the human body structure.

3. Target pose encoding: The target pose sequence is encoded through a pose encoder and combined with noise input.

4. Unified video diffusion model: The combined inputs are fed into the model, which removes the noise and generates a coherent video.

5. Flexible temporal module: Supports either a temporal Transformer or temporal Mamba to meet different computing needs.

6. Video output: Use the VAE decoder to convert the generated latent video into pixel-level output.
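To make the trade-off behind the flexible temporal module more concrete, here is a rough sketch of how the cost of mixing information across frames grows with sequence length. It relies only on the general facts that self-attention compares every frame with every other frame, while a state space model such as Mamba performs one state update per frame. The function names, the arbitrary cost units, and the budget-based selection policy are all hypothetical and are not part of UniAnimate.

```python
def temporal_mixing_cost(num_frames: int, module: str) -> int:
    """Rough per-layer cost of mixing information across frames.

    Arbitrary units; constants and the hidden dimension are ignored.
    """
    if module == "transformer":
        # Self-attention: every frame attends to every frame.
        return num_frames * num_frames
    if module == "mamba":
        # State space model: one recurrent state update per frame.
        return num_frames
    raise ValueError(f"unknown temporal module: {module!r}")


def pick_temporal_module(num_frames: int, max_cost: int) -> str:
    """Hypothetical policy: use the Transformer while it fits the budget."""
    if temporal_mixing_cost(num_frames, "transformer") <= max_cost:
        return "transformer"
    return "mamba"


if __name__ == "__main__":
    for frames in (16, 32, 64, 128):
        attn = temporal_mixing_cost(frames, "transformer")
        ssm = temporal_mixing_cost(frames, "mamba")
        print(frames, attn, ssm, pick_temporal_module(frames, max_cost=1024))
```

Doubling the number of frames doubles the state space cost but quadruples the attention cost, which is why the linear-time option becomes more attractive as sequences get longer.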

How to use UniAnimate?

1. Prepare a reference image and a target pose sequence.

2. Use the CLIP encoder and VAE encoder to extract latent features of the reference image.

3. Combine the reference pose representation with the latent features to form the reference guidance.

4. Encode the target pose sequence with the pose encoder and combine it with the noised video.

5. Feed the combined inputs into the unified video diffusion model for denoising.

6. Select the temporal module (temporal Transformer or temporal Mamba) as needed.

7. Use the VAE decoder to convert the denoised latent video into the final pixel-level video output.
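The steps above can be traced as a piece of shape bookkeeping, which shows where each input enters and how the frame count changes along the way. This is only an illustrative sketch, not UniAnimate's actual code or API: the `Shape` class and `run_pipeline` function are hypothetical, the latent channel count (4) and spatial downsampling factor (8) are assumed values typical of Stable Diffusion-style VAEs, and placing the reference guidance in front of the video along the frame axis is an assumption about how the inputs share one feature space. The CLIP embedding is left out because it is a vector rather than a frame-shaped tensor.

```python
from dataclasses import dataclass

# Assumed values, typical of Stable Diffusion-style VAEs; not confirmed for UniAnimate.
LATENT_CHANNELS = 4
DOWNSAMPLE = 8


@dataclass(frozen=True)
class Shape:
    frames: int
    channels: int
    height: int
    width: int


def latent_shape(frames: int, height: int, width: int) -> Shape:
    """Shape of a frame-like tensor after encoding into the latent space."""
    return Shape(frames, LATENT_CHANNELS, height // DOWNSAMPLE, width // DOWNSAMPLE)


def run_pipeline(height: int, width: int, num_target_poses: int,
                 temporal_module: str = "transformer") -> dict:
    """Trace tensor shapes through steps 1 to 7 (hypothetical helper)."""
    if temporal_module not in ("transformer", "mamba"):
        raise ValueError(f"unknown temporal module: {temporal_module!r}")

    # Steps 1-2: one reference image, encoded by the VAE encoder.
    ref_latent = latent_shape(1, height, width)
    # Step 3: the reference pose is encoded to the same shape and merged in.
    ref_pose = latent_shape(1, height, width)
    assert ref_pose == ref_latent
    ref_guidance = ref_latent
    # Step 4: target poses are encoded and combined with noise of equal shape.
    target_pose = latent_shape(num_target_poses, height, width)
    noise = latent_shape(num_target_poses, height, width)
    assert target_pose == noise
    noised_video = noise
    # Step 5 (assumption): reference guidance is prepended along the frame axis.
    model_input = Shape(ref_guidance.frames + noised_video.frames,
                        noised_video.channels, noised_video.height, noised_video.width)
    # Step 6: the temporal module mixes along the frame axis; shapes are unchanged.
    # After denoising, only the target frames are kept.
    denoised = Shape(model_input.frames - ref_guidance.frames,
                     model_input.channels, model_input.height, model_input.width)
    # Step 7: the VAE decoder maps latents back to RGB pixels.
    video = Shape(denoised.frames, 3,
                  denoised.height * DOWNSAMPLE, denoised.width * DOWNSAMPLE)
    return {"model_input": model_input, "denoised": denoised, "video": video}


if __name__ == "__main__":
    for name, shape in run_pipeline(768, 512, num_target_poses=16).items():
        print(name, shape)
```

In this sketch the number of output frames always equals the number of target poses, and the reference image only contributes guidance; it never appears as an extra output frame.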

Why choose UniAnimate?

UniAnimate performs well in both quantitative and qualitative evaluations, outperforming existing methods. It generates high-quality long-sequence videos and, by applying the first-frame conditioning strategy iteratively, can produce highly consistent one-minute videos. Whether the goal is film production, game development, or a virtual reality experience, UniAnimate offers an efficient and reliable solution.
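One way to picture the iterative first-frame conditioning strategy is as a chain of short segments, where the last frame of each segment becomes the first-frame condition of the next. The sketch below is a minimal illustration under that assumption. `Segment`, `plan_segments`, `generate_long_video`, and the `generate_segment` callback are hypothetical names, and the callback stands in for a real model call that returns one frame per pose.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass(frozen=True)
class Segment:
    start: int                      # index of the first pose in the segment
    end: int                        # one past the last pose in the segment
    condition_frame: Optional[int]  # index of the frame used as first-frame condition


def plan_segments(total_frames: int, segment_len: int) -> List[Segment]:
    """Split a long sequence into segments that overlap by one frame."""
    if total_frames < 1 or segment_len < 2:
        raise ValueError("need total_frames >= 1 and segment_len >= 2")
    end = min(segment_len, total_frames)
    # The first segment starts from random noise only.
    segments = [Segment(0, end, None)]
    while end < total_frames:
        cond = end - 1  # last frame generated so far
        end = min(cond + segment_len, total_frames)
        segments.append(Segment(cond, end, cond))
    return segments


def generate_long_video(poses: list, segment_len: int,
                        generate_segment: Callable[[list, object], list]) -> list:
    """Generate segment by segment, reusing the last frame as the next condition."""
    video: list = []
    for seg in plan_segments(len(poses), segment_len):
        first_frame = None if seg.condition_frame is None else video[seg.condition_frame]
        frames = generate_segment(poses[seg.start:seg.end], first_frame)
        # Later segments repeat the conditioning frame, so drop their first frame.
        video.extend(frames if seg.condition_frame is None else frames[1:])
    return video


if __name__ == "__main__":
    # Stand-in generator: labels each pose instead of running a model.
    def fake(chunk, first_frame):
        return [f"frame-{p}" for p in chunk]

    print(plan_segments(10, 4))
    print(generate_long_video(list(range(10)), 4, fake))
```

Because every segment after the first contributes one frame fewer than its length, the stitched video has exactly one frame per input pose.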

Summary

UniAnimate is a powerful and flexible video generation tool designed for character animation. Its unified architecture and efficient processing pipeline help users create high-quality, coherent long-sequence videos. If you are looking for a tool to improve your character animation, UniAnimate is worth a try.