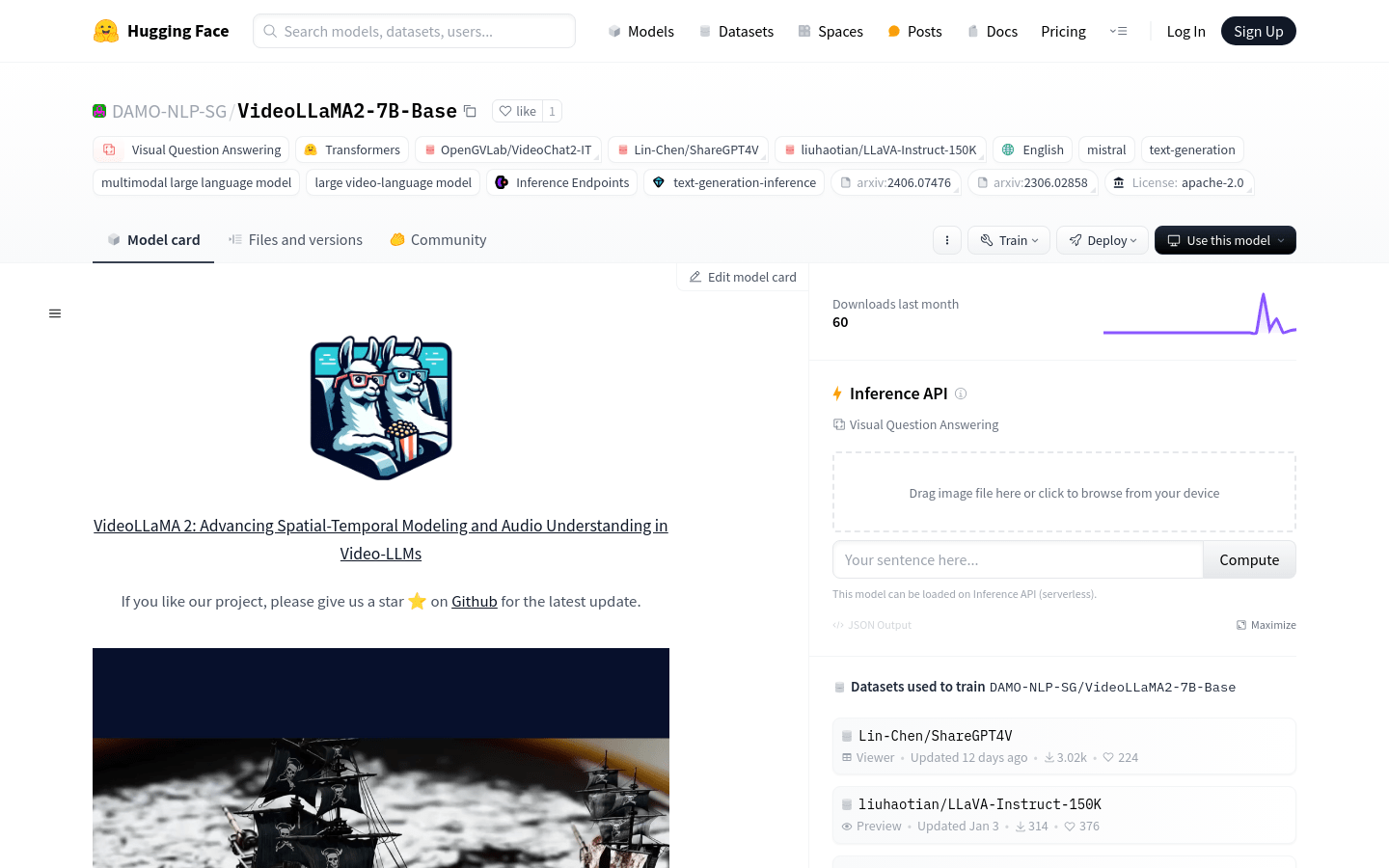

What is VideoLLaMA2-7B-Base?

VideoLLaMA2-7B-Base is a large video language model developed by DAMO-NLP-SG for video understanding: it takes video as input and generates text about it. It is designed for visual question answering and video caption generation, and builds on improved spatial-temporal modeling and audio understanding.

Who Can Use This Model?

This model suits researchers analyzing video content, creators who want to automate video captioning, and developers integrating video analysis tools into their applications.

Example Scenarios:

Researchers can analyze social media videos to study public sentiment.

Video creators can automatically generate captions for instructional videos to improve accessibility.

Developers can offer automated video summary services by integrating this model into their applications.

Key Features:

Visual Question Answering: Answers natural-language questions about video content.

Video Caption Generation: Automatically generates descriptive captions for videos.

Multimodal Processing: Analyzes text and visual information together.

Spatial-Temporal Modeling: Captures both what appears in individual frames (spatial features) and how it changes over time (temporal features).

Audio Understanding: Enhances the model's ability to interpret audio in videos.

Model Inference: Provides an inference interface for generating outputs from video and text inputs.

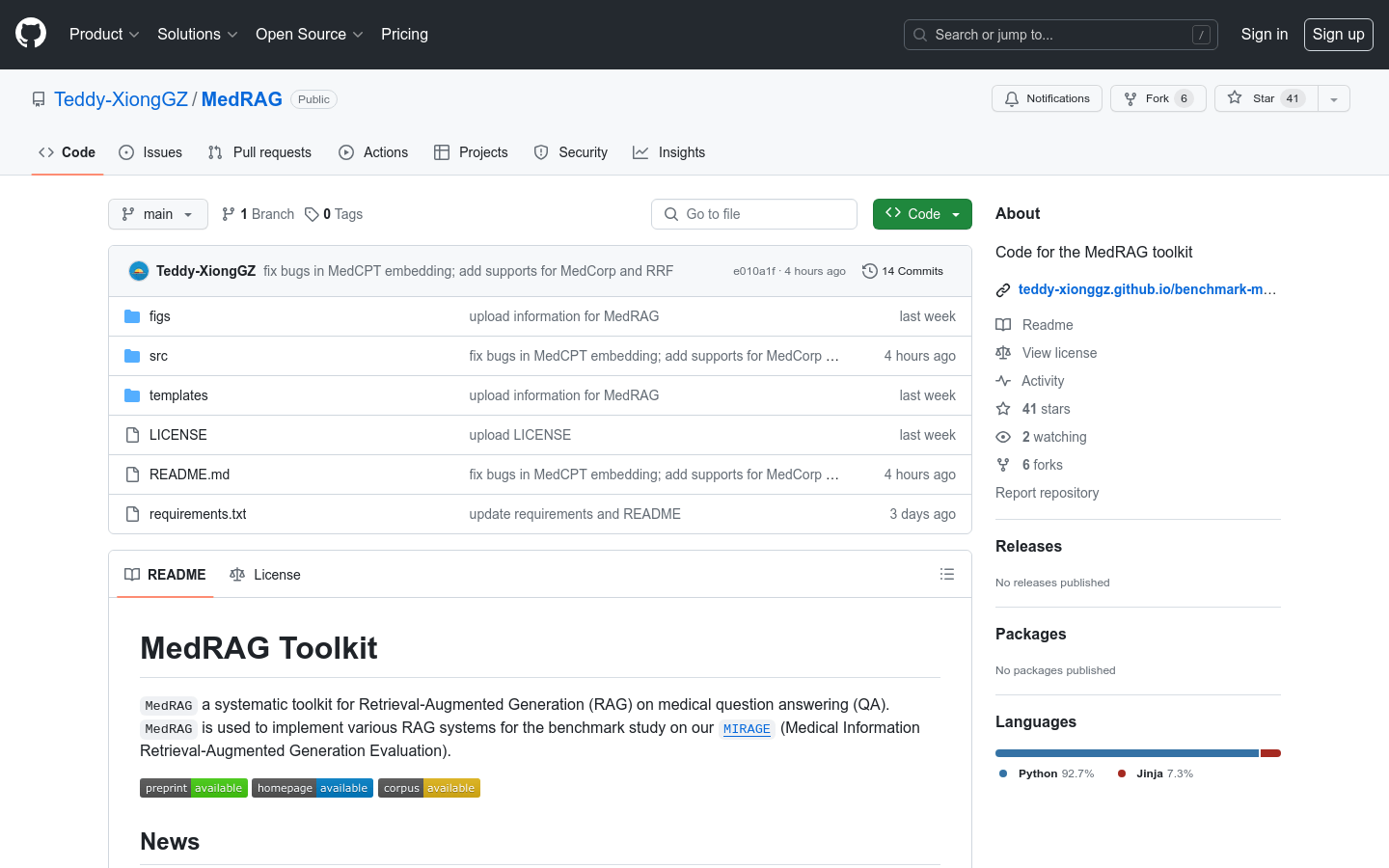

Code Support: Offers code for training, evaluation, and inference to facilitate further development.
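Spatial-temporal modeling starts from a set of frames sampled across the whole clip. As a minimal sketch, assuming simple uniform sampling (the actual frame count and sampling strategy are defined in the VideoLLaMA2 code and configuration, not here), the hypothetical helper `sample_frame_indices` below picks one frame from the middle of each of `num_samples` equal-length segments:

```python
def sample_frame_indices(total_frames: int, num_samples: int) -> list[int]:
    """Pick `num_samples` frame indices spread evenly across a clip.

    Hypothetical helper for illustration; not part of the VideoLLaMA2 codebase.
    Each index is the midpoint of one of `num_samples` equal-length segments,
    so the samples cover the whole video instead of clustering at the start.
    """
    if total_frames <= 0:
        raise ValueError("total_frames must be positive")
    if num_samples <= 0:
        raise ValueError("num_samples must be positive")
    segment = total_frames / num_samples
    # Clamp to the last valid index in case rounding overshoots.
    return [
        min(int(segment * i + segment / 2), total_frames - 1)
        for i in range(num_samples)
    ]


print(sample_frame_indices(160, 8))  # [10, 30, 50, 70, 90, 110, 130, 150]
```

In practice, `total_frames` would come from a video decoder, and the selected frames would then be preprocessed and passed to the model's vision encoder. When a clip has fewer frames than requested, some indices repeat, which keeps the number of inputs fixed.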

Getting Started Guide:

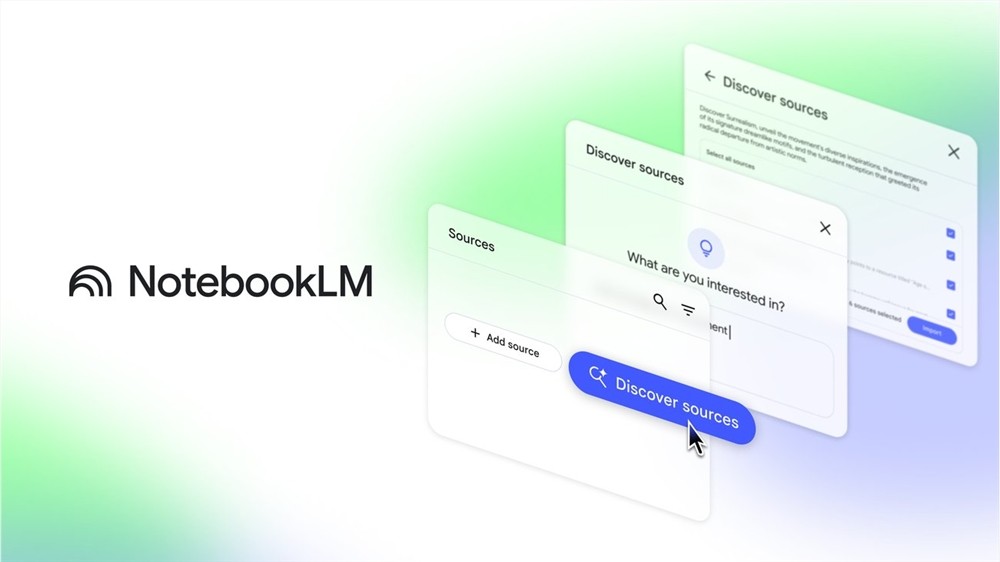

1. Go to the Hugging Face Hub and open the VideoLLaMA2-7B-Base model page.

2. Read the model documentation to understand input and output formats and usage restrictions.

3. Download or clone the model's code repository for local deployment or further development.

4. Install necessary dependencies and set up the environment as described in the code repository.

5. Run the model's inference code on your video files and questions to obtain the model's output.

6. Analyze the model output and adjust parameters or conduct further development as needed.
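Steps 5 and 6 can be organized as a small batch loop. The sketch below assumes a hypothetical `answer_fn(video_path, question)` callable that wraps whatever inference entry point the VideoLLaMA2 repository provides; `run_batch`, `QAResult`, and `to_jsonl` are illustrative names, not part of the project.

```python
import json
from dataclasses import dataclass
from pathlib import Path
from typing import Callable, Iterable

# Hypothetical model-call signature: (video_path, question) -> answer text.
# Replace the stub you pass in with the repository's actual inference code.
AnswerFn = Callable[[Path, str], str]


@dataclass
class QAResult:
    video: Path
    question: str
    answer: str


def run_batch(jobs: Iterable[tuple[Path, str]], answer_fn: AnswerFn) -> list[QAResult]:
    """Send each (video, question) pair to `answer_fn` and collect the answers."""
    results = []
    for video, question in jobs:
        answer = answer_fn(video, question).strip()  # trim stray whitespace
        results.append(QAResult(video, question, answer))
    return results


def to_jsonl(results: list[QAResult]) -> str:
    """Serialize results as JSON Lines for later analysis (step 6)."""
    return "\n".join(
        json.dumps({"video": str(r.video), "question": r.question, "answer": r.answer})
        for r in results
    )


# Usage with a stub in place of the real model:
def fake_answer(video: Path, question: str) -> str:
    return f"  {video.name}: {question}  "


print(to_jsonl(run_batch([(Path("demo.mp4"), "What happens?")], fake_answer)))
```

Passing the model call in as a function keeps the loop testable with a stub and lets you swap in the real inference code without changing the collection or export logic.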