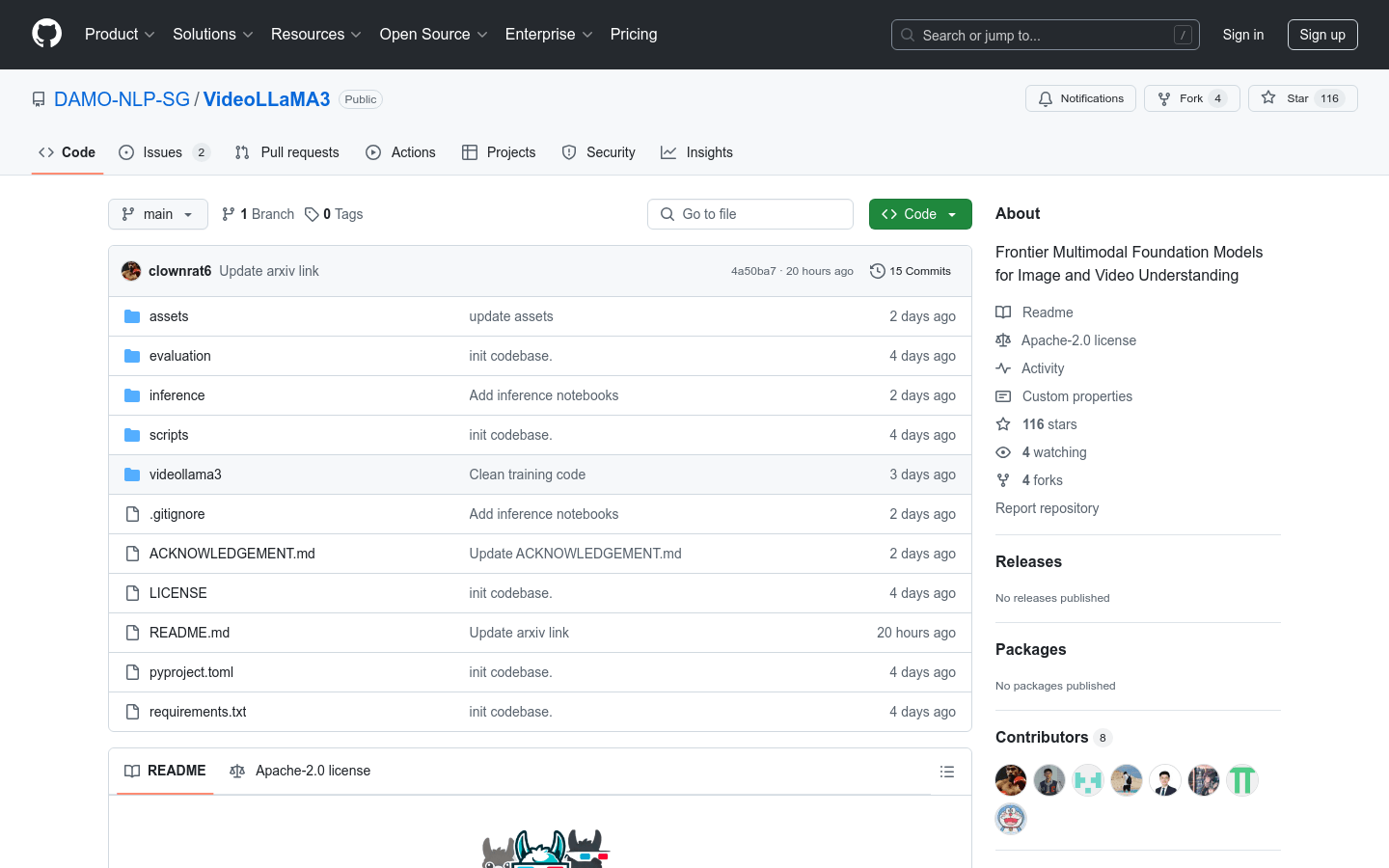

What is VideoLLaMA3?

VideoLLaMA3 is a multimodal foundation model for image and video understanding, developed by the DAMO-NLP-SG team. It pairs a SigLIP-based visual encoder with a language model built on the Qwen2.5 architecture. Its strength on complex visual and language tasks comes from efficient spatial-temporal modeling, strong multimodal fusion, and optimized training on large datasets.

Who Can Benefit from VideoLLaMA3?

Researchers, developers, and businesses that need deep video understanding can benefit from this model. Its multimodal comprehension lets them handle complex visual and language tasks with less manual effort.

Example Scenarios:

Video Content Analysis: Users can upload videos and receive detailed natural language descriptions, helping them quickly understand the content.

Visual Question Answering: Users can ask questions related to videos or images and get accurate answers.

Multimodal Applications: Combining video and text data can improve results on content generation or classification tasks.

Key Features:

Accepts video and image inputs and generates natural language descriptions.

Offers multiple pre-trained models, such as 2B and 7B parameter versions.

Optimized for handling long video sequences with advanced temporal modeling.

Supports multilingual generation for cross-language video understanding tasks.

Provides complete inference code and online demos for easy setup.

Supports local deployment and cloud inference to fit various use cases.

Includes detailed performance evaluations and benchmark test results to help choose the right model version.
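When choosing between the 2B and 7B versions, a rough estimate of weight memory can help alongside the benchmark results. The sketch below is only back-of-the-envelope arithmetic: it treats "2B" and "7B" as nominal parameter counts and assumes 16-bit weights (2 bytes per parameter). The function name `weight_memory_gib` is made up for this illustration, and the estimate ignores activations, the key-value cache, and the visual tokens produced from video frames, all of which add to real memory use.

```python
def weight_memory_gib(num_params: float, bytes_per_param: int = 2) -> float:
    """Approximate memory needed for the model weights alone, in GiB."""
    return num_params * bytes_per_param / 1024**3


# Nominal parameter counts (assumption: the exact counts differ slightly).
for name, params in {"2B": 2e9, "7B": 7e9}.items():
    print(f"{name}: about {weight_memory_gib(params):.1f} GiB of weights at 16-bit precision")
```

Under these assumptions, the 7B version needs roughly 3.5 times the weight memory of the 2B version, so the smaller model is the natural starting point on a single consumer GPU.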

Getting Started Guide:

1. Install the necessary dependencies, such as PyTorch and Hugging Face Transformers.

2. Clone the VideoLLaMA3 GitHub repository and install project dependencies.

3. Download the pre-trained model weights, choosing between the 2B and 7B versions.

4. Use the provided inference code or the online demo to test the model with video or image data.

5. Adjust inference settings or fine-tune the model for your specific application.

6. Deploy the model locally or in the cloud for real-world applications.
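
Steps 3 and 4 could look roughly like the following when the weights are loaded through Hugging Face Transformers. This is a minimal sketch, not the project's official inference script. Several things here are assumptions to check against the repository README: the model ID `DAMO-NLP-SG/VideoLLaMA3-7B`, the conversation schema (a `video` entry with `video_path`, `fps`, and `max_frames`), and the `conversation=` keyword accepted by the processor. The helper names `build_conversation` and `answer` are invented for this example. It also assumes `accelerate` is installed so that `device_map="auto"` works.

```python
from typing import Any

# Assumption: Hugging Face model ID; a 2B variant may be substituted.
MODEL_ID = "DAMO-NLP-SG/VideoLLaMA3-7B"


def build_conversation(
    video_path: str, question: str, fps: int = 1, max_frames: int = 128
) -> list[dict[str, Any]]:
    """Build a chat-style request: one video plus one text question.

    Assumption: this schema mirrors the project's published examples.
    """
    return [
        {"role": "system", "content": "You are a helpful assistant."},
        {
            "role": "user",
            "content": [
                {
                    "type": "video",
                    "video": {"video_path": video_path, "fps": fps, "max_frames": max_frames},
                },
                {"type": "text", "text": question},
            ],
        },
    ]


def answer(video_path: str, question: str, model_id: str = MODEL_ID) -> str:
    """Load the model and generate an answer about the video."""
    # Heavy imports stay local so build_conversation works without a GPU.
    import torch
    from transformers import AutoModelForCausalLM, AutoProcessor

    # trust_remote_code is needed because the model ships custom code.
    model = AutoModelForCausalLM.from_pretrained(
        model_id, trust_remote_code=True, torch_dtype=torch.bfloat16, device_map="auto"
    )
    processor = AutoProcessor.from_pretrained(model_id, trust_remote_code=True)

    # Assumption: the custom processor accepts a `conversation` keyword.
    inputs = processor(
        conversation=build_conversation(video_path, question), return_tensors="pt"
    )
    # Move tensors to the model's device; leave non-tensor entries untouched.
    inputs = {
        k: v.to(model.device) if isinstance(v, torch.Tensor) else v
        for k, v in inputs.items()
    }
    if "pixel_values" in inputs:
        inputs["pixel_values"] = inputs["pixel_values"].to(torch.bfloat16)

    output_ids = model.generate(**inputs, max_new_tokens=256)
    return processor.batch_decode(output_ids, skip_special_tokens=True)[0].strip()
```

With a local file, a call such as `answer("clip.mp4", "Describe this video.")` would return the generated description as a string; `clip.mp4` is a placeholder path. Lowering `fps` or `max_frames` reduces the number of sampled frames, which is one of the simpler settings to adjust in step 5 for long videos.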