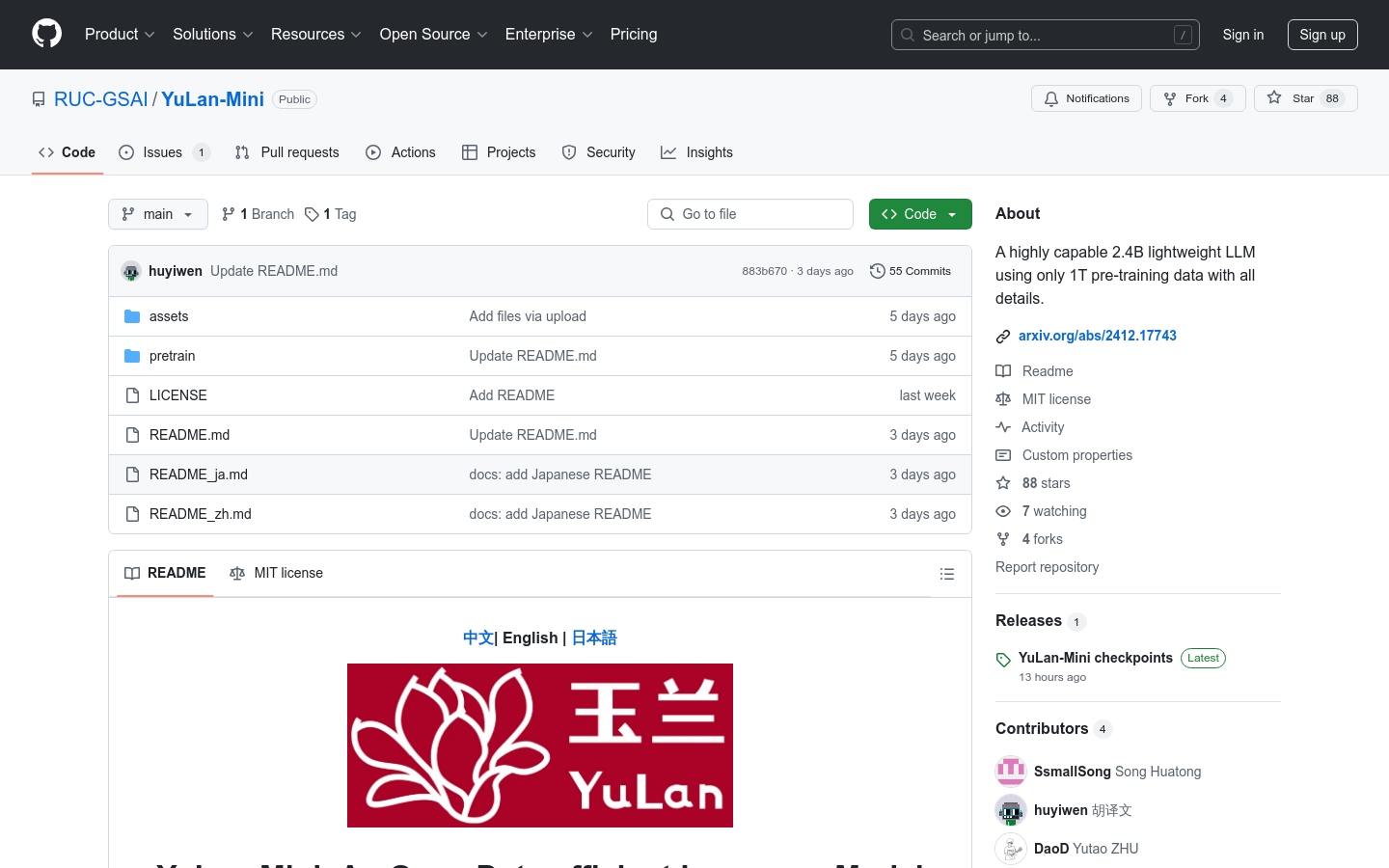

YuLan-Mini Introduction

YuLan-Mini is a lightweight language model developed by the AI Box team at Renmin University of China. With 2.4 billion parameters, it achieves performance comparable to models trained on far more data, despite being pre-trained on only 1.08 trillion tokens. It is particularly strong on mathematics and coding tasks. To support reproducibility, the team has open-sourced the related pre-training resources.

Target Audience

The primary users are researchers, developers, and businesses that need a capable model but have limited compute resources. YuLan-Mini's lightweight design makes it a good fit for small enterprises and academic research.

Example Use Cases

Researchers: Use YuLan-Mini for automatic math problem solving and verification.

Developers: Leverage YuLan-Mini to generate high-quality code snippets, improving development efficiency.

Educational Institutions: Employ YuLan-Mini to provide personalized learning materials and answer student queries.

Product Features

Lightweight Model: 2.4 billion parameters, which keeps compute and memory requirements modest.

Efficient Data Usage: Pre-trained on only 1.08 trillion tokens.

Specialized Skills: Strong in mathematical and programming tasks.

Open Source: Pre-training resources, including code and data, are openly available to promote transparency and reproducibility.

Long Context Support: Handles context lengths of up to 28K tokens for complex tasks.

Model Weights and Optimizer States: Provided to support research and further training.

Versatile Usage: Supports various scenarios such as pre-training, fine-tuning, and learning rate annealing.
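To make the "lightweight" claim concrete, the following is a rough back-of-the-envelope sketch of how much memory the weights alone would occupy at 2.4 billion parameters. The helper name `weight_memory_gib` and the chosen number formats are illustrative assumptions, and the estimate ignores activations, the attention cache, and optimizer states, which all add to the real footprint.

```python
# Bytes needed to store one parameter in some common numeric formats.
BYTES_PER_PARAM = {"float32": 4, "bfloat16": 2, "int8": 1}


def weight_memory_gib(num_params: float, dtype: str) -> float:
    """Return the memory needed to hold the weights alone, in GiB."""
    return num_params * BYTES_PER_PARAM[dtype] / 2**30


NUM_PARAMS = 2.4e9  # parameter count quoted in the feature list

for dtype in BYTES_PER_PARAM:
    print(f"{dtype:>8}: {weight_memory_gib(NUM_PARAMS, dtype):.1f} GiB")
# prints:
#  float32: 8.9 GiB
# bfloat16: 4.5 GiB
#     int8: 2.2 GiB
```

Under these assumptions, the weights fit in under 5 GiB in half precision, which is why a model of this size is practical on a single consumer GPU.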

Getting Started Guide

1. Visit the YuLan-Mini GitHub page to explore project details and documentation.

2. Follow the guidelines to download and install the necessary pre-trained models and code.

3. Use the Hugging Face Transformers library to load the model and tokenizer and run a quick inference test.

4. Fine-tune or continue training the model as needed to fit specific tasks.

5. Apply the model in real-world applications like text generation and question answering systems.

6. Engage in community discussions, share feedback, and suggest improvements.
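The loading and inference steps above might look roughly like the sketch below. It assumes the `transformers` and `torch` packages are installed and that the model is published under the repository id `yulan-team/YuLan-Mini`; treat that id, the function names, and the generation settings as assumptions to verify against the project's own documentation.

```python
# Assumed Hugging Face repository id; confirm it on the project's GitHub page.
MODEL_ID = "yulan-team/YuLan-Mini"


def load_model(model_id: str = MODEL_ID):
    """Load the tokenizer and model (downloads the weights on first use)."""
    # Imported lazily so this file can be imported without the heavy dependencies.
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(model_id, torch_dtype=torch.bfloat16)
    model.eval()  # inference mode: disables dropout
    return tokenizer, model


def generate(tokenizer, model, prompt: str, max_new_tokens: int = 100) -> str:
    """Greedy-decode a continuation of `prompt` and return the full text."""
    inputs = tokenizer(prompt, return_tensors="pt")
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens, do_sample=False)
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)
```

A typical call would be `tokenizer, model = load_model()` followed by `print(generate(tokenizer, model, "Renmin University of China is"))`. Greedy decoding is used here only to keep the test deterministic; sampling settings can be changed for real applications.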