Canny ControlNet is one of the most commonly used ControlNet models. It uses the Canny edge detection algorithm to extract edge information from an image, and then uses that edge information to guide image generation.

Structure preservation: maintains the basic structure and outline of the original image well

Flexibility: the amount of edge detail passed to the model can be tuned through the edge detection thresholds, and the guidance intensity through the ControlNet parameters

Wide applicability: suits scenarios such as sketches, line drawings, and architectural design

Stable results: Canny guidance tends to be more stable and predictable than many other ControlNet models

Because this workflow uses some newer ComfyUI nodes, update ComfyUI to the latest version first.

For instructions, see the ComfyUI update tutorial.

First, install the following models:

| Model Type | Model File | Download |
|---|---|---|
| SD1.5 base model | dreamshaper_8.safetensors | Civitai |
| Canny ControlNet model | control_v11p_sd15_canny.pth | Hugging Face |
| VAE model (optional) | vae-ft-mse-840000-ema-pruned.safetensors | Hugging Face |

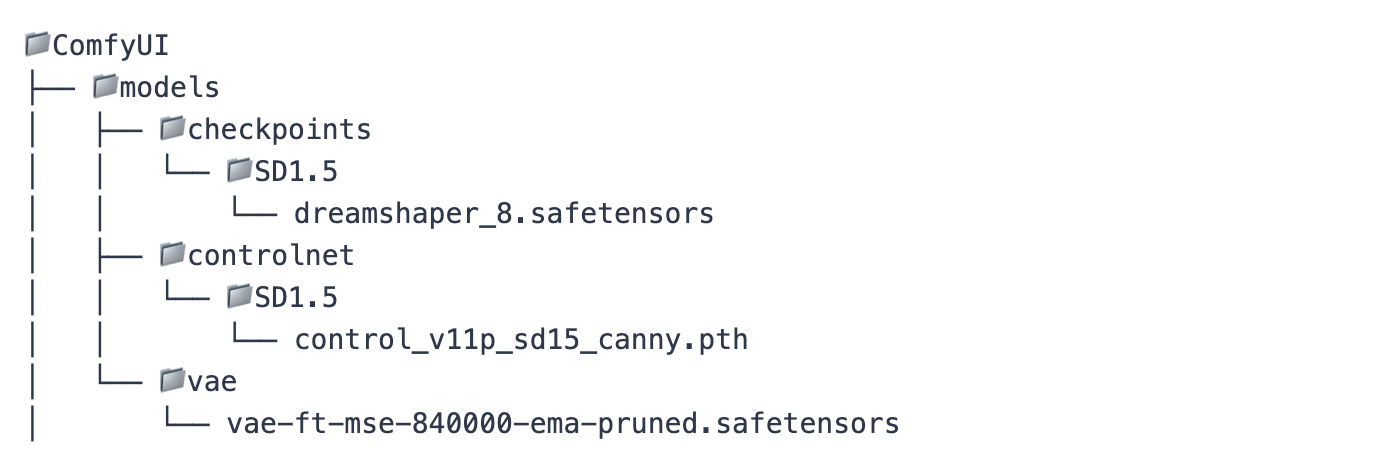

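Place the model files in the following folders under your ComfyUI installation:

ComfyUI/models/checkpoints/dreamshaper_8.safetensors

ComfyUI/models/controlnet/control_v11p_sd15_canny.pth

ComfyUI/models/vae/vae-ft-mse-840000-ema-pruned.safetensors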
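If you want to double-check the placement before launching ComfyUI, the following minimal Python sketch looks for each file from the table, assuming ComfyUI's default layout of models/checkpoints, models/controlnet, and models/vae under the installation root. The function name missing_models and the root path you pass in are illustrative, not part of ComfyUI.

```python
from __future__ import annotations

from pathlib import Path

# Assumed default ComfyUI layout: <root>/models/<subfolder>/<file>.
EXPECTED_MODELS = {
    "checkpoints": ["dreamshaper_8.safetensors"],
    "controlnet": ["control_v11p_sd15_canny.pth"],
    "vae": ["vae-ft-mse-840000-ema-pruned.safetensors"],  # optional
}


def missing_models(comfy_root: str | Path) -> list[str]:
    """Return expected model paths (relative to comfy_root) that are not files."""
    root = Path(comfy_root)
    missing = []
    for subdir, names in EXPECTED_MODELS.items():
        for name in names:
            rel = Path("models") / subdir / name
            if not (root / rel).is_file():
                missing.append(rel.as_posix())
    return missing
```

For example, missing_models("ComfyUI") returns an empty list once all three files are in place; the VAE entry can be ignored if you skip the optional VAE.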

3. SD1.5 Canny ControlNet workflow file download

SD1.5 Canny ControlNet Workflow

Save the image below locally. After loading the SD1.5 Canny ControlNet workflow, select this image in the LoadImage node.

This workflow consists of the following parts:

1. Model loading: loads the SD model, the VAE model, and the ControlNet model

2. Prompt encoding: processes the positive and negative prompts

3. Image processing: loads the image and runs Canny edge detection

4. ControlNet control: applies the edge information to the generation process

5. Sampling and saving: generates the final image and saves it
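To make the five parts concrete, here is a hypothetical sketch of the same graph written in ComfyUI's API (JSON) format as a Python dict, where each node has a class_type and inputs, and a link to another node's output is written as [node_id, output_index]. The node and input names reflect ComfyUI's built-in nodes as we understand them and may vary between versions; the prompts, seed, image file name, and the 512x512 EmptyLatentImage are assumptions, not values taken from the tutorial's workflow file. The dangling_links helper (also hypothetical) checks that every link points at an existing node.

```python
from __future__ import annotations

# Hypothetical API-format sketch; links are [source_node_id, output_index].
WORKFLOW = {
    # 1. Model loading
    "1": {"class_type": "CheckpointLoaderSimple",
          "inputs": {"ckpt_name": "dreamshaper_8.safetensors"}},
    "2": {"class_type": "VAELoader",
          "inputs": {"vae_name": "vae-ft-mse-840000-ema-pruned.safetensors"}},
    "3": {"class_type": "ControlNetLoader",
          "inputs": {"control_net_name": "control_v11p_sd15_canny.pth"}},
    # 2. Prompt encoding (output 1 of the checkpoint loader is CLIP)
    "4": {"class_type": "CLIPTextEncode",
          "inputs": {"text": "a watercolor house, detailed", "clip": ["1", 1]}},
    "5": {"class_type": "CLIPTextEncode",
          "inputs": {"text": "blurry, low quality", "clip": ["1", 1]}},
    # 3. Image processing (placeholder file name in ComfyUI's input folder)
    "6": {"class_type": "LoadImage", "inputs": {"image": "example.png"}},
    "7": {"class_type": "Canny",
          "inputs": {"image": ["6", 0], "low_threshold": 0.3, "high_threshold": 0.6}},
    # 4. ControlNet control: outputs 0 and 1 are the conditioned positive/negative
    "8": {"class_type": "ControlNetApplyAdvanced",
          "inputs": {"positive": ["4", 0], "negative": ["5", 0],
                     "control_net": ["3", 0], "image": ["7", 0],
                     "strength": 1.0, "start_percent": 0.0, "end_percent": 1.0}},
    # 5. Sampling and saving
    "9": {"class_type": "EmptyLatentImage",
          "inputs": {"width": 512, "height": 512, "batch_size": 1}},
    "10": {"class_type": "KSampler",
           "inputs": {"model": ["1", 0], "seed": 42, "steps": 25, "cfg": 7.5,
                      "sampler_name": "dpmpp_2m", "scheduler": "karras",
                      "positive": ["8", 0], "negative": ["8", 1],
                      "latent_image": ["9", 0], "denoise": 1.0}},
    "11": {"class_type": "VAEDecode",
           "inputs": {"samples": ["10", 0], "vae": ["2", 0]}},
    "12": {"class_type": "SaveImage",
           "inputs": {"images": ["11", 0], "filename_prefix": "canny_controlnet"}},
}


def dangling_links(workflow: dict) -> list[tuple[str, str, str]]:
    """Return (node_id, input_name, missing_source_id) for links to absent nodes."""
    problems = []
    for node_id, node in workflow.items():
        for name, value in node["inputs"].items():
            # In this format a link is a two-element list [source_id, output_index].
            if isinstance(value, list) and value[0] not in workflow:
                problems.append((node_id, name, value[0]))
    return problems
```

Reading the dict top to bottom follows the data flow: the Canny output feeds ControlNetApplyAdvanced, whose two conditioning outputs replace the raw prompts at the KSampler.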

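1. LoadImage: loads the input image

2. Canny: performs edge detection and has two important parameters:

low_threshold: the lower threshold. Pixels whose gradient strength falls below it are discarded, and pixels between the two thresholds are kept only where they connect to a strong edge, so lowering it produces longer, more continuous edges

high_threshold: the upper threshold. Pixels above it are treated as strong edges, so lowering it detects more edges and raising it keeps only the most prominent ones

3. ControlNetLoader: loads the ControlNet model

4. ControlNetApplyAdvanced: controls how the ControlNet is applied, with the following important parameters:

strength: the control strength

start_percent: the point in the sampling process (0.0 to 1.0) at which the ControlNet starts to take effect

end_percent: the point in the sampling process (0.0 to 1.0) at which the ControlNet stops taking effect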
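start_percent and end_percent describe a window over the sampling process. The sketch below approximates which KSampler steps fall inside that window by treating step i as sitting at progress i / steps. This is a simplification: as far as we understand, ComfyUI converts the percentages into noise levels (sigmas) rather than step indices, so the exact boundaries can shift with the scheduler. The function name active_steps is hypothetical.

```python
from __future__ import annotations


def active_steps(steps: int, start_percent: float, end_percent: float) -> list[int]:
    """Approximate the sampling steps a ControlNet affects.

    Simplification: step i is treated as being at progress i / steps, and the
    window is half-open, [start_percent, end_percent).
    """
    if not 0.0 <= start_percent <= end_percent <= 1.0:
        raise ValueError("expected 0 <= start_percent <= end_percent <= 1")
    return [i for i in range(steps) if start_percent <= i / steps < end_percent]
```

For example, active_steps(25, 0.2, 0.6) returns steps 5 through 14, so the edge guidance would act on 10 of the 25 steps and leave the early and late steps unguided; with the defaults (0.0, 1.0) every step is included.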

Download the workflow file for this tutorial

Click "Load" in ComfyUI, or drag the downloaded JSON file directly into ComfyUI

Prepare an image you want to process

Load the image with the LoadImage node

Recommended low_threshold range: 0.2-0.5

Recommended high_threshold range: 0.5-0.8

You can preview the edge detection result with the PreviewImage node
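The recommended ranges above are on a 0-1 scale. To see how the two thresholds interact, here is a minimal pure-Python sketch of the hysteresis step of Canny edge detection, applied to a made-up grid of gradient magnitudes. It is not ComfyUI's implementation and skips the blurring, gradient, and non-maximum suppression stages; the names hysteresis, count_edges, and MAG are illustrative.

```python
from __future__ import annotations

from collections import deque


def hysteresis(magnitude: list[list[float]], low: float, high: float) -> list[list[bool]]:
    """Canny-style hysteresis on a gradient-magnitude grid scaled to 0-1.

    Pixels >= high are strong edges. Pixels >= low are kept only if they are
    8-connected (directly or through other kept pixels) to a strong edge.
    """
    rows, cols = len(magnitude), len(magnitude[0])
    edges = [[False] * cols for _ in range(rows)]
    queue = deque()
    # Seed the search with every strong-edge pixel.
    for r in range(rows):
        for c in range(cols):
            if magnitude[r][c] >= high:
                edges[r][c] = True
                queue.append((r, c))
    # Grow edges outward into neighbouring pixels that pass the low threshold.
    while queue:
        r, c = queue.popleft()
        for dr in (-1, 0, 1):
            for dc in (-1, 0, 1):
                nr, nc = r + dr, c + dc
                if (0 <= nr < rows and 0 <= nc < cols
                        and not edges[nr][nc] and magnitude[nr][nc] >= low):
                    edges[nr][nc] = True
                    queue.append((nr, nc))
    return edges


def count_edges(edges: list[list[bool]]) -> int:
    """Number of pixels marked as edges."""
    return sum(sum(row) for row in edges)


# Toy magnitudes: one strong pixel with a faint diagonal tail,
# plus an isolated medium-strength pixel in the bottom-left corner.
MAG = [
    [0.9, 0.3, 0.0, 0.0],
    [0.0, 0.0, 0.3, 0.0],
    [0.0, 0.0, 0.0, 0.0],
    [0.4, 0.0, 0.0, 0.0],
]
```

Here count_edges(hysteresis(MAG, 0.2, 0.5)) is 3: the strong pixel keeps its faint tail, and the isolated 0.4 pixel is dropped. Raising low to 0.35 breaks the tail (1 edge pixel), while lowering high to 0.35 also promotes the isolated pixel (4 edge pixels).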

In the KSampler node:

steps: 20-30 recommended

cfg: 7-8 recommended

sampler_name: "dpmpp_2m" recommended

scheduler: "karras" recommended

In the ControlNetApplyAdvanced node:

strength: 1.0 (the default) applies the edge guidance at full strength, so the image follows the edges closely

Lower the strength value as needed to loosen the control
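The recommendations above can be collected into a small checker. This is a minimal sketch: review_settings and the settings dict keys (named after the node inputs in this tutorial) are illustrative, and the ranges are the tutorial's suggestions rather than limits enforced by ComfyUI.

```python
from __future__ import annotations

# Suggested ranges from this tutorial; starting points, not hard limits.
# strength uses 1.0 (full control) as its upper end in this sketch.
RECOMMENDED_RANGES = {
    "low_threshold": (0.2, 0.5),
    "high_threshold": (0.5, 0.8),
    "steps": (20, 30),
    "cfg": (7.0, 8.0),
    "strength": (0.0, 1.0),
}
RECOMMENDED_CHOICES = {"sampler_name": "dpmpp_2m", "scheduler": "karras"}


def review_settings(settings: dict) -> list[str]:
    """List settings that differ from the tutorial's recommendations."""
    notes = []
    for name, (lo, hi) in RECOMMENDED_RANGES.items():
        if name in settings and not lo <= settings[name] <= hi:
            notes.append(f"{name}={settings[name]} is outside {lo}-{hi}")
    for name, choice in RECOMMENDED_CHOICES.items():
        if name in settings and settings[name] != choice:
            notes.append(f"{name}={settings[name]!r}; {choice!r} is recommended")
    low, high = settings.get("low_threshold"), settings.get("high_threshold")
    if low is not None and high is not None and low >= high:
        notes.append("low_threshold should be below high_threshold")
    return notes
```

An empty list means every provided value sits inside the suggested ranges; keys that are absent are simply not checked.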

If there are too many edges: increase the threshold values

If there are too few edges: decrease the threshold values

Check the edge map with PreviewImage before generating
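The threshold rule above can be expressed as a small tuning step. In this sketch, edge_fraction is the share of pixels marked as edges in the PreviewImage output (you would measure it yourself), and the target density range and 0.05 step size are hypothetical values to adjust for your images; adjust_thresholds is not a ComfyUI function.

```python
from __future__ import annotations


def adjust_thresholds(low: float, high: float, edge_fraction: float,
                      target: tuple[float, float] = (0.05, 0.15),
                      step: float = 0.05) -> tuple[float, float]:
    """Nudge both Canny thresholds toward a hypothetical target edge density."""
    if edge_fraction > target[1]:      # too many edges: raise thresholds
        delta = step
    elif edge_fraction < target[0]:    # too few edges: lower thresholds
        delta = -step
    else:
        return low, high
    new_low = min(max(low + delta, 0.0), 1.0)
    new_high = min(max(high + delta, 0.0), 1.0)
    if new_low >= new_high:            # clamping collapsed the gap: keep old values
        return low, high
    return round(new_low, 2), round(new_high, 2)
```

Shifting both thresholds together keeps the gap between them, so edge continuity behaves similarly while the overall amount of detected edges moves in the requested direction.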

Positive prompts should describe the style and details you want as specifically as possible

Negative prompts should list the elements you want to avoid

Prompts should relate to the content of the original image

If the generated image is too blurry: increase the cfg value

If the image does not follow the edges closely enough: increase the strength value

If the image lacks detail: increase the steps value
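These fixes map each symptom to one parameter. The sketch below encodes that mapping; the symptom keys, step sizes, and caps are hypothetical choices, since the tutorial only says which value to increase.

```python
from __future__ import annotations

# symptom -> (parameter to increase, step size, upper cap); sizes and caps are hypothetical.
FIXES = {
    "too_blurry": ("cfg", 0.5, 12.0),
    "weak_edge_following": ("strength", 0.1, 1.5),
    "lacks_detail": ("steps", 5, 50),
}


def apply_fix(settings: dict, symptom: str) -> dict:
    """Return a copy of settings with the parameter for `symptom` raised one step."""
    name, step, cap = FIXES[symptom]
    updated = dict(settings)
    updated[name] = min(round(settings[name] + step, 2), cap)
    return updated
```

Changing one parameter per run makes it easier to tell which adjustment actually helped.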

The following are some common usage scenarios and corresponding parameter settings:

| Parameter | Setting 1 | Setting 2 |
|---|---|---|
| low_threshold | 0.2 | 0.4 |
| high_threshold | 0.5 | 0.7 |
| strength | 1.0 | 0.8 |
| steps | 25 | 30 |
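If you drive ComfyUI with workflows exported in its API format (a dict of node id to {"class_type": ..., "inputs": {...}}), the two example settings above can be written into the relevant nodes programmatically. This is a sketch under that assumption; CannyPreset, SETTING_1, SETTING_2, and apply_preset are illustrative names, and the input names low_threshold, high_threshold, strength, and steps are the node parameters discussed in this tutorial.

```python
from __future__ import annotations

import copy
from dataclasses import dataclass


@dataclass(frozen=True)
class CannyPreset:
    low_threshold: float
    high_threshold: float
    strength: float
    steps: int


# The two example settings above.
SETTING_1 = CannyPreset(low_threshold=0.2, high_threshold=0.5, strength=1.0, steps=25)
SETTING_2 = CannyPreset(low_threshold=0.4, high_threshold=0.7, strength=0.8, steps=30)


def apply_preset(workflow: dict, preset: CannyPreset) -> dict:
    """Return a copy of an API-format workflow with the preset written into
    every Canny, ControlNetApplyAdvanced, and KSampler node."""
    updates = {
        "Canny": {"low_threshold": preset.low_threshold,
                  "high_threshold": preset.high_threshold},
        "ControlNetApplyAdvanced": {"strength": preset.strength},
        "KSampler": {"steps": preset.steps},
    }
    patched = copy.deepcopy(workflow)  # leave the caller's workflow untouched
    for node in patched.values():
        node["inputs"].update(updates.get(node["class_type"], {}))
    return patched
```

Matching on class_type rather than node id means the same preset works regardless of how the nodes happen to be numbered in a given export.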