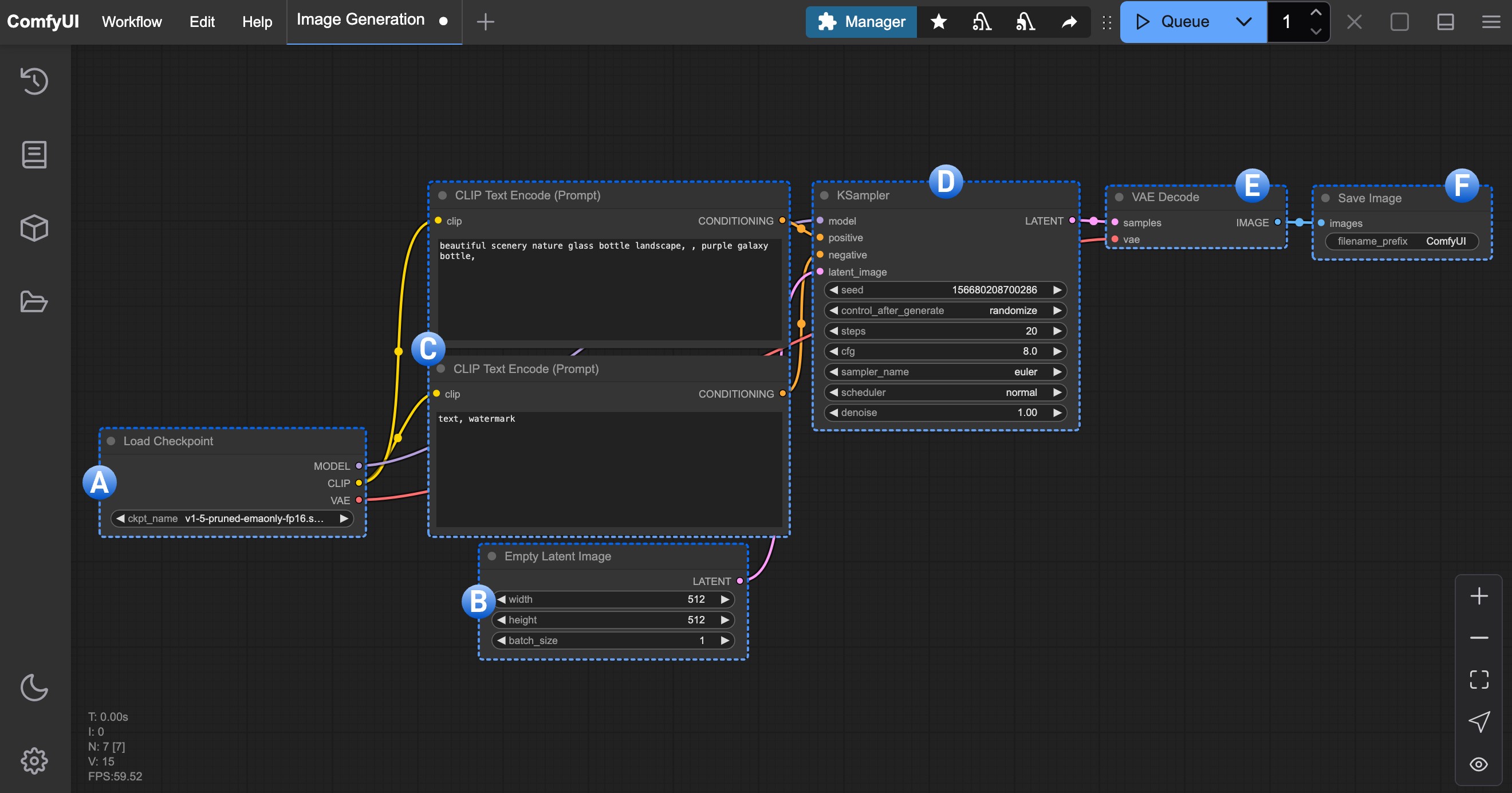

Text to Image is the basic process in AI drawing. The corresponding picture is generated by inputting text descriptions. Its core is the diffusion model.

In the process of literary and graphic drawings, we need the following conditions:

1. Painter: Drawing Model

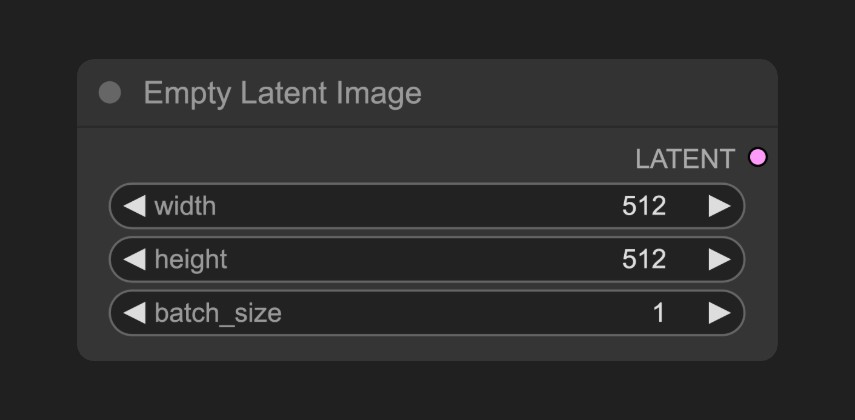

2. Canvas: potential space

3.**Requirements for the picture (prompt words):**Prompt words, including positive prompt words (elements that want to appear in the picture) and negative prompt words (elements that don't want to appear in the picture)

This text-to-image generation process can be simply understood as telling a painter (drawing model) your drawing requirements (positive prompt words, negative prompt words) and the painter will draw what you want according to your requirements.

Please make sure you have at least one SD1.5 model file in the ComfyUI/models/checkpoints folder.

You can use these models:

1. v1-5-pruned-emaonly-fp16.safetensors

3. Anything V5

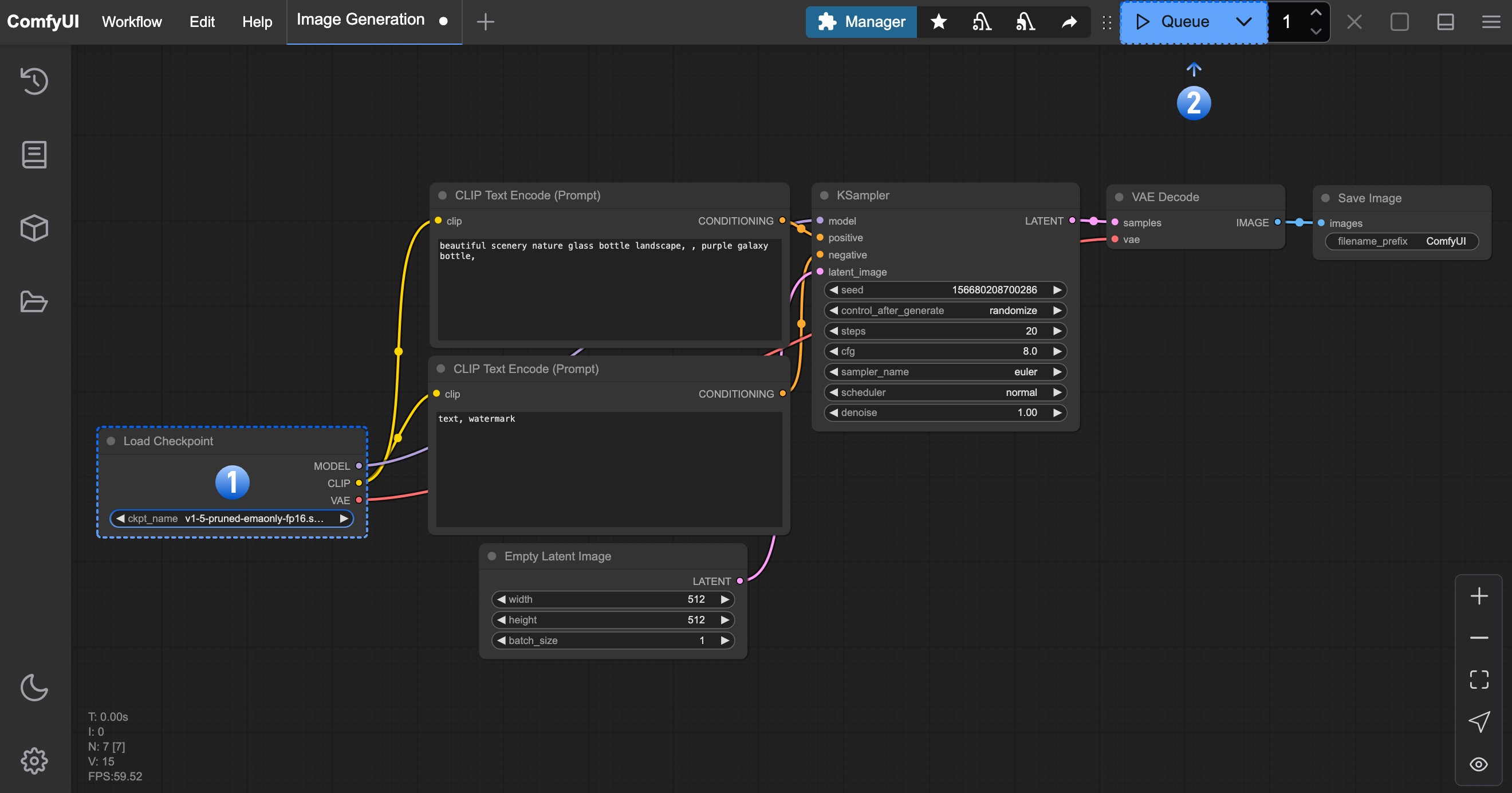

Please download the image below and drag the image into the ComfyUI interface, or use the menu Workflows -> Open Open this image to load the corresponding workflow

You can also select Text to Image from the menu Workflows -> Browse example workflows

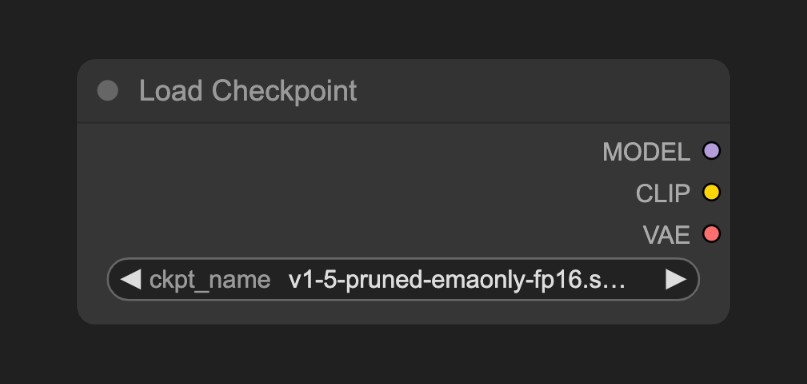

After completing the installation of the corresponding drawing model, please refer to the steps below to load the corresponding model and perform the first image generation

Please correspond to the picture number and complete the following operations

1. Please use arrows in the Load Checkpoint node or click on the text area to ensure that v1-5-pruned-emaonly-fp16.safetensors is selected, and the left and right switching arrows will not show null text.

2. Click the Queue button, or use the shortcut key Ctrl + Enter to perform image generation

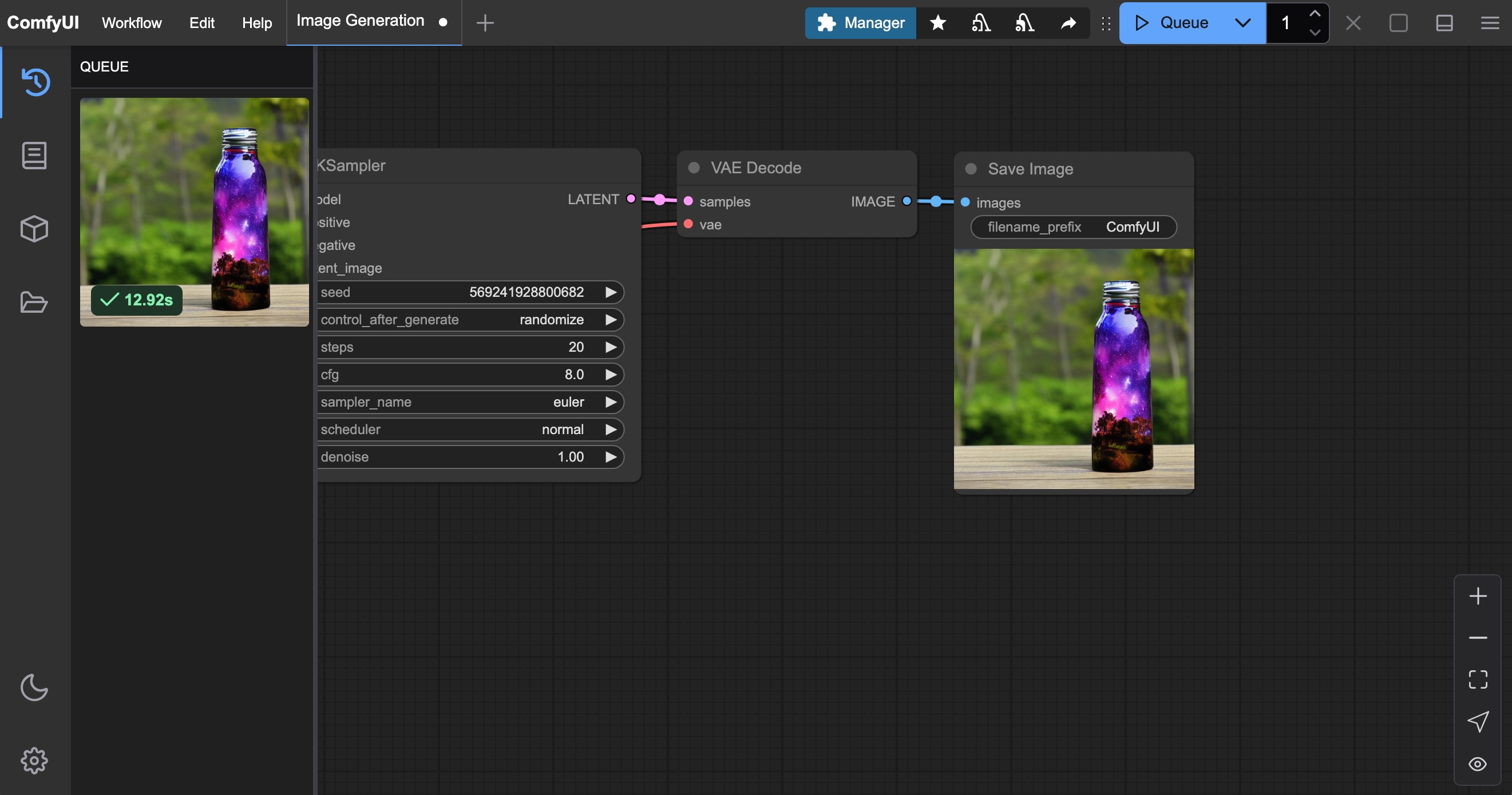

After waiting for the corresponding process to be executed, you should be able to see the corresponding image results in the Save Image node of the interface. You can right-click to save it to the local area.

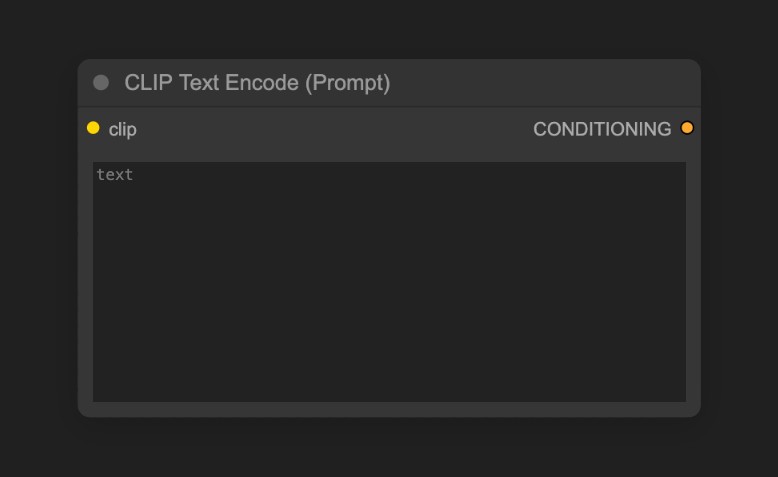

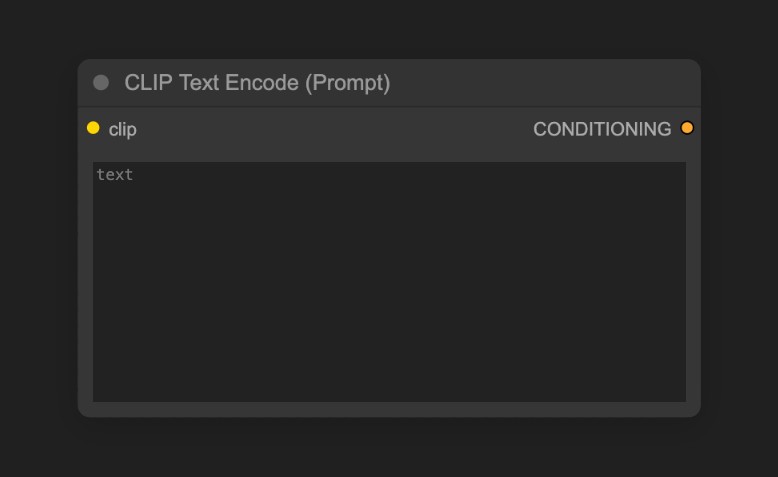

You can try to modify the text at CLIP Text Encoder

Where the Positive connected to the KSampler node is a positive prompt word, and the Negative connected to the KSampler node is a negative prompt word

Here are some simple prompt word principles for the SD1.5 model

Try to use English

Use English commas to separate the prompt words

Try to use phrases rather than long sentences

Use more specific description

You can use expressions like (golden hour:1.2) to increase the weight of a specific keyword, so that it will appear in the picture with a higher probability. 1.2 is the weight and golden hour is the keyword.

You can use keywords such as masterpiece, best quality, 4k to improve the generation quality

Here are a few different sets of propt examples that you can try to use to see the generated effect, or use your own propt to try to generate

1. Two-dimensional animation style

Positive prompt words:

anime style, 1girl with long pink hair, cherry blossom background, studio ghibli aesthetic, soft lighting, intricate detailsmasterpiece, best quality, 4k

Negative prompt words:

low quality, blurry, deformed hands, extra fingers

2. Realistic style

Positive prompt words:

(ultra realistic portrait:1.3), (elegant woman in crisis silk dress:1.2),full body, soft cinematic lighting, (golden hour:1.2),(fujifilm XT4:1.1),shall depth of field,(skin texture details:1.3), (film grain:1.1),gentle wind flow, warm color grading, (perfect facial symmetry:1.3)

Negative prompt words:

(deformed, cartoon, anime, doll, plastic skin, overexposed, blurry, extra fingers)

3. Specific artist style

Positive prompt words:

fantasy elf, detailed character, glowing magic, vibrant colors, long flowing hair, elegant armor, ethereal beauty, mysterious forest, magical aura, high detail, soft lighting, fantasy portrait, Artgerm style

Negative prompt words:

blurry, low detail, cartoonish, unrealistic anatomy, out of focus, cluttered, flat lighting

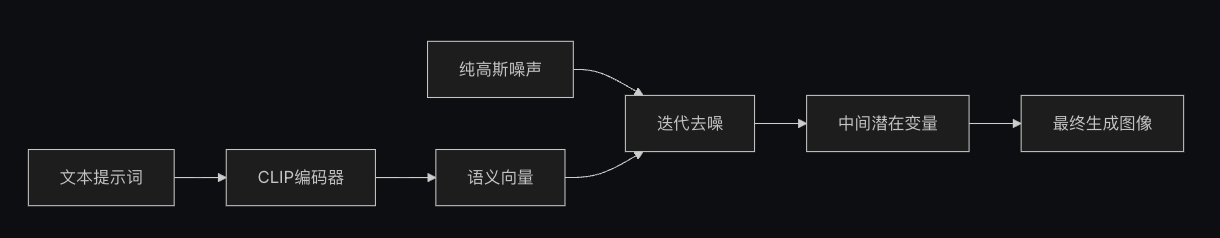

The entire process of literary genres can be understood as the counter-diffusion process of the diffusion model. The v1-5-pruned-emaonly-fp16.safetensors we downloaded is a model that has been trained to generate target images from pure Gaussian noise. We only need to enter our prompt word, and it can generate target images through random noise reduction.

We may need to understand the following two concepts,

1. Latent Space: Latent Space is an abstract data representation method in diffusion model. By converting the image from pixel space to potential space, the storage space of the image can be reduced, and it can be easier to train the diffusion model and reduce the complexity of noise reduction. Just like when architects design buildings, they use blueprints (potential space) instead of directly designing on the building (pixel space). This method can maintain structural features while greatly reducing the modification cost.

2. Pixel Space: Pixel Space is the storage space of the picture, which is the picture we finally see, used to store the pixel value of the picture.

This node is usually used to load the drawing model. Usually, checkpoint will contain three components: MODEL (UNet), CLIP and VAE.

1.MODEL (UNet): is the UNet model of the corresponding model, responsible for noise prediction and image generation during diffusion process, and drives the diffusion process

2.CLIP: This is a text encoder. Because the model cannot directly understand our text prompt word (prompt), we need to encode our text prompt word (prompt) into a vector and convert it into a semantic vector that the model can understand.

3.VAE: This is a variational autoencoder. Our diffusion model processes potential space, while our picture is pixel space, so the picture needs to be converted into potential space, then diffused, and finally the potential space is converted into pictures.

Define a latent space (Latent Space), which is output to the KSampler node. The empty Latent image node builds a purely noise potential space

Its specific function can be understood as defining the size of the canvas, which is the size of the image we finally generate

Used to encode prompt words, that is, enter your requirements for the picture

1. The positive prompt word entered in the Positive condition connected to the KSampler node (elements that want to appear in the screen)

2. The Negative prompt word entered in the Negative condition connected to the KSampler node (elements that do not want to appear in the screen)

The corresponding prompt word is encoded as a semantic vector by the CLIP component from the Load Checkpoint node and output as a condition to the KSampler node.

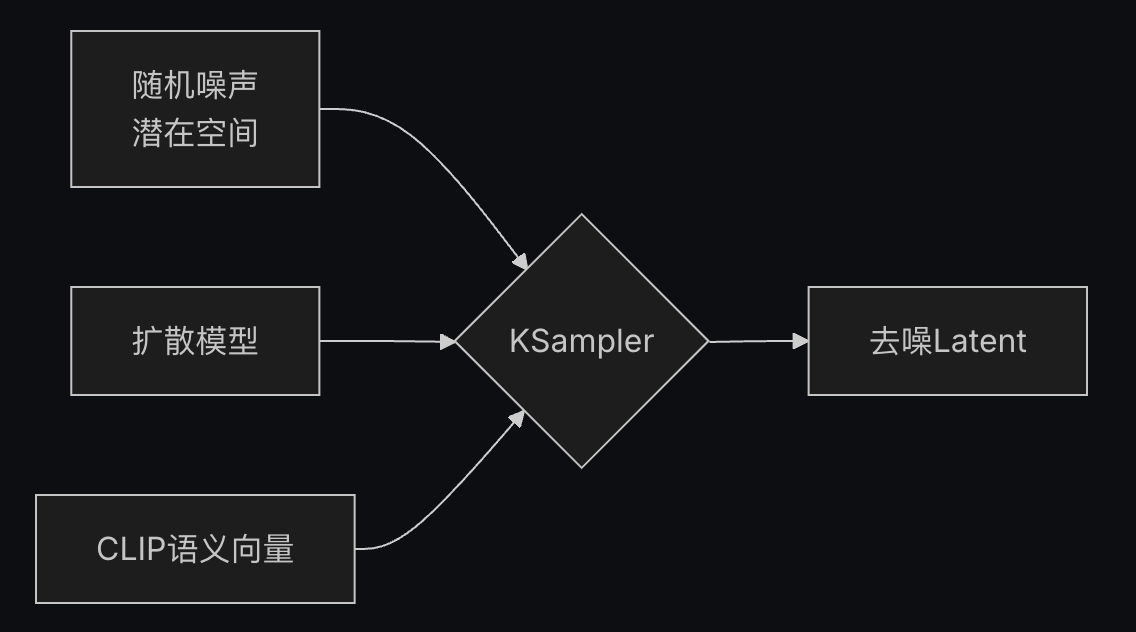

The K sampler is the core of the entire workflow. The entire noise reduction process is completed in this node and a latent space image is finally output.

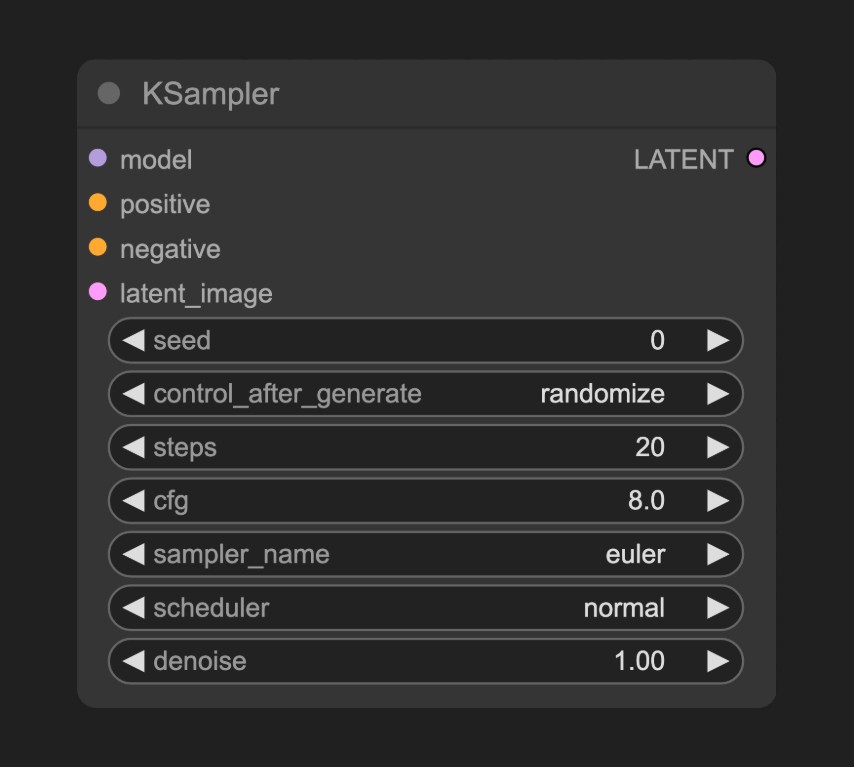

The parameters of the KSampler node are as follows

| Parameter name | describe | effect |

|---|---|---|

| model | Diffusing model for denoising | Determine the style and quality of the generated image |

| Positive | Forward prompt word conditional encoding | Boot the creation of content containing the specified element |

| negative | Negative prompt word conditional encoding | Suppress the generation of undesirable content |

| latent_image | Potential spatial image to be denoised | As input carrier for noise initialization |

| seed | Random seeds of noise generation | Control the randomness of generated results |

| control_after_generate | Control mode after seed generation | Determine the change rules of seeds when multiple batches are generated |

| steps | Number of steps for denoising iteration | The more steps, the more detailed the details, but the time is increased |

| cfg | Classifier free boot coefficient | Control the constraint intensity of prompt word (too high leads to overfitting) |

| sampler_name | Sampling algorithm name | Mathematical method for denoising the denoising path |

| scheduler | Scheduler type | Control noise attenuation rate and step size allocation |

| denoise | Noise reduction intensity coefficient | Controls the noise intensity added to the latent space, 0.0 retains the original input characteristics, and 1.0 is complete noise |

In the KSampler node, the latent space uses seed as initialization parameters to build random noise, and the semantic vectors Positive and Negative are input as conditions into the diffusion model

Then, according to the number of denoising steps specified by the steps parameter, denoising will denoising the latent space according to the denoise intensity coefficient specified by the denoise parameter, and generate a new latent space image

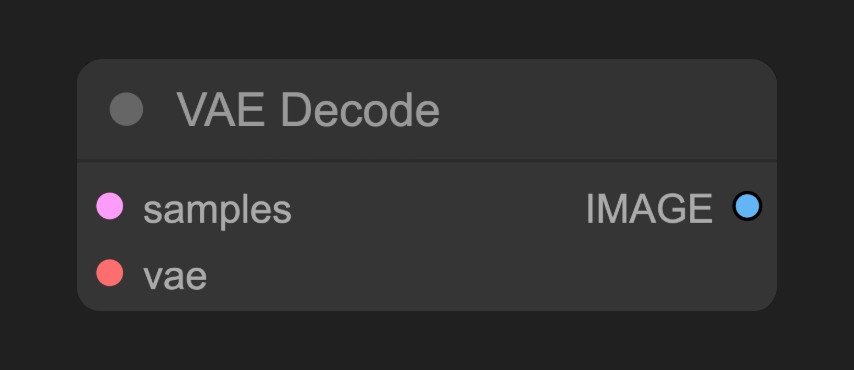

Convert potential spatial images output by the KSampler to pixel-space images

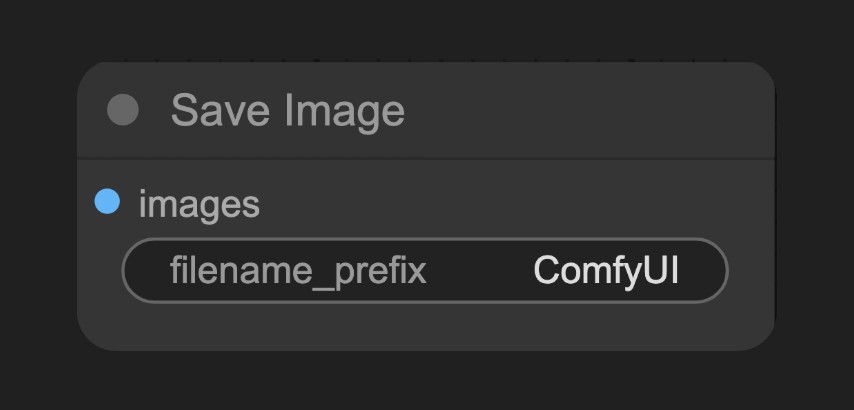

Preview and save the image decoded from the latent space and save it to the local ComfyUI/output folder

SD1.5 (Stable Diffusion 1.5) is an AI drawing model developed by Stability AI . The basic version of the Stable Diffusion series is trained based on 512×512 resolution image, so it has good support for 512×512 resolution image generation, with a volume of about 4GB, and can run smoothly on ** consumer graphics cards (such as 6GB graphics memory). Currently, SD1.5 has a very rich surrounding ecosystem, and it supports a wide range of plug-ins (such as ControlNet, LoRA) and optimization tools. As a milestone model in the field of AI painting, SD1.5 is still the best entry-level choice thanks to its open source features, lightweight architecture and rich ecosystem. Although upgraded versions such as SDXL/SD3 are launched in the future, their cost-effectiveness in consumer hardware is still irreplaceable.

Release time: October 2022

Core architecture: Based on Latent Diffusion Model (LDM)

Training data: LAION-Aesthetics v2.5 dataset (about 590 million steps of training)

Open source features: fully open source model/code/training data

Model Advantages:

Lightweight: Small size, only about 4GB, running smoothly on consumer graphics cards

Low barrier to use: Supports a wide range of plug-ins and optimization tools

Eco-maturity: Supports a wide range of plug-ins and optimization tools

Fast generation speed: run smoothly on consumer graphics cards

Model limitations:

Details: Hands/complex light and shadows are prone to distortion

Resolution limit: Directly generate 1024x1024 quality degradation

Prompt word dependency: need to accurately describe the control effect in English